In recent years, large language models (LLMs) have reshaped natural language processing (NLP), generating fluent, human-like text across a wide range of tasks. Two of their most useful capabilities are zero-shot and few-shot learning, which let these models perform tasks with little or no task-specific training data.

This post explores the mechanisms behind zero-shot and few-shot learning, their practical applications, and the challenges and future directions of the technology.

Understanding Zero-Shot and Few-Shot Learning

Zero-shot Learning

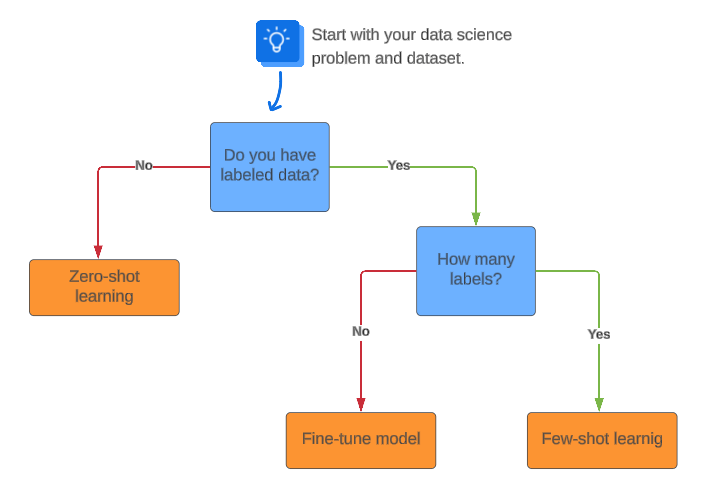

Zero-shot learning allows a model to perform a task without any task-specific training or examples. For example, an LLM might generate a poem or answer a question about an unfamiliar topic based purely on the understanding of language and context it acquired during pre-training.

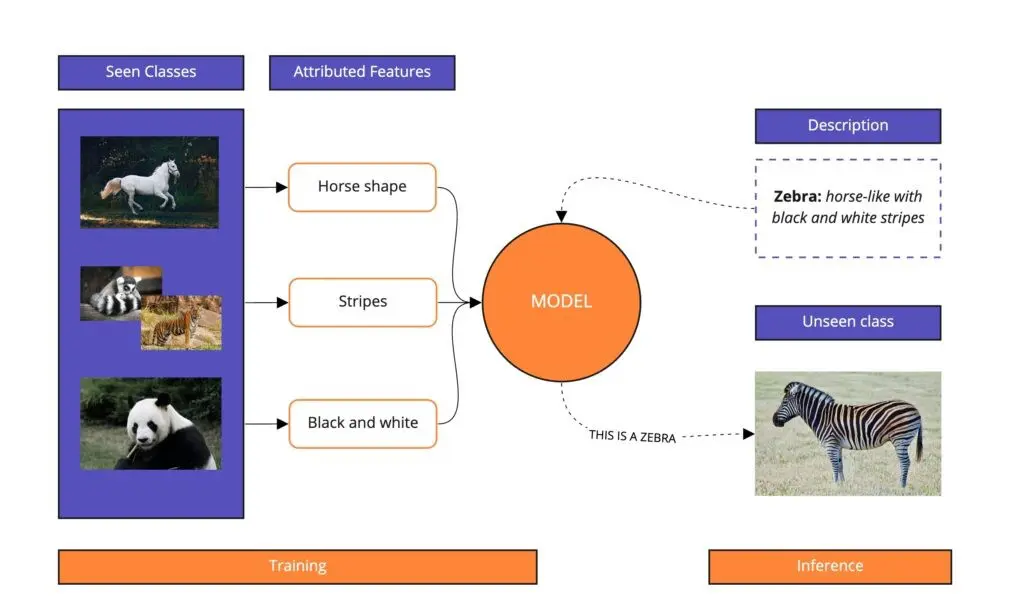

The image illustrates the concept of zero-shot learning, where a model can identify an unseen class based on attributes learned from seen classes. In the training phase, the model learns various features from known classes (e.g., a horse for its shape, a tiger for its stripes, and a panda for its black and white coloring). During inference, the model uses these learned attributes to identify an unseen class, in this case, a zebra, by recognizing it as a horse-like animal with black and white stripes. This demonstrates how zero-shot learning enables models to generalize from learned features to accurately classify previously unseen data.
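The attribute-matching idea in the figure can be sketched in a few lines of Python. This is a toy illustration, not a real vision model: the attribute names, the binary signatures, and the `classify_by_attributes` helper are all assumptions made for the example, and the "detected" attributes stand in for the output of detectors trained only on the seen classes.

```python
# Hypothetical attribute signatures: 1 means the class has the attribute.
# Attribute order: (horse_shaped, striped, black_and_white)
CLASS_ATTRIBUTES = {
    "horse": (1, 0, 0),
    "tiger": (0, 1, 0),
    "panda": (0, 0, 1),
    "zebra": (1, 1, 1),  # unseen class: described by attributes only, no training images
}


def classify_by_attributes(predicted, class_attributes):
    """Return the class whose signature is closest (Hamming distance) to the prediction."""
    def distance(signature):
        return sum(p != s for p, s in zip(predicted, signature))
    return min(class_attributes, key=lambda name: distance(class_attributes[name]))


# Pretend the attribute detectors (trained on seen classes only) fired like this:
detected = (1, 1, 1)
print(classify_by_attributes(detected, CLASS_ATTRIBUTES))  # zebra
```

The model never sees a zebra during training; it only needs a description of the zebra in terms of attributes it already knows how to detect.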

Few-shot Learning

Few-shot learning, on the other hand, involves providing the model with a small number of examples of the task, typically placed directly in the prompt. These demonstrations help the model adapt to a new task quickly, usually without updating its weights. For instance, showing a model a few English sentences paired with their Spanish translations enables it to generalize and translate new sentences.
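With LLMs, the "few examples" are usually written straight into the prompt. Here is a minimal sketch of how such a prompt might be assembled for the translation case; the `build_few_shot_prompt` helper and the `English:` / `Spanish:` layout are illustrative choices, not a required format.

```python
def build_few_shot_prompt(examples, query, source="English", target="Spanish"):
    """Format (source, target) pairs as in-context demonstrations, then append the query."""
    lines = [f"Translate {source} to {target}.", ""]
    for src, tgt in examples:
        lines.append(f"{source}: {src}")
        lines.append(f"{target}: {tgt}")
        lines.append("")
    # End with an open slot so the model continues with the translation.
    lines.append(f"{source}: {query}")
    lines.append(f"{target}:")
    return "\n".join(lines)


examples = [
    ("Good morning.", "Buenos días."),
    ("Where is the library?", "¿Dónde está la biblioteca?"),
    ("Thank you very much.", "Muchas gracias."),
]
prompt = build_few_shot_prompt(examples, "I would like a coffee.")
print(prompt)
```

The resulting string can be sent to any text-completion model; the demonstrations show the pattern, and the trailing `Spanish:` invites the model to complete it.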

Historical Context and Evolution

Historically, machine learning models required extensive training on large, labeled datasets to perform specific tasks. The emergence of LLMs like OpenAI’s GPT-3 and GPT-4 marked a significant shift. These models, trained on diverse datasets encompassing a wide range of topics, exhibited the ability to generalize from very few examples, sparking a new era in AI development.

Mechanisms Enabling Zero-Shot and Few-Shot Learning

Role of Transformers and Attention Mechanisms

The architecture of transformers is at the heart of LLMs’ capabilities. Transformers use self-attention mechanisms to weigh the importance of different words in a sentence, allowing the model to understand context and relationships between words effectively. This architecture enables LLMs to generate coherent and contextually appropriate text even in zero-shot and few-shot scenarios.
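To make the "weighing" concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a simplification under stated assumptions: the token vectors are made-up numbers, and the same vectors are used as queries, keys, and values, whereas real transformer layers apply learned projections and use several attention heads.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: each position mixes all values by query-key similarity."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Toy 2-dimensional vectors for three tokens (made-up numbers, not real embeddings).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one token attends to every token in the sequence; the weights in a row sum to 1.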

```python
from transformers import GPT2Tokenizer, GPT2Model

# Initialize the tokenizer and model
tokenizer = GPT2Tokenizer.from_pretrained('gpt2')
model = GPT2Model.from_pretrained('gpt2')

# Tokenize input text
input_text = "Explain the concept of zero-shot learning."
input_ids = tokenizer.encode(input_text, return_tensors='pt')

# Run a forward pass. GPT2Model returns contextual hidden states for each token,
# not generated text.
outputs = model(input_ids)
```

Prompt-Based Learning Techniques

Prompt engineering is crucial in zero-shot and few-shot learning. By crafting specific and clear prompts, users can guide LLMs to produce desired outputs. For example, a well-designed prompt can instruct the model to write a news article, generate a summary, or answer a complex question accurately.
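One way to keep prompts specific is to assemble them from explicit parts: the task, any constraints, and the expected output format. The sketch below is only one possible layout; the `build_zero_shot_prompt` helper and its field names are assumptions made for illustration.

```python
def build_zero_shot_prompt(task, text, output_format, constraints=()):
    """Assemble an instruction-only prompt: no examples, just a clear specification."""
    parts = [f"Task: {task}"]
    if constraints:
        parts.append("Constraints:")
        parts.extend(f"- {c}" for c in constraints)
    parts.append(f"Output format: {output_format}")
    parts.append("")
    parts.append(f"Text: {text}")
    parts.append("Answer:")
    return "\n".join(parts)


specific_prompt = build_zero_shot_prompt(
    task="Summarize the text for a general audience.",
    text="Transformers use self-attention to relate every word in a sentence to every other word.",
    output_format="One sentence of at most 25 words.",
    constraints=["Do not add facts that are not in the text.", "Avoid jargon."],
)
print(specific_prompt)
```

Compared with a bare "Summarize this.", the structured version states the audience, the length, and what to avoid, which leaves the model less room to guess.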

Meta-Learning Strategies

Meta-learning, or “learning to learn,” is another key strategy. It involves training models on a variety of tasks so they can quickly adapt to new ones with minimal data. This approach leverages the generalization abilities of LLMs, allowing them to perform well in zero-shot and few-shot settings.
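Classical meta-learning setups often organize training into small "episodes", each one a miniature task with a few labeled examples to adapt on and a few held-out examples to evaluate on. The following sketch shows only the episode-sampling step, under the assumption of a toy labeled dataset; the `sample_episode` function and the dataset are hypothetical, and no model is trained here.

```python
import random


def sample_episode(dataset, n_way, k_shot, n_query, rng):
    """Draw one N-way K-shot task: a support set to adapt on and a query set to evaluate on."""
    classes = rng.sample(sorted(dataset), n_way)
    support, query = [], []
    for label in classes:
        # Sample without replacement so support and query never share an item.
        items = rng.sample(dataset[label], k_shot + n_query)
        support += [(x, label) for x in items[:k_shot]]
        query += [(x, label) for x in items[k_shot:]]
    return support, query


# Toy dataset (hypothetical): label -> list of example identifiers.
dataset = {label: [f"{label}_{i}" for i in range(10)] for label in ["cat", "dog", "bird", "fish"]}
rng = random.Random(0)
support, query = sample_episode(dataset, n_way=3, k_shot=2, n_query=1, rng=rng)
print(len(support), len(query))  # 6 3
```

Training across many such episodes, each with a different mix of classes, is what pushes a model toward adapting quickly rather than memorizing any single task.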

Practical Applications

Case Studies and Real-World Examples

- OpenAI’s GPT-3 and GPT-4: These models have demonstrated remarkable zero-shot and few-shot learning capabilities across diverse tasks, from writing essays and creating code to answering questions and generating creative content.

- Healthcare: In medical research, LLMs can summarize vast amounts of medical literature or generate patient-specific treatment plans with minimal input.

- Finance: Financial analysts use LLMs for market analysis and report generation, significantly reducing the time and effort required to produce comprehensive reports.

Industry-Specific Applications

- Customer Service: LLMs can handle customer queries and provide support with minimal training data, improving response times and customer satisfaction.

- Education: Personalized tutoring systems can generate customized learning materials and explanations based on a few examples provided by educators.

Comparative Analysis

Zero-Shot vs. Few-Shot vs. Traditional Supervised Learning

| Aspect | Zero-Shot Learning | Few-Shot Learning | Traditional Supervised Learning |
| --- | --- | --- | --- |
| Data Requirement | None | Minimal | Large labeled dataset |
| Adaptability | High | Moderate | Low |
| Training Time | Very Low | Low | High |
| Flexibility | Very High | High | Low |

Benefits and Limitations of Each Approach

Zero-Shot Learning:

- Benefits: No need for task-specific training data, highly flexible.

- Limitations: May produce less accurate results for highly specialized tasks.

Few-Shot Learning:

- Benefits: Requires minimal data, quickly adapts to new tasks.

- Limitations: Performance depends on the quality and relevance of the provided examples.

Traditional Supervised Learning:

- Benefits: High accuracy for well-defined tasks with abundant data.

- Limitations: Requires extensive labeled data, less adaptable to new tasks.

Challenges and Limitations

Common Pitfalls and Issues

- Ambiguity in Prompts: Poorly crafted prompts can lead to ambiguous or irrelevant outputs.

- Bias and Ethical Concerns: LLMs can reflect biases present in their training data, leading to ethical issues.

- Generalization Limits: While LLMs are powerful, they can struggle with tasks that require deep domain-specific knowledge.

Addressing Bias and Ethical Concerns

Efforts to mitigate biases include refining training datasets, developing bias detection and correction techniques, and implementing ethical guidelines for AI usage.

Data Requirements and Quality

Even in zero-shot and few-shot scenarios, the quality of both the pre-training data and the few examples provided is critical. Ensuring high-quality, diverse data helps improve model performance and generalization.
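Since the relevance of the provided examples matters, one simple tactic is to pick, from a larger pool, the examples most similar to the incoming query. The sketch below uses plain word overlap (Jaccard similarity) as a stand-in for similarity; that choice, the helper names, and the small example pool are all assumptions for illustration, and practical systems often compare embeddings instead.

```python
def tokenize(text):
    """Lowercase and split on whitespace, stripping basic punctuation."""
    return {word.strip(".,!?¿¡").lower() for word in text.split()} - {""}


def jaccard(a, b):
    """Word-overlap similarity between two token sets, from 0.0 to 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_examples(pool, query, k):
    """Pick the k pool examples whose input text overlaps most with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(pool, key=lambda ex: jaccard(tokenize(ex[0]), query_tokens), reverse=True)
    return ranked[:k]


# Hypothetical pool of (input text, label) pairs.
pool = [
    ("The battery drains too fast.", "complaint"),
    ("How do I reset my password?", "question"),
    ("Great service, thank you!", "praise"),
    ("My password reset email never arrived.", "complaint"),
]
chosen = select_examples(pool, "I cannot reset my password.", k=2)
print(chosen)
```

The two selected examples are the ones about password resets, which are more likely to help the model than unrelated examples about batteries or praise.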

Future Directions

Emerging Research and Potential Advancements

Research is ongoing to enhance the capabilities of LLMs further. Innovations in prompt engineering, improved training techniques, and hybrid models combining multiple AI approaches are areas of active development.

Integration with Other AI Technologies

Combining LLMs with other AI technologies, such as reinforcement learning and computer vision, can create more robust and versatile AI systems.

Prospects for Broader Adoption

As zero-shot and few-shot learning techniques mature, they will likely see broader adoption across various industries, driving efficiency and innovation.

Zero-shot and few-shot learning represent significant advancements in the field of AI, enabling large language models to perform a wide range of tasks with minimal data. By understanding and leveraging these capabilities, industries can unlock new possibilities and improve efficiency. As research and development continue, the potential for zero-shot and few-shot learning will only grow, shaping the future of AI and its applications.