GPT-4 to LLaMA2 Migration on AWS for Enterprise SaaS Analytics

Business Problem

About Lyzr.ai

Lyzr is an enterprise generative AI company that offers private and secure AI Agent SDKs and an AI Management System. Lyzr helps enterprises build, launch, and manage secure GenAI applications in their own AWS cloud or on-premises infrastructure, so they no longer need to share sensitive data with SaaS platforms or GenAI wrappers, or contend with the reliability and integration issues of open-source tools.

Solution

After evaluation by goML's data scientists, LLaMA2 was selected as the target LLM for the migration because it met the above-mentioned criteria. The migration moved the existing chatbot system from ChatGPT to LLaMA2 to take advantage of LLaMA2's enhanced features.
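Moving a chatbot off ChatGPT typically means converting OpenAI-style role/content message lists into the prompt format that LLaMA-2-chat models were trained on. The case study does not describe how this conversion was done; the following is a minimal sketch, assuming Meta's published `[INST]`/`<<SYS>>` chat format. `to_llama2_prompt` is a hypothetical helper name.

```python
from typing import Dict, List

# Delimiters from Meta's reference chat format for LLaMA-2-chat models.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"
BOS, EOS = "<s>", "</s>"


def to_llama2_prompt(messages: List[Dict[str, str]]) -> str:
    """Convert OpenAI-style chat messages into one LLaMA-2-chat prompt string.

    Expects an optional leading system message, then alternating
    user/assistant turns that end with a user turn.
    """
    msgs = list(messages)
    if msgs and msgs[0]["role"] == "system":
        system = msgs.pop(0)["content"]
        if not msgs:
            raise ValueError("a system message must be followed by a user message")
        # LLaMA-2-chat has no separate system turn: fold it into the first user message.
        first = msgs[0]
        msgs[0] = {"role": first["role"], "content": B_SYS + system + E_SYS + first["content"]}

    if not msgs:
        raise ValueError("at least one user message is required")
    roles = [m["role"] for m in msgs]
    expected = ["user" if i % 2 == 0 else "assistant" for i in range(len(msgs))]
    if roles != expected or roles[-1] != "user":
        raise ValueError("expected alternating user/assistant turns ending with a user turn")

    parts = []
    # Each completed (user, assistant) exchange is wrapped in BOS ... EOS.
    for user, assistant in zip(msgs[0:-1:2], msgs[1::2]):
        parts.append(
            f"{BOS}{B_INST} {user['content'].strip()} {E_INST} "
            f"{assistant['content'].strip()} {EOS}"
        )
    # The final user turn is left open for the model to complete.
    parts.append(f"{BOS}{B_INST} {msgs[-1]['content'].strip()} {E_INST}")
    return "".join(parts)
```

If the serving stack or tokenizer inserts the BOS token on its own, the literal leading `<s>` may need to be dropped to avoid a duplicate.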

goML's GenAI team broke the overall scope into three parts:

Migrating to LLaMA2

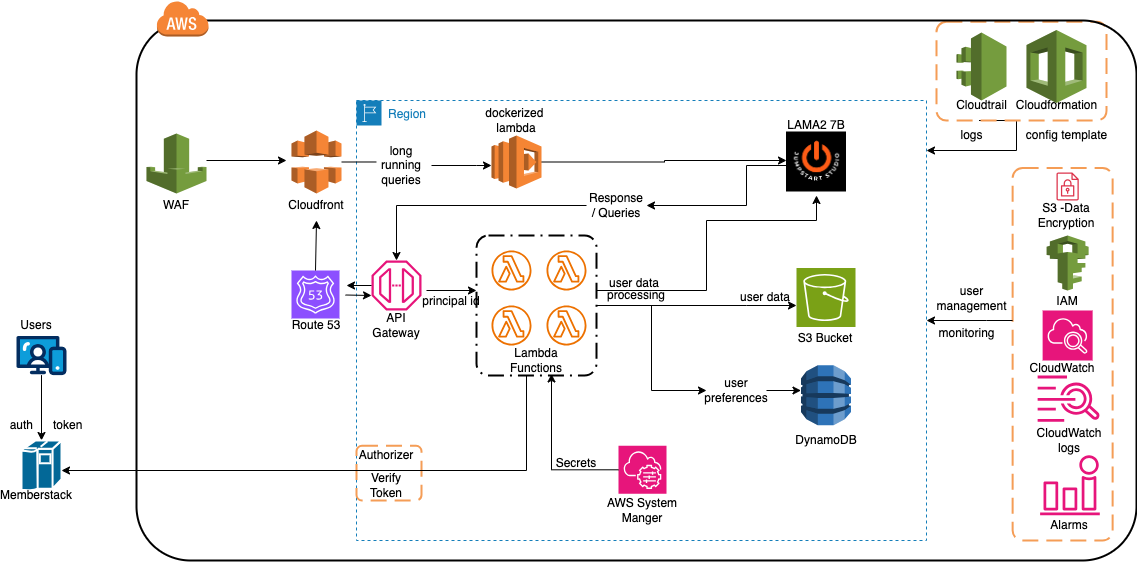

The core components of the chatbot system were migrated from GPT-4 to a hosted version of LLaMA2. The new system was hosted natively on AWS with a serverless architecture, using AWS Lambda-based microservices for efficiency and scalability.
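A Lambda-based microservice in this kind of design is often a thin handler that validates the request, forwards it to wherever the model is served, and returns JSON. The source does not say how LLaMA2 was served behind the Lambdas; this sketch assumes an API Gateway proxy event and, for production, a SageMaker real-time endpoint running a Hugging Face text-generation container. `make_handler`, `sagemaker_invoker`, and the `LLAMA2_ENDPOINT` environment variable are hypothetical names.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical backend signature: (prompt, generation params) -> generated text.
InvokeFn = Callable[[str, Dict[str, Any]], str]


def sagemaker_invoker(endpoint_name: str) -> InvokeFn:
    """Assumed backend: a SageMaker real-time endpoint serving LLaMA2 through a
    Hugging Face text-generation container ({"inputs", "parameters"} payload)."""
    import boto3  # lazy import keeps the handler logic testable without AWS

    client = boto3.client("sagemaker-runtime")

    def invoke(prompt: str, params: Dict[str, Any]) -> str:
        resp = client.invoke_endpoint(
            EndpointName=endpoint_name,
            ContentType="application/json",
            Body=json.dumps({"inputs": prompt, "parameters": params}),
        )
        # The container is assumed to return [{"generated_text": "..."}].
        return json.loads(resp["Body"].read())[0]["generated_text"]

    return invoke


def _response(status: int, body: Dict[str, Any]) -> Dict[str, Any]:
    # Response shape expected by API Gateway's Lambda proxy integration.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def make_handler(invoke: InvokeFn) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Build a Lambda handler that validates a chat request and calls `invoke`."""

    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        try:
            payload = json.loads(event.get("body") or "{}")
            params = {
                "max_new_tokens": int(payload.get("max_new_tokens", 256)),
                "temperature": float(payload.get("temperature", 0.2)),
            }
        except (ValueError, TypeError, AttributeError):
            return _response(400, {"error": "invalid request body"})
        prompt = payload.get("prompt")
        if not isinstance(prompt, str) or not prompt.strip():
            return _response(400, {"error": "'prompt' is required"})
        return _response(200, {"completion": invoke(prompt, params)})

    return handler


# Hypothetical wiring in the deployed function:
# lambda_handler = make_handler(sagemaker_invoker(os.environ["LLAMA2_ENDPOINT"]))
```

Injecting the model call as a function keeps each microservice unit-testable without AWS credentials, and switching model backends only means passing a different invoker.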

Fine-tuning LLaMA2-7B

The 7B version of LLaMA2 was instruction-tuned with the goal of meeting or exceeding the performance of the existing OpenAI models. This process refined the model for accuracy and for high-quality output tailored to the client's needs.
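Instruction tuning is commonly implemented as supervised fine-tuning on (instruction, response) pairs, with the loss computed only on the response tokens. The case study does not detail goML's data pipeline; this is a minimal sketch of that common preprocessing step, assuming the tokenizer is exposed as a plain `tokenize` callable and that training uses PyTorch's default cross-entropy `ignore_index` of -100. `build_sft_example` is a hypothetical name.

```python
from typing import Callable, Dict, List

IGNORE_INDEX = -100  # label value skipped by torch.nn.CrossEntropyLoss by default


def build_sft_example(
    instruction: str,
    response: str,
    tokenize: Callable[[str], List[int]],
    eos_id: int,
    max_len: int = 512,
) -> Dict[str, List[int]]:
    """Turn one (instruction, response) pair into input_ids and labels.

    Prompt positions are masked with IGNORE_INDEX so that only the response
    (plus EOS) contributes to the loss. BOS is assumed to be added elsewhere
    (for example by the tokenizer or the data collator).
    """
    prompt_ids = tokenize(f"[INST] {instruction.strip()} [/INST]")
    response_ids = tokenize(f" {response.strip()}") + [eos_id]
    input_ids = (prompt_ids + response_ids)[:max_len]
    labels = ([IGNORE_INDEX] * len(prompt_ids) + response_ids)[:max_len]
    return {"input_ids": input_ids, "labels": labels}
```

Masking the prompt keeps the model from being trained to reproduce instructions, so the gradient signal focuses on the answers it should learn to give.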

Optimizing LLaMA2 Performance

The LLaMA2 model was fine-tuned so that its output accuracy and performance matched or exceeded those of the existing OpenAI models, giving the chatbot system high-quality, reliable responses.
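The source does not say how "matched or exceeded" was measured. One simple way to check parity is to score both systems' answers against the same held-out reference answers; the sketch below assumes such a reference set exists and uses SQuAD-style token-overlap F1 as a stand-in metric. Human or LLM-judged pairwise comparisons are common alternatives, and `compare` is a hypothetical helper name.

```python
import re
from collections import Counter
from statistics import mean
from typing import Dict, List


def _tokens(text: str) -> List[str]:
    # Deliberately simple normaliser: lowercase, keep alphanumeric runs.
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(prediction: str, reference: str) -> float:
    """SQuAD-style token-overlap F1 between a prediction and a reference."""
    pred, ref = _tokens(prediction), _tokens(reference)
    if not pred or not ref:
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def compare(candidate: List[str], baseline: List[str], references: List[str]) -> Dict[str, float]:
    """Score a candidate model and a baseline model on the same references."""
    if not references or not (len(candidate) == len(baseline) == len(references)):
        raise ValueError("lists must be non-empty and of equal length")
    cand = [token_f1(c, r) for c, r in zip(candidate, references)]
    base = [token_f1(b, r) for b, r in zip(baseline, references)]
    return {
        "candidate_f1": mean(cand),
        "baseline_f1": mean(base),
        # Share of items where the candidate scores at least as well as the baseline.
        "at_least_as_good": mean(1.0 if c >= b else 0.0 for c, b in zip(cand, base)),
    }
```

Reporting the per-item "at least as good" rate alongside the mean scores helps show whether parity holds broadly or only on average.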

This work required fine-tuning the open-source LLaMA2-7B-chat model, and the entire platform was designed to be hosted natively on AWS using a serverless architecture built on Lambda-based microservices.