Generative AI has changed how content is created in fields ranging from art and entertainment to education and marketing. It has also introduced new problems, most visibly deepfakes: highly realistic but fabricated digital media that can deceive audiences and undermine trust. As the underlying models improve, verifying that media is authentic has become a critical concern. This article looks at the technical side of how AI can help preserve media integrity, focusing on detection mechanisms, real-time verification, and the integration of blockchain technology.

Understanding the Threat: Deepfakes and Synthetic Media

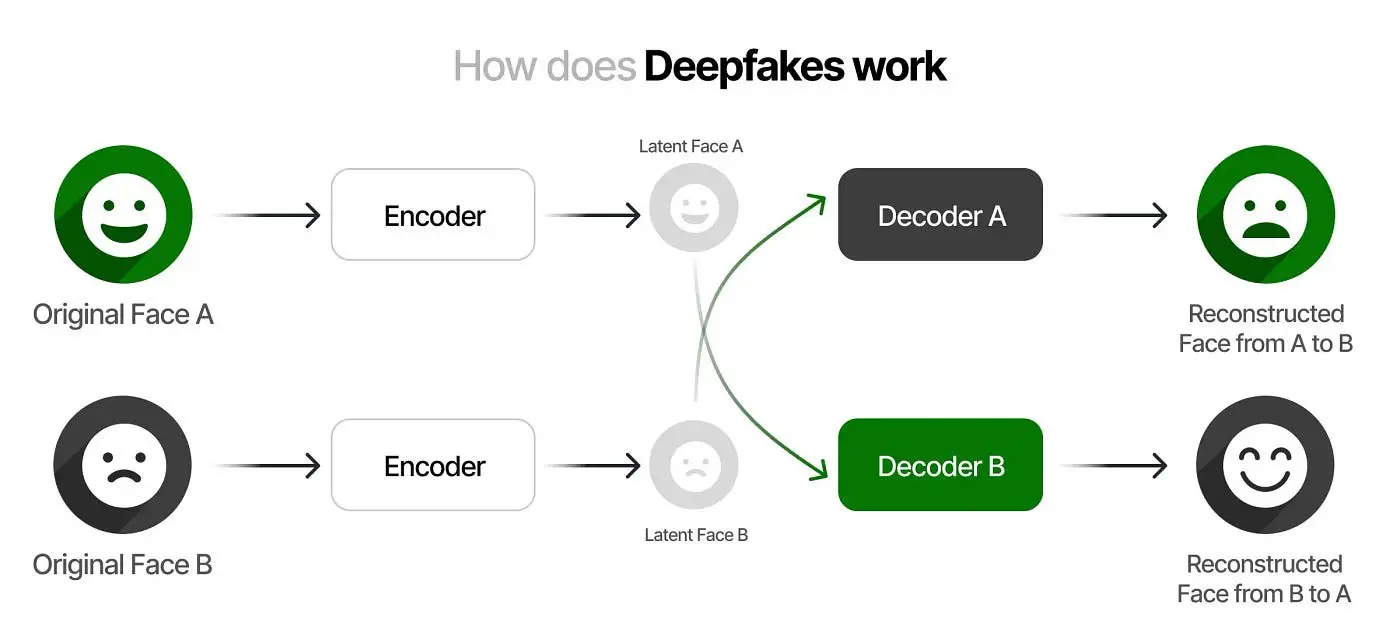

Deepfakes utilize advanced machine learning techniques, especially generative adversarial networks (GANs), to create realistic synthetic media. GANs consist of two main components:

- Generator: Produces synthetic data that mimics real media.

- Discriminator: Tries to tell synthetic samples apart from real ones; its judgments provide the training signal for the generator.

Through iterative training, the generator becomes adept at producing media that is increasingly difficult to distinguish from authentic content. While this technology has legitimate applications, such as in film and gaming, it poses significant risks when used maliciously.
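The feedback loop between the two components can be made concrete with the loss values each side tries to minimize. The following is a minimal sketch, assuming the standard binary cross-entropy formulation with the commonly used non-saturating generator loss; the function names and the example scores are illustrative, not taken from any particular deepfake tool.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Binary cross-entropy loss for the discriminator.

    real_scores: discriminator outputs in (0, 1) for real samples.
    fake_scores: discriminator outputs in (0, 1) for generated samples.
    """
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)

# Illustrative scores only. Early in training the discriminator spots
# fakes easily (scores near 0); later it is close to guessing (near 0.5).
real = [0.90, 0.85, 0.95]
early_fake = [0.05, 0.10, 0.08]
late_fake = [0.45, 0.55, 0.50]

print("generator loss, early vs late:",
      generator_loss(early_fake), generator_loss(late_fake))
print("discriminator loss, early vs late:",
      discriminator_loss(real, early_fake), discriminator_loss(real, late_fake))
```

As the generator improves, its loss falls while the discriminator's loss rises, which is the adversarial dynamic described above.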

Technical Approaches to Ensuring Media Integrity

1. Forensic Analysis

AI-powered forensic analysis tools scrutinize digital media for signs of manipulation. These tools employ various techniques to detect inconsistencies:

- Pixel-Level Analysis: Detects anomalies in pixel patterns, such as inconsistent noise or compression artifacts, that may indicate tampering.

- Lighting and Shadow Analysis: Identifies mismatches in lighting and shadows that are often present in deepfakes.

- Facial Movement Analysis: Examines inconsistencies in facial movements and expressions.

AI systems can be trained on extensive datasets containing both genuine and synthetic media, enabling them to recognize subtle artifacts introduced during the deepfake creation process.
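To give a feel for pixel-level analysis, here is a minimal hand-written sketch rather than a trained model. It assumes a grayscale image stored as a list of rows, computes a simple high-pass residual, and flags blocks whose residual variance is far above the image's typical level. The block size, the `ratio` threshold, and the synthetic test image are all assumptions chosen for illustration.

```python
import random
import statistics

def residual(image):
    """High-pass residual: each interior pixel minus the mean of its 4 neighbours."""
    h, w = len(image), len(image[0])
    res = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            mean4 = (image[y - 1][x] + image[y + 1][x]
                     + image[y][x - 1] + image[y][x + 1]) / 4.0
            res[y][x] = image[y][x] - mean4
    return res

def block_scores(image, block=4):
    """Variance of the residual inside each non-overlapping block."""
    res = residual(image)
    h, w = len(image), len(image[0])
    scores = {}
    for by in range(0, h - block + 1, block):
        for bx in range(0, w - block + 1, block):
            values = [res[y][x]
                      for y in range(by, by + block)
                      for x in range(bx, bx + block)]
            scores[(by, bx)] = statistics.pvariance(values)
    return scores

def suspicious_blocks(image, block=4, ratio=5.0):
    """Blocks whose residual variance exceeds `ratio` times the median block."""
    scores = block_scores(image, block)
    typical = max(statistics.median(scores.values()), 1e-9)
    return [pos for pos, s in scores.items() if s > ratio * typical]

# Synthetic 16x16 "photo": smooth gradient plus mild sensor-like noise.
rng = random.Random(0)
clean = [[x + y + rng.gauss(0, 1) for x in range(16)] for y in range(16)]

# Simulated tampering: a pasted patch with a very different texture.
tampered = [row[:] for row in clean]
for y in range(8, 12):
    for x in range(8, 12):
        tampered[y][x] += 40 * ((x + y) % 2)

print("clean:", suspicious_blocks(clean))
print("tampered:", suspicious_blocks(tampered))
```

Because the residual looks at neighbouring pixels, blocks bordering the pasted patch may be flagged as well. Learned detectors replace the hand-picked residual and threshold with features fitted to labelled data.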

2. Pattern Recognition and Machine Learning

Pattern recognition techniques, particularly those using convolutional neural networks (CNNs), play a crucial role in detecting deepfakes. CNNs are adept at identifying irregularities in texture, color distribution, and image quality. Advanced detection algorithms leverage the following methods:

- Spectral Analysis: Analyzes frequency components of images and videos to spot inconsistencies.

- Steganalysis: Looks for hidden data or statistical traces embedded in media files, which might indicate manipulation.
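Spectral analysis can be illustrated on a single row of pixels. The sketch below assumes that a manipulation leaves a periodic high-frequency pattern (some generative pipelines are reported to do this during upsampling) and measures how much of the signal's power sits in the upper part of the spectrum. The `cutoff` value and the test signals are illustrative assumptions; a practical detector would use a 2D transform and a learned decision rule.

```python
import cmath
import math

def dft_magnitudes(signal):
    """Magnitudes of the discrete Fourier transform for k = 0 .. n/2."""
    n = len(signal)
    return [
        abs(sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]

def high_frequency_ratio(signal, cutoff=0.5):
    """Share of non-DC power that lies above `cutoff` of the frequency range."""
    power = [m * m for m in dft_magnitudes(signal)[1:]]  # skip the DC term
    total = sum(power)
    if total == 0:
        return 0.0
    split = int(len(power) * cutoff)
    return sum(power[split:]) / total

n = 64
# A smooth row of pixels: one slow oscillation across the row.
smooth = [math.sin(2 * math.pi * 2 * t / n) for t in range(n)]
# The same row with a fine alternating pattern added on top.
with_artifact = [v + 0.5 * (-1) ** t for t, v in enumerate(smooth)]

print("smooth:", high_frequency_ratio(smooth))
print("with artifact:", high_frequency_ratio(with_artifact))
```

The smooth row keeps almost all of its power at low frequencies, while the alternating pattern moves a large share of it to the top of the spectrum.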

3. Temporal and Spatial Analysis

Videos are temporal sequences of images, and maintaining temporal coherence is challenging for deepfake creators. AI models can analyze the temporal and spatial coherence of videos to detect deepfakes:

- Temporal Coherence Checks: Evaluate the continuity of motion and appearance across frames.

- Spatial Analysis: Assesses spatial relationships and consistency within individual frames.

By focusing on these aspects, AI can identify disruptions that suggest manipulation, such as unnatural movements or inconsistent lip synchronization.
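A temporal coherence check can be sketched with nothing more than frame differences. This example assumes each frame is a flat list of grayscale values and flags transitions whose mean absolute difference is far above the clip's median; the `factor` threshold and the toy clip are assumptions for illustration, and production systems typically rely on optical flow or learned video models instead.

```python
import statistics

def frame_difference(a, b):
    """Mean absolute pixel difference between two equally sized frames."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)

def incoherent_transitions(frames, factor=4.0):
    """Indices of frames whose change from the previous frame is unusually large."""
    diffs = [frame_difference(frames[i], frames[i + 1])
             for i in range(len(frames) - 1)]
    typical = max(statistics.median(diffs), 1e-9)
    return [i + 1 for i, d in enumerate(diffs) if d > factor * typical]

# Toy clip: brightness drifts slowly from frame to frame...
frames = [[10 + t] * 16 for t in range(8)]
# ...except for one frame that was replaced with unrelated content.
frames[5] = [80] * 16

print(incoherent_transitions(frames))  # -> [5, 6]
```

Frames 5 and 6 are both reported because the clip jumps into the replaced frame and then back out of it.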

4. Audio-Visual Synchronization

Cross-modal analysis involves examining the synchronization between audio and visual elements. AI tools compare lip movements in videos with the corresponding audio tracks to identify mismatches:

- Phoneme-Viseme Matching: Matches phonemes (the basic units of speech sound) with the corresponding visemes (the mouth shapes that accompany them).

- Speech Patterns: Analyzes the natural flow of speech and detects anomalies that indicate tampering.

These techniques are particularly effective in identifying deepfake videos where audio and visual components have been independently manipulated.
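Phoneme-viseme matching can be sketched as a lookup followed by a mismatch count. The example assumes the audio has already been transcribed into phonemes and the video into visemes, aligned one to one. The mapping table and its labels are a hypothetical, heavily simplified stand-in for what a real system would learn or take from a phonetic resource.

```python
# Hypothetical, simplified phoneme-to-viseme table (illustration only).
PHONEME_TO_VISEME = {
    "p": "closed", "b": "closed", "m": "closed",
    "f": "lip-teeth", "v": "lip-teeth",
    "aa": "open", "ae": "open",
    "uw": "rounded", "ow": "rounded",
}

def mismatch_rate(phonemes, visemes):
    """Fraction of aligned positions where the mouth shape contradicts the sound.

    Phonemes missing from the table are skipped rather than counted.
    """
    if len(phonemes) != len(visemes):
        raise ValueError("sequences must be aligned one to one")
    checked = 0
    mismatches = 0
    for phoneme, viseme in zip(phonemes, visemes):
        expected = PHONEME_TO_VISEME.get(phoneme)
        if expected is None:
            continue
        checked += 1
        if viseme != expected:
            mismatches += 1
    return mismatches / checked if checked else 0.0

audio = ["m", "aa", "p", "uw"]
genuine_video = ["closed", "open", "closed", "rounded"]
dubbed_video = ["open", "open", "rounded", "rounded"]

print(mismatch_rate(audio, genuine_video))  # -> 0.0
print(mismatch_rate(audio, dubbed_video))   # -> 0.5
```

Sounds such as "m", "b", and "p" require closed lips, so a video showing an open mouth at those moments is a strong hint that audio and video were produced separately.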

5. Blockchain Technology for Media Verification

Blockchain technology offers a decentralized, tamper-evident ledger for recording the origin and history of digital media. Integrating blockchain with AI enhances media verification:

- Immutable Records: A cryptographic hash of each media file is recorded on the chain, along with metadata such as creation date, source, and modifications. Once written, the record cannot be altered without detection.

- Cross-Verification: AI systems can cross-verify the integrity of media files against their blockchain records, ensuring authenticity and detecting unauthorized alterations.
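The ledger idea can be illustrated with a hash chain in a few lines. This is a minimal single-machine sketch, not a real blockchain: it has no consensus, network, or signatures. The record layout and the names `make_record`, `verify_chain`, and `verify_media` are assumptions made for this example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def make_record(media: bytes, metadata: dict, prev_hash: str) -> dict:
    """Create a ledger entry that commits to the media, its metadata,
    and the previous entry."""
    body = {
        "media_hash": sha256_hex(media),
        "metadata": metadata,
        "prev_hash": prev_hash,
    }
    record_hash = sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))
    return {**body, "record_hash": record_hash}

def verify_chain(chain: list) -> bool:
    """Recompute every record hash and check that the links are intact."""
    prev_hash = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        expected = sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))
        if record["prev_hash"] != prev_hash or record["record_hash"] != expected:
            return False
        prev_hash = record["record_hash"]
    return True

def verify_media(media: bytes, record: dict) -> bool:
    """Check that a file still matches the hash stored in its record."""
    return sha256_hex(media) == record["media_hash"]

original = b"raw video bytes"
edited = b"raw video bytes, color graded"

chain = [make_record(original, {"source": "camera-01", "action": "created"}, GENESIS)]
chain.append(make_record(edited, {"source": "editor", "action": "color grade"},
                         chain[-1]["record_hash"]))

print(verify_chain(chain))                      # chain is internally consistent
print(verify_media(original, chain[0]))         # file matches its record
print(verify_media(b"forged bytes", chain[0]))  # altered file does not match
```

A match shows only that the file is the one that was registered. Whether the registered content was truthful in the first place is a separate question that the detection techniques above have to answer.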

Ethical Considerations and Challenges

While leveraging generative AI for media integrity offers significant benefits, it also raises ethical considerations:

- Privacy: Ensuring that AI systems respect user privacy while analyzing personal data is crucial.

- False Positives: AI systems can produce false positives, mistakenly identifying genuine media as deepfakes. This necessitates robust validation mechanisms.

- Accessibility: Advanced AI detection tools must be accessible to a wide range of users to avoid creating an uneven playing field.
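The false-positive concern becomes clearer with some arithmetic. The sketch below uses made-up numbers (a detector that catches 95% of deepfakes and wrongly flags 5% of genuine items, applied to a collection where 1% of items are fake) to show how many flagged items would actually be genuine. All rates here are assumptions for illustration, not measurements of any real detector.

```python
def detection_outcomes(total, fake_fraction, true_positive_rate, false_positive_rate):
    """Expected counts when a detector is applied to a mixed collection."""
    fakes = total * fake_fraction
    genuine = total - fakes
    true_positives = fakes * true_positive_rate
    false_positives = genuine * false_positive_rate
    flagged = true_positives + false_positives
    precision = true_positives / flagged if flagged else 0.0
    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        "precision": precision,
    }

# Illustrative numbers only.
outcome = detection_outcomes(
    total=10_000,
    fake_fraction=0.01,
    true_positive_rate=0.95,
    false_positive_rate=0.05,
)
print(outcome)
```

With these assumed rates, roughly 95 real deepfakes are flagged alongside about 495 genuine items, so only around one flag in six is correct. This is why flagged media usually needs a second validation step before any action is taken.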

Conclusion

Generative AI is a double-edged sword, creating both challenges and solutions in the realm of digital media. While deepfakes threaten to undermine media integrity and public trust, AI also offers powerful tools to detect and counter such synthetic media. By combining forensic analysis, pattern recognition, temporal and spatial analysis, audio-visual synchronization checks, and blockchain-based provenance records, AI can play a pivotal role in ensuring media integrity.

As we continue to innovate and adapt, the goal remains clear: to preserve the integrity of digital media and maintain public trust in an era of increasingly sophisticated synthetic content. Ensuring media integrity will require a multifaceted approach, combining technical advancements, ethical considerations, public awareness, and legislative measures. Together, these efforts will help us navigate the complexities of generative AI and safeguard the authenticity of our digital world.