Introduction

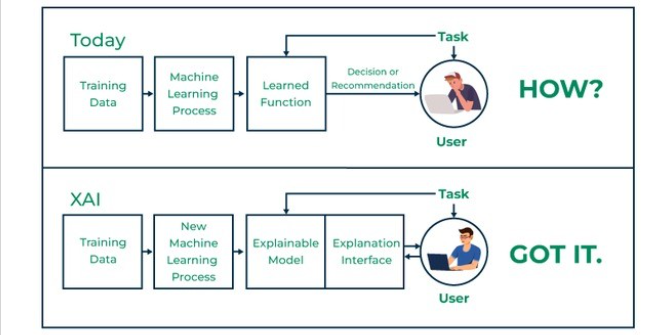

Artificial Intelligence (AI) and machine learning models, particularly large language models (LLMs) like GPT-4, have achieved remarkable capabilities in natural language understanding, generation, and translation. Despite their success, these models are often criticized for their “black-box” nature, where the decision-making processes are opaque and difficult to interpret. This lack of transparency poses significant challenges, especially in applications requiring accountability, such as healthcare, finance, and law. Explainable AI (XAI) aims to bridge this gap by developing methods to interpret and explain the decisions and outputs of AI models. This essay explores the necessity, methodologies, challenges, and future directions of XAI in the context of LLMs.

The Need for Explainability

-Trust and Accountability

In high-stakes domains, users need to trust AI systems. For example, a medical diagnosis generated by an AI model must be explainable to healthcare professionals to ensure its reliability and foster trust. Similarly, in the legal domain, AI-driven decisions impacting individuals’ lives require transparent reasoning to ensure accountability and fairness.

-Debugging and Improvement

Understanding how LLMs make decisions allows researchers and developers to identify and correct errors, biases, and limitations in the models. This understanding is crucial for iterative improvements and refining the models to perform better in diverse scenarios.

-Regulatory Compliance

With increasing scrutiny and regulatory frameworks governing AI systems, explainability is becoming a legal expectation. The General Data Protection Regulation (GDPR) in the European Union, for example, restricts solely automated decision-making (Article 22) and is often interpreted as granting a “right to explanation,” under which individuals affected by automated decisions can request meaningful information about how those decisions were reached. The exact scope of that right is still debated.

Methods for Explainable AI in LLMs

-Model-Agnostic Methods

Model-agnostic methods do not require access to the internal workings of the model. Instead, they treat the model as a black box and analyze its inputs and outputs.

-LIME (Local Interpretable Model-agnostic Explanations):

LIME explains individual predictions by approximating the complex model locally with a simpler, interpretable model. It perturbs the input data and observes the changes in the output to identify important features influencing the decision.
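The perturb-and-fit idea can be sketched in a few lines of plain Python. This is a simplified illustration rather than the LIME library itself: `black_box` is a hypothetical stand-in for an opaque model, perturbations are random token deletions, the proximity kernel is an assumed exponential on the fraction of tokens removed, and the local surrogate is a weighted ridge regression solved directly.

```python
import math
import random

def black_box(tokens):
    """Hypothetical opaque sentiment scorer standing in for a real LLM."""
    word_scores = {"great": 2.0, "terrible": -2.5}
    logit = sum(word_scores.get(t, 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-logit))  # probability of "positive"

def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def lime_explain(tokens, predict, n_samples=500, kernel_width=0.75,
                 ridge=1e-3, seed=0):
    """Return (token, weight) pairs from a local weighted linear surrogate."""
    rng = random.Random(seed)
    d = len(tokens)
    rows, targets, sample_weights = [], [], []
    for _ in range(n_samples):
        mask = [rng.randint(0, 1) for _ in range(d)]  # 1 = keep the token
        kept = [t for t, keep in zip(tokens, mask) if keep]
        distance = 1.0 - sum(mask) / d  # fraction of tokens removed
        rows.append(mask + [1])  # trailing 1 is the intercept column
        targets.append(predict(kept))
        sample_weights.append(math.exp(-(distance ** 2) / kernel_width ** 2))
    # Weighted ridge normal equations: (X^T W X + ridge * I) beta = X^T W y
    k = d + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, sample_weights))
             + (ridge if i == j else 0.0) for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, sample_weights, targets))
            for i in range(k)]
    beta = solve(xtwx, xtwy)
    return list(zip(tokens, beta[:d]))

explanation = lime_explain(["the", "food", "was", "great"], black_box)
for token, weight in explanation:
    print(f"{token:>6}: {weight:+.3f}")
```

In this toy setup only "great" changes the black-box output, so it should receive by far the largest weight while the other tokens stay near zero. A real application would swap in actual model calls and a more careful perturbation and distance scheme.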

-SHAP (SHapley Additive exPlanations):

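SHAP leverages Shapley values from cooperative game theory to assign each feature an importance value for a particular prediction. The attributions are additive, meaning they sum to the difference between the model’s output and a baseline output, and they satisfy a consistency property, which gives a unified measure of feature importance.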
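To make the game-theoretic idea concrete, the sketch below computes exact Shapley values by enumerating every feature subset. It is an illustration under assumptions, not the SHAP library: `toy_model` and its feature names are hypothetical, and "removing" a feature simply means leaving it out of the set. Exact enumeration is only feasible for a handful of features, which is why practical tools rely on approximations.

```python
from itertools import combinations
from math import factorial

def toy_model(present):
    """Hypothetical model output given the set of features that are present."""
    score = 0.0
    if "income" in present:
        score += 10.0
    if "age" in present:
        score += 4.0
    if "income" in present and "age" in present:
        score += 2.0  # interaction term shared between the two features
    return score

def shapley_values(features, value):
    """Exact Shapley values: weighted average marginal contribution per feature."""
    n = len(features)
    phi = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                total += weight * (value(coalition | {f}) - value(coalition))
        phi[f] = total
    return phi

features = ["income", "age", "zip_code"]
phi = shapley_values(features, toy_model)
for name in features:
    print(f"{name:>8}: {phi[name]:.2f}")
```

For this toy model the values come out to roughly 11 for income, 5 for age, and 0 for zip_code. The interaction bonus is split evenly between the two features that create it, and the three values sum to the full model output of 16.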

-Model-Specific Methods

Model-specific methods delve into the model’s internal mechanisms to provide explanations.

-Attention Mechanisms:

Transformer-based LLMs use attention mechanisms to weigh the importance of different input tokens. Visualizing attention weights can provide insights into which parts of the input the model focuses on when making predictions, although a high attention weight does not by itself prove that a token caused the output.
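As a rough illustration of what gets visualized, the following sketch computes scaled dot-product attention weights for a single query and prints them as a text bar chart. The token list and the query and key vectors are made-up values chosen for the example. A real model would expose one such weight matrix per head and per layer.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    peak = max(xs)
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hypothetical 2-dimensional key vectors for three input tokens.
tokens = ["the", "bank", "river"]
keys = [[0.1, 0.0], [1.0, 0.5], [0.9, 1.2]]
query = [1.0, 1.0]  # assumed query vector for the token being processed

weights = attention_weights(query, keys)
for token, weight in zip(tokens, weights):
    print(f"{token:>6} {'#' * round(weight * 40)} {weight:.2f}")
```

The weights always sum to one, so they are easy to render as heatmaps or bars, but they describe where information is routed rather than how much each token ultimately mattered.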

-Layer-Wise Relevance Propagation (LRP):

LRP decomposes the prediction of a neural network by backpropagating the prediction score through the network layers, attributing the prediction to input features. This method can be adapted to LLMs to understand how different layers contribute to the final output.
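The backward redistribution can be sketched on a tiny network. The example below applies the epsilon rule, one common LRP variant, to a hypothetical two-layer ReLU network with no bias terms. The weights and input are invented for illustration, and adapting the rule to transformer components such as attention and layer normalization takes additional care not shown here.

```python
def matvec(weights, x):
    """weights[j][i] connects input i to output j."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def relu(values):
    return [max(0.0, v) for v in values]

def lrp_epsilon(activations, weights, relevance_out, eps=1e-9):
    """Redistribute relevance from a layer's outputs back to its inputs."""
    z = matvec(weights, activations)  # pre-activations of the upper layer
    relevance_in = [0.0] * len(activations)
    for j, row in enumerate(weights):
        denom = z[j] + (eps if z[j] >= 0 else -eps)  # stabilized denominator
        for i, w in enumerate(row):
            relevance_in[i] += activations[i] * w / denom * relevance_out[j]
    return relevance_in

# Hypothetical network: 3 inputs -> 2 hidden ReLU units -> 1 output.
W1 = [[1.0, -1.0, 0.5], [0.5, 2.0, -1.0]]
W2 = [[2.0, 0.5]]
x = [1.0, 2.0, 3.0]

hidden = relu(matvec(W1, x))
output = matvec(W2, hidden)

# Start with all relevance on the output score, then walk back layer by layer.
relevance_hidden = lrp_epsilon(hidden, W2, output)
relevance_input = lrp_epsilon(x, W1, relevance_hidden)

print("output:", output[0])
print("input relevance:", [round(r, 3) for r in relevance_input])
print("sum of relevance:", round(sum(relevance_input), 3))
```

Because this network has no biases and epsilon is tiny, the input relevances sum to the output score. That conservation property is what lets the result be read as a decomposition of the prediction.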

-Post-Hoc Interpretability

Post-hoc interpretability involves creating interpretable representations of the model’s behavior after training.

-Feature Visualization:

Techniques like activation maximization generate inputs that highly activate specific neurons or layers, helping to understand what features the model has learned.
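A minimal sketch of activation maximization follows, assuming a single hypothetical linear unit and a unit-norm constraint on the input so that the optimization stays bounded. Real feature visualization runs the same loop with automatic differentiation on deep networks. For language models, where inputs are discrete tokens, it is usually done in embedding space or by searching over tokens.

```python
import math

def response(x, w):
    """Pre-activation of a single hypothetical neuron with weights w."""
    return sum(wi * xi for wi, xi in zip(w, x))

def maximize_activation(w, start, steps=200, lr=0.1):
    """Projected gradient ascent on the input, constrained to the unit ball."""
    x = list(start)
    for _ in range(steps):
        # For a linear unit, the gradient of the response w.r.t. x is w.
        x = [xi + lr * wi for xi, wi in zip(x, w)]
        norm = math.sqrt(sum(xi * xi for xi in x))
        if norm > 1.0:  # project back so the input cannot grow without bound
            x = [xi / norm for xi in x]
    return x

w = [3.0, -4.0, 0.0]  # assumed neuron weights
start = [0.5, 0.5, 0.5]
best_input = maximize_activation(w, start)

print("start response:", response(start, w))
print("optimized input:", [round(v, 3) for v in best_input])
print("optimized response:", round(response(best_input, w), 3))
```

The optimized input lines up with the weight vector, which is the direction this unit responds to most strongly. For a deep network the resulting input is harder to predict in advance, which is why the technique is informative.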

-Counterfactual Explanations:

These explanations involve modifying input data to show how changes affect the output, helping users understand the model’s decision boundaries.
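For text inputs, one simple way to produce such an explanation is to search for the smallest set of tokens whose removal flips the prediction. The sketch below does this by brute force against a hypothetical keyword-based classifier, `toy_sentiment`. The search is exponential in the worst case, so it is only meant to illustrate the idea on short inputs.

```python
from itertools import combinations

def toy_sentiment(tokens):
    """Hypothetical classifier standing in for an opaque model."""
    word_scores = {"good": 1.0, "great": 2.0, "bad": -1.5, "boring": -1.0}
    score = sum(word_scores.get(t, 0.0) for t in tokens)
    return "positive" if score > 0 else "negative"

def minimal_deletion_counterfactual(tokens, predict):
    """Find the fewest token deletions that change the predicted label.

    Returns (edited_tokens, removed_tokens), or None if no deletion flips it.
    """
    original = predict(tokens)
    for size in range(1, len(tokens) + 1):
        for indices in combinations(range(len(tokens)), size):
            edited = [t for i, t in enumerate(tokens) if i not in indices]
            if predict(edited) != original:
                return edited, [tokens[i] for i in indices]
    return None

review = ["great", "acting", "but", "boring", "plot"]
print("original label:", toy_sentiment(review))
edited, removed = minimal_deletion_counterfactual(review, toy_sentiment)
print("removed:", removed)
print("new label:", toy_sentiment(edited))
```

Here removing the single word "great" is enough to turn the label from positive to negative, which tells the user that the decision hinges on that word.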

-Intrinsic Interpretability

Some approaches aim to design models that are inherently interpretable.

-Interpretable Models:

Models like decision trees, rule-based systems, and linear models are inherently interpretable and can sometimes be used as approximations or components of more complex models to enhance interpretability.

-Hybrid Models:

Combining interpretable models with LLMs, where the LLM provides predictions, and an interpretable model explains those predictions, can balance performance and transparency.
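One way to picture this pairing is a surrogate model: an interpretable model is fitted to the predictions of the opaque one, and its agreement with those predictions (its fidelity) is reported alongside the explanation. The sketch below fits a one-rule decision stump to a hypothetical `black_box` over a grid of two numeric features. Both the black box and the grid are assumptions made for the example.

```python
def black_box(x):
    """Hypothetical opaque classifier over two numeric features."""
    return 1 if 0.8 * x[0] + 0.2 * x[1] > 0.5 else 0

def fit_stump(samples, labels):
    """Find the rule 'feature > threshold' that best matches the labels.

    Returns (feature_index, threshold, fidelity).
    """
    best = None
    for feature in range(len(samples[0])):
        for threshold in sorted({s[feature] for s in samples}):
            predictions = [1 if s[feature] > threshold else 0 for s in samples]
            fidelity = sum(p == y for p, y in zip(predictions, labels)) / len(labels)
            if best is None or fidelity > best[2]:
                best = (feature, threshold, fidelity)
    return best

# Probe the black box on an 11 x 11 grid of inputs in [0, 1] x [0, 1].
grid = [(i / 10, j / 10) for i in range(11) for j in range(11)]
labels = [black_box(point) for point in grid]

feature, threshold, fidelity = fit_stump(grid, labels)
print(f"surrogate rule: predict 1 if x[{feature}] > {threshold}")
print(f"fidelity to black box: {fidelity:.1%}")
```

The stump recovers that the first feature dominates the decision, and the fidelity score makes explicit how much of the black box's behavior the simple rule fails to capture. That gap is the performance-versus-transparency trade-off in miniature.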

Challenges in XAI for LLMs

-Complexity and Scale

LLMs have billions of parameters, making it challenging to pinpoint the exact factors influencing a particular decision. The sheer size and complexity of these models make traditional interpretability methods less effective.

-Context and Ambiguity

Natural language is inherently ambiguous and context-dependent. An LLM’s interpretation might vary significantly based on subtle nuances in the input, making it difficult to provide consistent and meaningful explanations.

-Evaluation of Explanations

Assessing the quality and usefulness of explanations is non-trivial. Explanations need to be accurate, understandable, and actionable, which requires interdisciplinary collaboration to define appropriate evaluation metrics.

-Balancing Interpretability and Performance

There is often a trade-off between a model’s interpretability and its performance. Highly interpretable models might not achieve the same accuracy or generalization capabilities as more complex, less interpretable ones.

Future Directions

-Unified Frameworks

Developing unified frameworks that integrate various interpretability methods can provide a comprehensive understanding of LLMs. These frameworks should be scalable and adaptable to different models and use cases.

-Human-Centered Design

Incorporating user feedback and involving domain experts in the design of XAI methods can ensure that the explanations are relevant and useful. Human-centered design approaches can help tailor explanations to specific needs and contexts.

-Enhanced Visualization Tools

Advanced visualization tools that can handle high-dimensional data and provide interactive exploration capabilities can make it easier to interpret LLMs. These tools should allow users to drill down into specific model components and understand their contributions.

-Interdisciplinary Research

Collaboration between AI researchers, domain experts, and social scientists can foster the development of more robust and context-aware interpretability methods. Interdisciplinary research can address the multifaceted challenges of XAI and ensure that the solutions are practical and ethical.

-Benchmarking and Standardization

Creating benchmarks and standardized protocols for evaluating interpretability methods can drive progress in the field. Standardization can facilitate fair comparisons and promote the adoption of best practices.

Conclusion

Explainable AI (XAI) in large language models is a crucial area of research that addresses the transparency and accountability challenges of modern AI systems. Developing effective interpretability methods requires a combination of model-agnostic, model-specific, post-hoc, and intrinsic approaches. Despite the challenges posed by the complexity and scale of LLMs, ongoing research and interdisciplinary collaboration promise to make these models more transparent and trustworthy. As AI systems continue to integrate into critical aspects of society, the need for explainable AI will only grow, underscoring the importance of this evolving field.