Companies in the business like Microsoft, Google, and OpenAI have been locked in an intense battle over artificial intelligence (AI) during the past year, which has driven rapid progress in this revolutionary field. These major players have been competing head to head, each releasing more advanced and powerful AI models than the last.

Despite not being the first to enter the AI space, Google hopes to become the industry leader with Gemini, which is reported to be the most powerful AI model ever developed. The specifics of the project remain under strict confidentiality, so there is little official information about Google Gemini and much of what circulates is conjecture. I can hear the noise of curiosity, so let’s explore the questions that arise in everyone’s mind when reading this blog.

- What is Google Gemini?

- How does Google Gemini achieve the fusion of multiple AI entities into a singular model?

- What are the features of Google Gemini?

- How does Gemini AI work?

- How does a multi-modal AI like Google Gemini work?

- When is it going to be released?

What is Google Gemini?

Google Gemini, also known as Gemini AI, is a suite of large language models (LLMs) being developed by Google DeepMind. The name is sometimes expanded online as “Generalized Multimodal Intelligence Network”, although Google has not presented it as an acronym.

Sundar Pichai, Google’s CEO, has stated that Gemini was built from the ground up to be multimodal, so a single model can process and generate text, images, code, and audio content through a unified user interface (UI).

How does Google Gemini fuse multiple AI entities into a singular model?

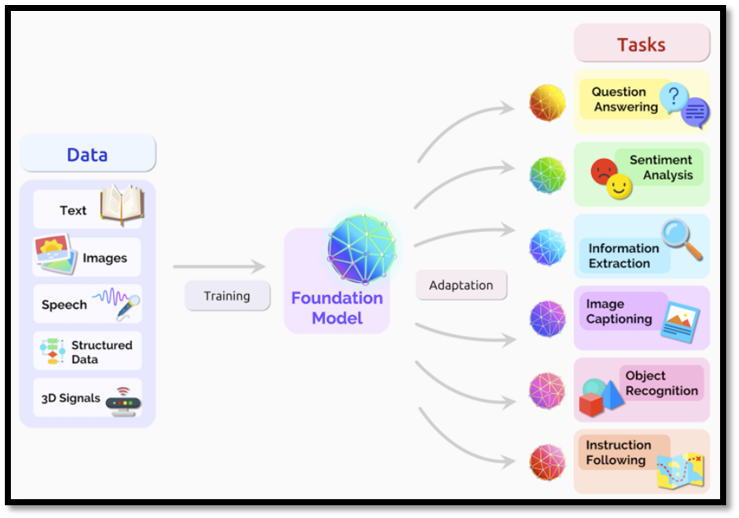

One elegant and efficient way to build multimodal AI is to fuse several AI models into one. To do this, machine learning and AI models for graph processing, computer vision, audio processing, language, coding and programming, and 3D modeling must be combined and coordinated so that they work in synergy.
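To make the idea of fusion concrete, here is a toy Python sketch. Google has not published how Gemini combines its components, so this is not Gemini’s design: it assumes two hypothetical encoders (`encode_text`, `encode_image`) that each turn their input into a short feature vector, and it fuses them by concatenating those vectors into one joint representation that a single downstream model could consume.

```python
from typing import Callable, Dict, List

Vector = List[float]

def encode_text(text: str) -> Vector:
    # Hypothetical text encoder: crude features (length, vowel ratio).
    vowels = sum(ch in "aeiou" for ch in text.lower())
    return [float(len(text)), vowels / max(len(text), 1)]

def encode_image(pixels: List[List[float]]) -> Vector:
    # Hypothetical image encoder: mean brightness and pixel count.
    flat = [p for row in pixels for p in row]
    return [sum(flat) / len(flat), float(len(flat))]

ENCODERS: Dict[str, Callable[..., Vector]] = {
    "text": encode_text,
    "image": encode_image,
}

def fuse(inputs: Dict[str, object]) -> Vector:
    """Encode each modality separately, then concatenate in a fixed (sorted) order."""
    fused: Vector = []
    for modality in sorted(inputs):
        fused.extend(ENCODERS[modality](inputs[modality]))
    return fused

joint = fuse({"text": "a red car", "image": [[0.2, 0.4], [0.6, 0.8]]})
print(joint)
```

Concatenation is the simplest fusion strategy; real multimodal systems typically learn projection layers or attention so that modalities can interact, which this sketch leaves out.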

This is a huge, difficult undertaking, and Google hopes to develop this idea to a previously unheard-of degree.

Taking inspiration from AlphaGo

Google Gemini builds on the groundbreaking work of Google DeepMind. In October 2015, DeepMind’s AlphaGo became the first computer program to defeat a professional human Go player, European champion Fan Hui.

The landmark moment came in March 2016, when AlphaGo beat Lee Sedol, one of the world’s top Go players, showcasing the ability of artificial intelligence to master complex strategy games. DeepMind CEO Demis Hassabis has said that Gemini will combine some of the strengths of AlphaGo-type systems with the language capabilities of large models.

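What are the Features of Google Gemini?

Reports suggest Gemini will come in several sizes; the tier names below are the same ones Google used for its PaLM 2 models.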

Gecko (lightweight for mobile devices):

Gecko is designed to be lightweight, making it suitable for deployment on mobile devices. This model is optimized for tasks that require a balance between performance and resource efficiency on mobile platforms.

Otter (more powerful for various unimodal tasks)

Otter is positioned as more powerful than Gecko. It is versatile and intended for a variety of unimodal tasks, and its extra capacity lets it handle a broader range of tasks within a single modality.

Bison (larger and more versatile, competing with GPT-4)

Bison is characterized as larger and more versatile. It is designed to handle a variety of tasks, particularly in the multimodal domain, and is expected to compete with other state-of-the-art models such as GPT-4 for market share.

Unicorn (the largest and most powerful, suitable for a wide range of multimodal tasks)

Unicorn is described as the largest and most powerful model in the Gemini suite. Unicorn is intended for a wide range of multimodal tasks, demonstrating superior capabilities compared to the other models in handling diverse types of data, such as text, images, code, and audio content.

How Gemini AI Works

Gemini is expected to use Google’s Pathways architecture, which takes a modular approach to machine learning (ML). In this framework, a collection of modular ML models is first trained to carry out specific tasks; once trained, these modules are linked together to form a coherent network.

The integrated modules can work together or separately to generate a variety of outputs. In the backend, encoders convert different data formats into a common representation, while decoders produce outputs in various modalities according to the task at hand and the encoded inputs.
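The encode-then-decode routing described above can be sketched in a few lines of Python. This is an illustrative assumption, not Pathways or Gemini code: the encoders, decoders, and the `run` helper are hypothetical, and the shared “common representation” is simply a list of floats.

```python
from typing import Callable, Dict, List

Latent = List[float]  # hypothetical shared representation ("common language")

# Hypothetical encoders: each maps its own data format into the shared latent space.
def text_encoder(text: str) -> Latent:
    return [float(ord(c)) for c in text]

def audio_encoder(samples: List[float]) -> Latent:
    return [s * 100.0 for s in samples]

# Hypothetical decoders: each turns the shared latent into one output modality.
def text_decoder(z: Latent) -> str:
    return f"summary of {len(z)} latent values"

def number_decoder(z: Latent) -> float:
    return sum(z) / len(z)

ENCODERS: Dict[str, Callable] = {"text": text_encoder, "audio": audio_encoder}
DECODERS: Dict[str, Callable] = {"text": text_decoder, "number": number_decoder}

def run(task_input, input_modality: str, output_modality: str):
    """Route the input through the matching encoder, then the requested decoder."""
    z = ENCODERS[input_modality](task_input)
    return DECODERS[output_modality](z)

print(run("hi", "text", "text"))
print(run([0.1, 0.3], "audio", "number"))
```

Because every encoder writes to the same representation, any encoder can be paired with any decoder, which is the property that lets modules “work together or separately”.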

Google is expected to incorporate Duet AI as the Gemini frontend. Duet AI will act as a user-friendly interface, hiding the complexity of the Gemini architecture so that people of different skill levels can use Gemini models for generative AI applications.

AI TRAINING:

Gemini’s large language models (LLMs) are reportedly trained with a variety of methods, including:

Supervised Learning: Gemini AI modules learn patterns from labeled training data so that they can predict outputs for new data. The model is given examples with known correct outputs, which help it learn from the labeled data and generalize beyond it.
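As a generic illustration of supervised learning (not Gemini’s training code), the Python sketch below fits a straight line to labeled examples using gradient descent on mean squared error, then uses the learned parameters to predict an output for an input it has not seen. The data and the `train_linear` helper are hypothetical.

```python
from typing import List, Tuple

def train_linear(data: List[Tuple[float, float]], lr: float = 0.05,
                 epochs: int = 2000) -> Tuple[float, float]:
    """Fit y ≈ w*x + b to labeled (x, y) pairs with batch gradient descent on MSE."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Labeled examples from y = 2x + 1: the "known correct outputs".
examples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear(examples)
prediction = w * 4.0 + b  # generalize to an unseen input
print(round(w, 3), round(b, 3), round(prediction, 3))
```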

Unsupervised Learning: Unsupervised learning is also used to train the modules to find patterns, structures, or relationships in data without labeled examples. This approach works well for uncovering hidden patterns and the underlying structure of the data.
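A classic unsupervised technique is k-means clustering, which groups points without any labels. The sketch below is a generic one-dimensional version of Lloyd’s algorithm, offered only to illustrate the idea; nothing indicates that Gemini uses k-means specifically, and `kmeans_1d` and its data are hypothetical.

```python
from typing import List

def kmeans_1d(points: List[float], centers: List[float], iterations: int = 10) -> List[float]:
    """Group unlabeled 1-D points into len(centers) clusters with Lloyd's k-means."""
    for _ in range(iterations):
        clusters: List[List[float]] = [[] for _ in centers]
        # Assignment step: each point joins its nearest center.
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to its cluster mean (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers

data = [1.0, 1.2, 0.8, 9.0, 9.5, 8.5]  # no labels provided
print(kmeans_1d(data, centers=[0.0, 5.0]))
```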

Reinforcement Learning: Through reinforcement learning, Gemini AI modules iteratively improve their decision-making strategies by trial and error. The modules adjust their behavior according to the results of their actions, learning to maximize rewards and minimize penalties. Reports specifically mention that Google might train Gemini modules with reinforcement learning from human feedback (RLHF), possibly on Cloud TPU v5e chips.
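The trial-and-error loop described above can be illustrated with a multi-armed bandit, a simplified reinforcement learning setting without state. This epsilon-greedy sketch is a textbook example, not RLHF or anything Google has described: the reward probabilities and the `epsilon_greedy` helper are hypothetical, and rewards come from a simulated environment rather than human feedback.

```python
import random
from typing import List

def epsilon_greedy(reward_probs: List[float], steps: int = 2000,
                   epsilon: float = 0.1, seed: int = 0) -> List[float]:
    """Learn action-value estimates for a simulated multi-armed bandit by trial and error."""
    rng = random.Random(seed)
    n_arms = len(reward_probs)
    estimates = [0.0] * n_arms  # current guess of each action's average reward
    counts = [0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)  # explore: try a random action
        else:
            arm = max(range(n_arms), key=lambda i: estimates[i])  # exploit the best-known action
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental sample-average update moves the estimate toward the observed reward.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates

values = epsilon_greedy([0.2, 0.5, 0.9])
best = max(range(len(values)), key=lambda i: values[i])
print(best, [round(v, 2) for v in values])
```

The `epsilon` parameter trades exploration against exploitation: without occasional random actions, the agent could lock onto the first arm that paid off and never discover the better one.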

According to some reports, the TPUs (Tensor Processing Units) used to train Gemini offer five times the computing power of the chips used to train ChatGPT, which would signal notable progress in hardware capabilities.

Google hasn’t made public any information about the datasets used to train Gemini AI. Some commentators have speculated that Google engineers used LangChain, although LangChain is an open-source framework for building applications on top of LLMs rather than a training dataset. There is also conjecture that the training data may have been repurposed from more recent projects, possibly including data from PaLM 2 training.

Beyond a simple language model

Gemini, Google’s next AI architecture, is intended to replace PaLM 2 and usher in a new era of AI services. Currently, Google AI services such as Duet AI in Google Docs and the Bard chatbot are powered by PaLM 2. Gemini aims to outperform its predecessor by allowing simultaneous analysis or generation of text, image, audio, video, and other data types. Because it is a natively multimodal model that can handle various data types, Google Gemini would represent a substantial improvement in AI capabilities.

Gemini is a versatile AI model that brings a range of capabilities to the table:

- Video Summarization: Simply put, Google Gemini can watch a video, pick out the important bits, and give you a short and sweet summary. Imagine watching a sports match; Gemini could identify the best moments, summarize the highlights, and even provide a written summary of the game.

- Question-Answering on Videos: With Gemini’s ability to understand both what it sees and hears, you can ask it questions about a video. If you’re in a lecture and wonder about the main point, you could ask, and Gemini would analyze the audio and visuals to give you an accurate answer.

- Image Captioning: Show Gemini a picture, and it can tell you all about it. Give it a scenic landscape, and it’ll generate a caption telling you about the location, weather, and interesting features in the image.

- Text-to-Image Generation: Describe something to Google Gemini, and it can create an image based on your words. Tell it about a red sports car racing down a curvy mountain road, and it’ll paint a visual picture for you.

How does a multi-modal AI like Google Gemini work?

War against ChatGPT:

When comparing Gemini to ChatGPT, experts often focus on the number of parameters in an AI system. Parameters are the variables adjusted during training that the AI uses to transform input data into output. In general, a higher number of parameters indicates greater capacity and sophistication. GPT-4, the model behind the most advanced version of ChatGPT, reportedly has about 1.75 trillion parameters, a figure OpenAI has not confirmed, and Gemini is rumored to surpass this number significantly. Unverified estimates suggest that Gemini may have 30 trillion or even 65 trillion parameters.
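To show what “parameters” means in practice, the sketch below counts the trainable weights and biases in a small fully connected network. The layer sizes are hypothetical; models like GPT-4 or Gemini use transformer layers at a vastly larger scale, but each of their parameters is likewise a number adjusted during training.

```python
from typing import List

def count_dense_parameters(layer_sizes: List[int]) -> int:
    """Count trainable weights and biases in a stack of fully connected layers."""
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += fan_in * fan_out  # weight matrix
        total += fan_out           # bias vector
    return total

print(count_dense_parameters([784, 128, 10]))  # 784*128 + 128 + 128*10 + 10 = 101770
```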

However, an AI system’s potency depends on more than the sheer number of parameters. According to an analysis by SemiAnalysis, Google Gemini is expected to outperform GPT-4 significantly. Measured in training compute rather than benchmark results, the analysis suggests that by the end of 2023 Gemini could have up to five times the compute of GPT-4, potentially rising to a staggering 20 times.

When is it going to be released?

Gemini AI is currently in beta testing. While its official release date and final capabilities remain undisclosed, Google has given early access to a limited number of developers at select companies. This suggests that Google Gemini may be nearing release and could be integrated into Google Cloud Vertex AI services by the end of 2023. If the launch succeeds, Google aims to incorporate Gemini AI into various services, including enterprise offerings and consumer applications like Google Search, Google Translate, and Google Assistant. Gemini AI is expected to be scalable, with flexible tools and application programming interface (API) integration. This adaptability would make Gemini AI a viable option for a diverse range of real-time applications on both desktop and mobile platforms.

ChatGPT and Google Gemini are widely seen as the two most sophisticated LLMs in natural language processing (NLP), with impressive features and a wide range of potential applications. However, both have significant drawbacks and obstacles that need to be carefully considered and overcome. How far Google’s Gemini AI can outperform ChatGPT will depend on how well it exploits the benefits of multimodality, navigates its obstacles, and balances the trade-offs. In the end, the competition between ChatGPT and Google Gemini may not be a zero-sum game but rather a positive dynamic that fosters creativity and progress in the AI industry.