Generative Adversarial Networks (GANs) have become a widely used tool for generating synthetic data. Synthetic data helps meet the need for large, diverse datasets and can reduce the privacy risks that come with using real-world data. In this blog, we look at how GANs work, how they are applied to synthetic data generation, and how they help improve data diversity and privacy.

Understanding GANs

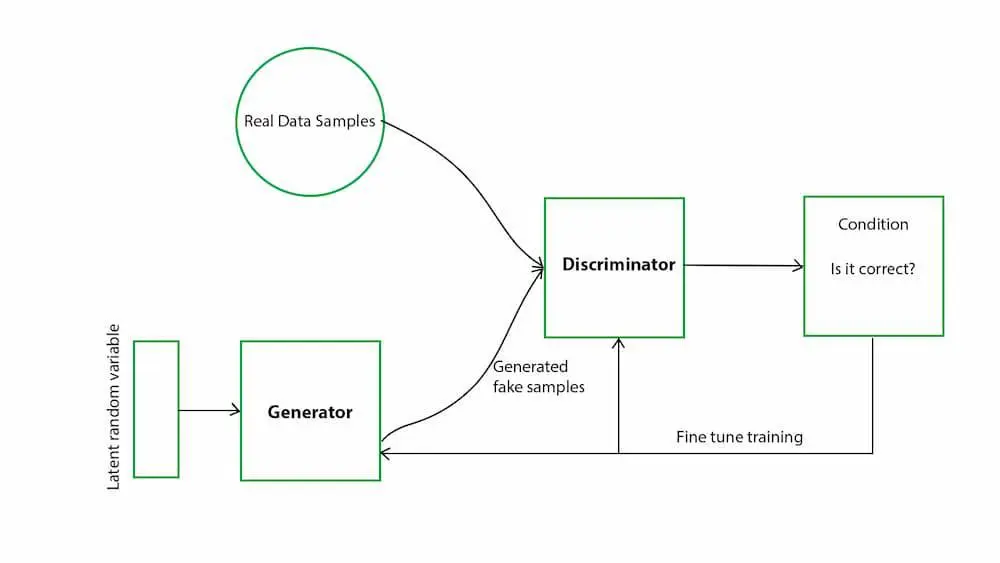

Generative Adversarial Networks, introduced by Ian Goodfellow and his colleagues in 2014, consist of two neural networks, the generator and the discriminator, that are trained simultaneously through a process known as adversarial training. The generator creates synthetic data, while the discriminator evaluates the authenticity of the data, distinguishing between real and generated samples.

GAN Architecture

- Generator: Takes random noise as input and generates synthetic data.

- Discriminator: Evaluates the data and determines whether it is real or fake.

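The training process is a minimax game: the generator tries to produce data realistic enough to fool the discriminator, while the discriminator tries to identify fake data accurately. In the ideal case, training converges when the discriminator can no longer tell generated samples from real ones and assigns a probability of about 0.5 to every input. In practice, training is usually stopped once the generated samples are good enough for the task.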
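The minimax game can be written as a value function, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which the discriminator tries to maximize and the generator tries to minimize. The following is a minimal NumPy sketch that estimates this value from a batch of discriminator outputs. The function name `gan_value` and the example probabilities are illustrative assumptions, not part of any library.

```python
import numpy as np

def gan_value(d_real, d_fake, eps=1e-12):
    """Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator probabilities for real samples
    d_fake: discriminator probabilities for generated samples
    """
    d_real = np.clip(d_real, eps, 1.0 - eps)  # avoid log(0)
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))

# Hypothetical outputs of a discriminator that separates real from fake well
confident = gan_value(np.array([0.9, 0.95]), np.array([0.1, 0.05]))

# Hypothetical outputs of a discriminator that has been fully fooled
fooled = gan_value(np.full(4, 0.5), np.full(4, 0.5))

print(f"confident discriminator: {confident:.4f}")
print(f"fooled discriminator:    {fooled:.4f}")
```

A fully fooled discriminator outputs 0.5 everywhere, which gives a value of -2 log 2. A discriminator that separates the two classes well gets a higher value, and that gap is what the generator works to close.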

Applications of GANs in Synthetic Data Generation

GANs are used across many domains to generate high-quality synthetic data. Here are some notable applications:

- Healthcare: GANs generate synthetic medical images, aiding in training models without compromising patient privacy. For example, synthetic MRI or CT scans can be used for developing diagnostic models.

- Finance: Synthetic financial data helps in risk modeling and fraud detection without exposing sensitive information. GANs can generate transaction data that mimic real-world patterns.

- Autonomous Vehicles: GANs create diverse driving scenarios for training self-driving cars, enhancing their ability to handle real-world situations. Synthetic images of road conditions, pedestrians, and traffic can significantly improve the robustness of autonomous systems.

- Retail: GANs can generate synthetic customer purchase data, aiding in inventory management, personalized marketing, and sales forecasting without compromising individual privacy.

Enhancing Data Diversity

One of the primary advantages of using GANs is their ability to enhance data diversity. Diverse datasets are crucial for training robust machine learning models that generalize well to unseen data. GANs achieve this by generating a wide range of synthetic samples that cover various aspects of the data distribution.
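Diversity is worth measuring rather than assuming, because a generator can also end up producing many near-identical samples. As a rough sketch, the average pairwise distance between generated samples gives a quick signal: a value close to zero means the samples are nearly the same. The helper `mean_pairwise_distance` and the random arrays standing in for generator output are assumptions made for illustration.

```python
import numpy as np

def mean_pairwise_distance(samples):
    """Average Euclidean distance between all distinct pairs of samples."""
    flat = np.asarray(samples, dtype=np.float64).reshape(len(samples), -1)
    diffs = flat[:, None, :] - flat[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    upper = np.triu_indices(len(flat), k=1)  # count each pair once
    return float(dists[upper].mean())

rng = np.random.default_rng(0)
varied = rng.uniform(-1, 1, size=(16, 28, 28, 1))  # stand-in for diverse generator output
collapsed = np.repeat(varied[:1], 16, axis=0)      # stand-in for 16 identical outputs

varied_score = mean_pairwise_distance(varied)
collapsed_score = mean_pairwise_distance(collapsed)
print(f"varied: {varied_score:.3f}, collapsed: {collapsed_score:.3f}")
```

Raw pixel distance is a crude measure. It is useful as a sanity check during training, not as a full assessment of how well the samples cover the data distribution.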

Code Snippet: Generating Diverse Images Using GANs

Below is a simple GAN implemented with TensorFlow and Keras that learns to generate synthetic images of handwritten digits from the MNIST dataset:

```python
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras.layers import (
    BatchNormalization, Conv2D, Conv2DTranspose, Dense, Flatten, LeakyReLU, Reshape,
)
from tensorflow.keras.models import Sequential
from tensorflow.keras.optimizers import Adam


# Define the generator model: latent vector -> 28x28x1 image in [-1, 1]
def build_generator(latent_dim):
    model = Sequential([
        Dense(256 * 7 * 7, activation='relu', input_dim=latent_dim),
        Reshape((7, 7, 256)),
        BatchNormalization(),
        Conv2DTranspose(128, kernel_size=4, strides=2, padding='same', activation='relu'),  # 7x7 -> 14x14
        BatchNormalization(),
        Conv2DTranspose(64, kernel_size=4, strides=2, padding='same', activation='relu'),  # 14x14 -> 28x28
        BatchNormalization(),
        Conv2DTranspose(1, kernel_size=7, activation='tanh', padding='same'),
    ])
    return model


# Define the discriminator model: image -> probability that the image is real
def build_discriminator(img_shape):
    model = Sequential([
        Conv2D(64, kernel_size=4, strides=2, padding='same', input_shape=img_shape),
        LeakyReLU(alpha=0.2),
        Conv2D(128, kernel_size=4, strides=2, padding='same'),
        LeakyReLU(alpha=0.2),
        Flatten(),
        Dense(1, activation='sigmoid'),
    ])
    return model


# Build and compile the GAN model (generator followed by discriminator)
def build_gan(generator, discriminator, latent_dim):
    discriminator.compile(loss='binary_crossentropy', optimizer=Adam(), metrics=['accuracy'])
    # Freeze the discriminator so that only the generator is updated
    # when the combined model is trained
    discriminator.trainable = False
    gan_input = tf.keras.Input(shape=(latent_dim,))
    generated_img = generator(gan_input)
    gan_output = discriminator(generated_img)
    gan = tf.keras.Model(gan_input, gan_output)
    gan.compile(loss='binary_crossentropy', optimizer=Adam())
    return gan


# Training the GAN
def train_gan(gan, generator, discriminator, epochs, batch_size, latent_dim, img_shape):
    (x_train, _), (_, _) = tf.keras.datasets.mnist.load_data()
    x_train = (x_train - 127.5) / 127.5  # Normalize the images to [-1, 1]
    x_train = np.expand_dims(x_train, axis=-1)  # Expand dimensions to include the channel

    real_labels = np.ones((batch_size, 1))
    fake_labels = np.zeros((batch_size, 1))

    # Each "epoch" here is a single training step on one random batch
    for epoch in range(epochs):
        # Train the discriminator on one real batch and one generated batch
        idx = np.random.randint(0, x_train.shape[0], batch_size)
        real_images = x_train[idx]
        noise = np.random.normal(0, 1, (batch_size, latent_dim))
        fake_images = generator.predict(noise, verbose=0)
        d_loss_real = discriminator.train_on_batch(real_images, real_labels)
        d_loss_fake = discriminator.train_on_batch(fake_images, fake_labels)
        d_loss = 0.5 * np.add(d_loss_real, d_loss_fake)

        # Train the generator: label generated images as real to fool the discriminator
        noise = np.random.normal(0, 1, (batch_size, latent_dim))
        g_loss = gan.train_on_batch(noise, real_labels)

        if epoch % 100 == 0:
            print(f"{epoch}/{epochs} [D loss: {d_loss[0]}, acc.: {100 * d_loss[1]}%] [G loss: {g_loss}]")
            sample_images(generator, epoch, latent_dim)


# Generate and save sample images
def sample_images(generator, epoch, latent_dim, examples=10, dim=(1, 10), figsize=(10, 1)):
    noise = np.random.normal(0, 1, (examples, latent_dim))
    generated_images = generator.predict(noise, verbose=0)
    generated_images = 0.5 * generated_images + 0.5  # Rescale images to [0, 1]
    plt.figure(figsize=figsize)
    for i in range(examples):
        plt.subplot(dim[0], dim[1], i + 1)
        plt.imshow(generated_images[i, :, :, 0], cmap='gray')
        plt.axis('off')
    plt.tight_layout()
    plt.savefig(f'gan_generated_image_epoch_{epoch}.png')
    plt.close()


# Set parameters
latent_dim = 100
img_shape = (28, 28, 1)
epochs = 10000
batch_size = 64

# Build and compile the GAN
generator = build_generator(latent_dim)
discriminator = build_discriminator(img_shape)
gan = build_gan(generator, discriminator, latent_dim)

# Train the GAN
train_gan(gan, generator, discriminator, epochs, batch_size, latent_dim, img_shape)
```

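This implementation defines the generator and discriminator models, combines them into a GAN, and trains it on the MNIST dataset. The train_gan function alternates between updating the discriminator on real and generated batches and updating the generator through the combined model, and it periodically saves a row of generated sample images. Note that each "epoch" in the loop is a single training step on one random batch, not a full pass over the dataset.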
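The layer sizes in the two models can be checked with simple arithmetic. With padding='same', a transposed convolution multiplies the spatial size by its stride, and a regular convolution divides it by the stride, rounding up. The small sketch below walks through the strides used above. The helper names are made up for this illustration.

```python
import math

def conv_transpose_same(size, stride):
    """Output size of a transposed convolution with padding='same'."""
    return size * stride

def conv_same(size, stride):
    """Output size of a strided convolution with padding='same'."""
    return math.ceil(size / stride)

# Generator: 7x7 feature map -> two stride-2 upsampling layers -> stride-1 output layer
gen_size = 7
for stride in (2, 2, 1):
    gen_size = conv_transpose_same(gen_size, stride)

# Discriminator: 28x28 image -> two stride-2 convolutions -> Flatten
disc_size = 28
for stride in (2, 2):
    disc_size = conv_same(disc_size, stride)
flattened_units = disc_size * disc_size * 128  # 128 filters in the last Conv2D

print(gen_size, disc_size, flattened_units)
```

This is why the generator starts from a 7x7 feature map: two stride-2 layers bring it to the 28x28 size of an MNIST image, and the discriminator reverses the same path before its final Dense layer.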

Addressing Privacy Concerns

Privacy is a central concern when dealing with sensitive data, especially in fields like healthcare and finance. GANs help by generating synthetic data that retains the statistical properties of the real data without releasing the original records themselves. Because a generator can still memorize and reproduce training samples, synthetic data should be checked before it is shared.

Workflow: Using GANs for Privacy-Preserving Data Generation

- Data Collection: Collect real-world data while ensuring compliance with privacy regulations.

- Model Training: Train the GAN on the collected data to learn its distribution.

- Synthetic Data Generation: Use the trained generator to produce synthetic data that mimics the real data.

- Model Validation: Validate the synthetic data to ensure it preserves the necessary characteristics of the real data.

With these steps in place, the synthetic data can be used to train machine learning models with a much lower risk of exposing individuals in the original dataset.
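The validation step above can be sketched with two simple checks on tabular data: how closely the synthetic data matches the real data in its summary statistics, and whether any synthetic record is a near-copy of a real one. The functions `summary_gap` and `nearest_real_distance`, the threshold, and the randomly generated arrays are all assumptions for illustration. A real project would pick metrics that suit its data and privacy requirements.

```python
import numpy as np

def summary_gap(real, synthetic):
    """Largest absolute difference in per-feature mean and standard deviation."""
    mean_gap = np.abs(real.mean(axis=0) - synthetic.mean(axis=0)).max()
    std_gap = np.abs(real.std(axis=0) - synthetic.std(axis=0)).max()
    return float(mean_gap), float(std_gap)

def nearest_real_distance(real, synthetic):
    """For each synthetic row, Euclidean distance to its closest real row."""
    diffs = synthetic[:, None, :] - real[None, :, :]
    return np.sqrt((diffs ** 2).sum(axis=-1)).min(axis=1)

rng = np.random.default_rng(42)
real = rng.normal(loc=5.0, scale=2.0, size=(500, 4))       # stand-in for real records
synthetic = rng.normal(loc=5.0, scale=2.0, size=(200, 4))  # stand-in for generator output
synthetic[0] = real[0]  # simulate one memorized record

mean_gap, std_gap = summary_gap(real, synthetic)
distances = nearest_real_distance(real, synthetic)
copies = np.flatnonzero(distances < 1e-6)  # hypothetical threshold for "exact copy"

print(f"mean gap {mean_gap:.2f}, std gap {std_gap:.2f}, copied rows {copies.tolist()}")
```

Small gaps suggest the synthetic data preserves basic statistics, and an empty list of copied rows suggests no record was reproduced verbatim. Neither check is a formal privacy guarantee.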

Real-World Implementation: A Case Study

Consider a healthcare scenario where a hospital wants to develop a machine learning model to detect anomalies in medical images. However, sharing patient data is restricted due to privacy concerns. By using GANs, the hospital can generate synthetic medical images that retain the essential features of real images. These synthetic images can then be used to train and validate the anomaly detection model, ensuring both model performance and patient privacy.

Solution Flow

- Data Preprocessing: Prepare and preprocess the real medical images.

- GAN Training: Train the GAN using the preprocessed images.

- Synthetic Image Generation: Generate synthetic medical images using the trained generator.

- Model Training and Validation: Train the anomaly detection model using the synthetic images and validate its performance.
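One common way to carry out the last step is to train on synthetic data and then test on a held-out set of real data, since that measures whether the synthetic images carry over to the real task. The sketch below shows the idea with a nearest-centroid classifier standing in for the anomaly detection model and random feature vectors standing in for image features. Every name and value in it is a placeholder, not part of the hospital scenario.

```python
import numpy as np

def fit_centroids(features, labels):
    """Compute one mean feature vector per class."""
    classes = np.unique(labels)
    centroids = np.stack([features[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def predict(features, classes, centroids):
    """Assign each sample to the class with the nearest centroid."""
    dists = np.linalg.norm(features[:, None, :] - centroids[None, :, :], axis=-1)
    return classes[dists.argmin(axis=1)]

def make_stand_in_data(rng, n):
    """Placeholder features: label 1 ("anomalous") is shifted away from label 0 ("normal")."""
    labels = np.arange(n) % 2
    features = rng.normal(0.0, 1.0, size=(n, 16)) + 3.0 * labels[:, None]
    return features, labels

rng = np.random.default_rng(7)
synthetic_x, synthetic_y = make_stand_in_data(rng, 400)  # plays the role of GAN output
real_x, real_y = make_stand_in_data(rng, 100)            # plays the role of held-out real data

# Train on synthetic data only, then evaluate on real data only
classes, centroids = fit_centroids(synthetic_x, synthetic_y)
accuracy = float((predict(real_x, classes, centroids) == real_y).mean())
print(f"accuracy on real data after training on synthetic data: {accuracy:.2f}")
```

If a model trained on synthetic images performs much worse on real images than a model trained on real images does, the generator is probably missing features that matter for the task.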

Future Prospects of GANs in Synthetic Data Generation

The future of GANs in synthetic data generation is promising, with ongoing research focused on improving the quality and diversity of generated data. Innovations such as StyleGAN and BigGAN have already demonstrated significant advancements in generating high-resolution and diverse images. Moreover, integrating GANs with other AI techniques could further enhance their capabilities, opening up new possibilities in various fields.

Generative Adversarial Networks have proven to be a game-changer in synthetic data generation, addressing critical issues of data diversity and privacy. By generating high-quality synthetic data, GANs enable the development of robust machine learning models while safeguarding sensitive information. As research and development in this field continue to advance, the potential applications of GANs are bound to expand, driving further innovation in artificial intelligence.