In this article, we will explore the concept of hierarchical multi-agent workflows. We will discuss how to create a supervisor agent that can manage a team of worker agents and route tasks between them. We will also show how to create worker agents that can perform specific tasks, such as web research and code execution. Finally, we will build a multi-agent workflow that can be used to complete complex tasks.

This approach can be beneficial for a variety of tasks, such as customer service, data analysis, and software development. By using a hierarchical multi-agent workflow, we can break down complex tasks into smaller, more manageable subtasks. This can improve the efficiency and accuracy of our work.

Hierarchical Manner of Working

Most of us are used to working in a hierarchy. A manager assigns us tasks; we complete them and report back, and the manager decides what we should work on next. This makes large tasks manageable: the manager divides the work into subtasks and supervises how they are distributed among subordinates.

Supervisor AI Agent

Similarly, we can create a supervisor AI agent with multiple subordinate AI agents that follow its instructions. From the supervisor's point of view, the subordinate agents act like tools that it can invoke.

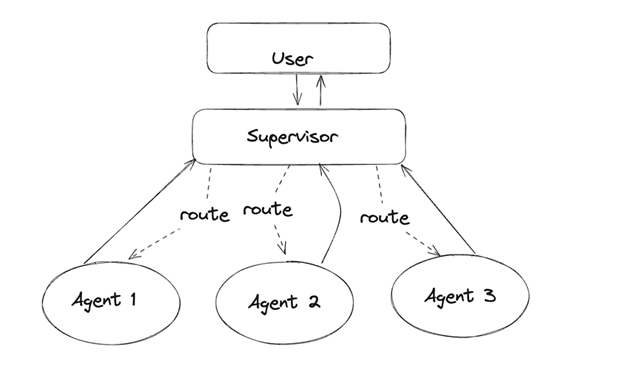

This concept is illustrated in the diagram below.

The user interacts with the supervisor AI agent, which has a team of AI agents at its disposal. The supervisor can route a message to any agent under its supervision; that agent performs the task and reports back to the supervisor. The supervisor may then route the work to another agent, and once the task is complete, the output is returned to the user.

Let’s create the multi-agent workflow with hierarchical agents. First, we create the LLM.

LLM

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(api_key="Your OpenAI API key here")
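Hard-coding a key in source code makes it easy to leak. As a minimal sketch, assuming the key has been exported as the OPENAI_API_KEY environment variable (which ChatOpenAI also reads by default when api_key is omitted), the key can be looked up at runtime instead. The helper name require_env is hypothetical and not part of LangChain.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set")
    return value


# Usage (assumes the key was exported in the shell beforehand):
# llm = ChatOpenAI(api_key=require_env("OPENAI_API_KEY"))
```

The same helper could be used for TAVILY_API_KEY in the next step.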

Tools

Next, let us create the tools and the associated libraries for them. We will use the Tavily Search tool and the Python REPL tool.

import os

os.environ["TAVILY_API_KEY"] = "Your Tavily API Key here"

from typing import Annotated, List, Tuple, Union

from langchain_community.tools.tavily_search import TavilySearchResults
from langchain_core.tools import tool
from langchain_experimental.tools import PythonREPLTool

# Web search tool that returns up to five results per query
tavily_tool = TavilySearchResults(max_results=5)

# Tool that executes Python code locally; it can run arbitrary code, so use with caution
python_repl_tool = PythonREPLTool()

Worker Agents

Now, let’s think about creating worker agents. One way is to write a function that can create a worker agent and provide it with a list of tools as needed.

The function wraps the agent in the AgentExecutor class, which runs the agent and executes its tool calls.

from langchain.agents import AgentExecutor, create_openai_tools_agent
from langchain_core.messages import BaseMessage, HumanMessage
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_openai import ChatOpenAI

def create_agent(llm: ChatOpenAI, tools: list, system_prompt: str):
    # Each worker node will be given a name and some tools.
    prompt = ChatPromptTemplate.from_messages(
        [
            ("system", system_prompt),
            # Conversation so far, taken from the graph state
            MessagesPlaceholder(variable_name="messages"),
            # Intermediate tool calls and results for the current run
            MessagesPlaceholder(variable_name="agent_scratchpad"),
        ]
    )
    agent = create_openai_tools_agent(llm, tools, prompt)
    executor = AgentExecutor(agent=agent, tools=tools)
    return executor
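To make the role of the executor and the agent_scratchpad placeholder more concrete, here is a rough, library-free sketch of a tool-calling loop: the agent either requests a tool or gives a final answer, and each tool result is recorded in a scratchpad for the next decision. This is an illustration under simplifying assumptions, not AgentExecutor's actual implementation; run_tool_loop and scripted_agent are hypothetical names, and the scripted function stands in for the LLM.

```python
from typing import Callable, Dict, List, Tuple, Union

# A "decision" is either ("call", tool_name, tool_input) or ("final", answer).
Decision = Union[Tuple[str, str, str], Tuple[str, str]]


def run_tool_loop(
    decide: Callable[[str, List[Tuple[str, str]]], Decision],
    tools: Dict[str, Callable[[str], str]],
    task: str,
    max_steps: int = 5,
) -> str:
    """Alternate between the agent's decision and tool execution until a final answer."""
    scratchpad: List[Tuple[str, str]] = []  # (tool_name, observation) pairs so far
    for _ in range(max_steps):
        decision = decide(task, scratchpad)
        if decision[0] == "final":
            return decision[1]
        _, tool_name, tool_input = decision
        scratchpad.append((tool_name, tools[tool_name](tool_input)))
    raise RuntimeError("agent did not finish within max_steps")


def scripted_agent(task, scratchpad):
    """Stand-in for the LLM: search once, then answer from the observation."""
    if not scratchpad:
        return ("call", "search", task)
    return ("final", f"Answer based on: {scratchpad[-1][1]}")


answer = run_tool_loop(scripted_agent, {"search": lambda q: f"results for {q!r}"}, "red pandas")
print(answer)
```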

Supervisor Agent

Next, we create the supervisor agent. The supervisor decides which agent handles the next task and when the overall task is complete. It receives the messages from the user or from a worker as human messages and either picks the agent that will act next or finishes the task.

from langchain.output_parsers.openai_functions import JsonOutputFunctionsParser
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder

members = ["Researcher", "Coder"]

system_prompt = (
    "You are a supervisor tasked with managing a conversation between the"
    " following workers: {members}. Given the following user request,"
    " respond with the worker to act next. Each worker will perform a"
    " task and respond with their results and status. When finished,"
    " respond with FINISH."
)

# Our team supervisor is an LLM node. It just picks the next agent to process
# and decides when the work is completed
options = ["FINISH"] + members

# Using openai function calling can make output parsing easier for us
function_def = {
    "name": "route",
    "description": "Select the next role.",
    "parameters": {
        "title": "routeSchema",
        "type": "object",
        "properties": {
            "next": {
                "title": "Next",
                "anyOf": [
                    {"enum": options},
                ],
            }
        },
        "required": ["next"],
    },
}

prompt = ChatPromptTemplate.from_messages(
    [
        ("system", system_prompt),
        MessagesPlaceholder(variable_name="messages"),
        (
            "system",
            "Given the conversation above, who should act next?"
            " Or should we FINISH? Select one of: {options}",
        ),
    ]
).partial(options=str(options), members=", ".join(members))

We then create a supervisor chain that pipes the prompt into the LLM, forces it to call the route function, and parses the routing decision from that call.

supervisor_chain = (
    prompt
    | llm.bind_functions(functions=[function_def], function_call="route")
    | JsonOutputFunctionsParser()
)
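The chain's result is a dictionary such as {"next": "Coder"}, decoded from the JSON arguments of the route function call. The enum in the schema guides the model, but it may not be strictly enforced, so checking the decoded value against the allowed options is a cheap safeguard. The sketch below shows that decoding and validation step with the standard json module only; parse_route is a hypothetical helper and not part of LangChain.

```python
import json

OPTIONS = ["FINISH", "Researcher", "Coder"]


def parse_route(arguments: str, options=OPTIONS) -> dict:
    """Decode the function-call arguments and check the chosen route is allowed."""
    decision = json.loads(arguments)
    if decision.get("next") not in options:
        raise ValueError(f"unexpected route: {decision.get('next')!r}")
    return decision


print(parse_route('{"next": "Coder"}'))
```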

For each agent, we define a node function that converts the agent's response into a human message. This makes the worker's output appear in the conversation as if it were user input rather than output from the LLM itself.

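def agent_node(state, agent, name):
    # Run the worker on the current graph state
    result = agent.invoke(state)
    # Return the worker's output as a human message tagged with the worker's name
    return {"messages": [HumanMessage(content=result["output"], name=name)]}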
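The behaviour of the node function can be tried without any API keys by substituting stand-ins. In the sketch below, FakeMessage replaces HumanMessage and EchoAgent replaces the agent executor; both are hypothetical and exist only to show the shape of the data going in and coming out, along with the functools.partial binding used later in the article.

```python
import functools
from typing import NamedTuple


class FakeMessage(NamedTuple):
    """Stand-in for HumanMessage so the sketch runs without LangChain."""
    content: str
    name: str


class EchoAgent:
    """Stand-in for an agent executor: returns a dict with an "output" key."""

    def invoke(self, state: dict) -> dict:
        last = state["messages"][-1]
        return {"output": f"done: {last.content}"}


def agent_node(state, agent, name):
    result = agent.invoke(state)
    return {"messages": [FakeMessage(content=result["output"], name=name)]}


# Pre-bind agent and name so the node only needs the graph state.
coder_node = functools.partial(agent_node, agent=EchoAgent(), name="Coder")
update = coder_node({"messages": [FakeMessage("print hello", "user")]})
print(update["messages"][0])
```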

Building The Graph

Now that we have the supervisor agent, the function for creating worker agents, and a node function that converts an agent response into a human message, it is time to create the worker agents and build the graph by connecting them to the supervisor.

Agent State

First, we create an object for the AgentState which will be the list of the messages and the next agent to route to.

import operator
from typing import Annotated, Any, Dict, List, Optional, Sequence, TypedDict
import functools

from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langgraph.graph import StateGraph, END

# The agent state is the input to each node in the graph
class AgentState(TypedDict):
    # The annotation tells the graph that new messages will always
    # be added to the current states
    messages: Annotated[Sequence[BaseMessage], operator.add]
    # The 'next' field indicates where to route to next
    next: str
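The effect of the operator.add annotation can be illustrated with plain lists: updates to messages are appended to the existing list, while a field without a reducer, such as next, is simply overwritten. The following is a simplified sketch of that merging rule, not LangGraph's internal code; apply_update is a hypothetical helper.

```python
import operator


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update: append to "messages", overwrite everything else."""
    merged = dict(state)
    for key, value in update.items():
        if key == "messages":
            merged[key] = operator.add(list(state.get(key, [])), list(value))
        else:
            merged[key] = value
    return merged


state = {"messages": ["user: plot a chart"], "next": "Coder"}
state = apply_update(state, {"messages": ["Coder: chart saved"]})
state = apply_update(state, {"next": "FINISH"})
print(state)
```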

Worker Agent — Researcher

Now, we create the worker agents using the create_agent function. First, we create a worker agent which will be a researcher using the Tavily search tool.

research_agent = create_agent(llm, [tavily_tool], "You are a web researcher.")

research_node = functools.partial(agent_node, agent=research_agent, name="Researcher")

Worker Agent — Coder

Next, we create another worker agent which will be a coder using the PythonREPL tool.

code_agent = create_agent(
    llm,
    [python_repl_tool],
    "You may generate safe python code to analyze data and generate charts using matplotlib.",
)

code_node = functools.partial(agent_node, agent=code_agent, name="Coder")

Next, we create the graph and register the nodes using the add_node function.

workflow = StateGraph(AgentState)
workflow.add_node("Researcher", research_node)
workflow.add_node("Coder", code_node)
workflow.add_node("supervisor", supervisor_chain)

Then we create the edges in the graph: add_edge connects each worker back to the supervisor, and add_conditional_edges routes from the supervisor according to the next field.

for member in members:
    # We want our workers to ALWAYS "report back" to the supervisor when done
    workflow.add_edge(member, "supervisor")

# The supervisor populates the "next" field in the graph state
# which routes to a node or finishes
conditional_map = {k: k for k in members}
conditional_map["FINISH"] = END

workflow.add_conditional_edges("supervisor", lambda x: x["next"], conditional_map)

We set the supervisor as the entry point of the multi-agent workflow, so it receives the user input first. Then we compile the graph.

# Finally, add entrypoint
workflow.set_entry_point("supervisor")

graph = workflow.compile()
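The control flow that the compiled graph follows can be mimicked in a few lines of plain Python: start at the supervisor, follow the conditional map to a worker, return to the supervisor, and stop when the map yields the end marker. This is a sketch under simplifying assumptions, not how LangGraph executes graphs internally. The supervisor here is a scripted stand-in for the LLM, the workers return canned text, and run and make_worker are hypothetical names; max_steps plays a role similar to a recursion limit.

```python
END = "__end__"  # stand-in for langgraph's END sentinel
members = ["Researcher", "Coder"]
conditional_map = {k: k for k in members}
conditional_map["FINISH"] = END


def supervisor(state):
    """Scripted stand-in for the LLM supervisor: each worker acts once, then FINISH."""
    done = {name for name, _ in state["messages"]}
    for member in members:
        if member not in done:
            return {"next": member}
    return {"next": "FINISH"}


def make_worker(name):
    """Build a worker node that returns one canned message tagged with its name."""
    return lambda state: {"messages": [(name, f"{name} finished its part")]}


nodes = {"supervisor": supervisor, **{m: make_worker(m) for m in members}}


def run(task, max_steps=10):
    state = {"messages": [("user", task)], "next": ""}
    current, trace = "supervisor", []
    for _ in range(max_steps):
        update = nodes[current](state)
        state["messages"] = state["messages"] + update.get("messages", [])
        state["next"] = update.get("next", state["next"])
        trace.append(current)
        # Workers always report back; the supervisor follows the conditional map.
        current = conditional_map[state["next"]] if current == "supervisor" else "supervisor"
        if current == END:
            return trace, state
    raise RuntimeError("step limit reached")


trace, final_state = run("write a report")
print(trace)
```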

Invoking the Team

We are now ready to invoke the AI agents team and get it to complete our tasks.

Let’s try out two simple tasks.

Task 1: Print “Hello, World!”

Task 2: Write a research report on Himalayan Pandas.

Task 1: Print “Hello, World!”

for s in graph.stream(
    {
        "messages": [
            HumanMessage(content="Code hello world and print it to the terminal")
        ]
    }
):
    if "__end__" not in s:
        print(s)
        print("----")

The output is:

{'supervisor': {'next': 'Coder'}}
----
Python REPL can execute arbitrary code. Use with caution.
{'Coder': {'messages': [HumanMessage(content='The code "Hello, World!" has been printed to the terminal.', name='Coder')]}}
----
{'supervisor': {'next': 'FINISH'}}
----
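Each streamed chunk is a dictionary keyed by the node that just ran, so the raw output can be condensed into a short trace. The sketch below assumes chunks shaped like the output above and uses plain strings in place of message objects; summarize is a hypothetical helper.

```python
def summarize(chunks):
    """Turn streamed {node_name: update} chunks into short one-line descriptions."""
    lines = []
    for chunk in chunks:
        for node, update in chunk.items():
            if "next" in update:
                lines.append(f"{node} -> {update['next']}")
            else:
                count = len(update.get("messages", []))
                lines.append(f"{node} replied with {count} message(s)")
    return lines


sample = [
    {"supervisor": {"next": "Coder"}},
    {"Coder": {"messages": ["Hello, World! was printed."]}},
    {"supervisor": {"next": "FINISH"}},
]
for line in summarize(sample):
    print(line)
```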

Task 2: Write a research report on Himalayan Pandas.

for s in graph.stream(
    {"messages": [HumanMessage(content="Write a brief research report on Himalayan Pandas.")]},
    {"recursion_limit": 100},
):
    if "__end__" not in s:
        print(s)
        print("----")

The output is:

{'supervisor': {'next': 'Researcher'}}
----
{'Researcher': {'messages': [HumanMessage(content="## Research Report on Himalayan Pandas\n\n### Introduction\nHimalayan Pandas, also known as Red Pandas, are fascinating and adorable animals that inhabit the mountainous regions of the Himalayas. They are unique creatures with distinct features and behaviors that make them a subject of interest and concern for conservation efforts. This research report delves into the characteristics, habitat, behavior, and conservation status of Himalayan Pandas based on information gathered from various sources.\n\n### Characteristics and Habitat\nHimalayan Pandas are comprised of two subspecies – the Himalayan Red Panda (Ailurus fulgens fulgens) and the Chinese Red Panda (A. fulgens styani). The Himalayan Red Panda resides in the mountains of northern India, Tibet, Bhutan, and Nepal, while the Chinese Red Panda is found in China's Sichuan and Yunnan provinces. These pandas are known for their reddish-brown coats, kitten-like faces, and impressive acrobatic skills in climbing and swinging on trees in their Asian forest habitats. The Himalayan Red Panda has whiter faces compared to the Chinese Red Panda, which has redder fur and striped tail rings.\n\n### Behavior\nRed Pandas, including the Himalayan Pandas, are solitary animals but display communication through tail arching, head bobbing, squealing, and unique sounds like a “huff-quack.” They are agile climbers and spend much of their time in trees, where their red fur blends with the moss clumps on their tree homes, providing camouflage against predators like snow leopards and jackals.\n\n### Conservation Status\nThe International Union for the Conservation of Nature (IUCN) considers Red Pandas, including the Himalayan Pandas, as endangered species. The Himalayan Red Panda, in particular, faces threats due to low genetic diversity and a small population size. Habitat destruction, fragmentation, deforestation, illegal activities, and human disturbances are major factors contributing to the decline in their numbers. Conservation efforts are crucial to protect these unique and charming creatures from extinction.\n\n### Conclusion\nHimalayan Pandas, with their striking appearance and intriguing behaviors, are a valuable part of the ecosystem in the Himalayan region. It is essential to raise awareness about their conservation needs and take action to safeguard their habitats and well-being. By understanding and appreciating these remarkable animals, we can contribute to their preservation and ensure a sustainable future for the Himalayan Pandas and their natural environment.", name='Researcher')]}}
----
{'supervisor': {'next': 'FINISH'}}
----

Congratulations! You have created a multi-agent workflow that consists of a supervisor that can supervise the work of multiple agents and return the work to the user.