Meta-learning, often dubbed “learning to learn,” is an exciting and rapidly growing area in machine learning. It focuses on developing algorithms that can learn new tasks quickly with minimal data by leveraging prior knowledge gained from related tasks. This approach is inspired by the human ability to apply past experience to new situations, making it a powerful tool for building adaptable and efficient ML models.

What is Meta-Learning?

Meta-learning seeks to optimize the process of learning itself. Instead of training a model from scratch for each new task, meta-learning aims to train a meta-learner that can quickly adapt to new tasks with few examples. This is particularly valuable in situations where data is scarce or expensive to obtain.

In traditional machine learning, a model is trained on a dataset for a single task and then evaluated on unseen data from that same task. In contrast, meta-learning involves a two-level learning process:

- Base-Level Learning: The model (or learner) learns to perform specific tasks. This stage involves standard supervised, unsupervised, or reinforcement learning methods.

- Meta-Level Learning: The meta-learner learns how to learn these tasks more effectively. It optimizes the learning algorithm itself, often across a distribution of tasks.
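One generic way to write the two levels down is shown below. The notation is introduced here for illustration and is not taken from a specific paper: p(T) is a distribution over tasks, D^train and D^test are a task's training and held-out examples, A_θ is the base learner whose behavior depends on meta-parameters θ (for example, an initialization or a learning rate), φ_T is what the base learner produces for task T, and L is a loss.

```latex
\begin{aligned}
\phi_{\mathcal{T}} &= \mathcal{A}_\theta\big(\mathcal{D}^{\text{train}}_{\mathcal{T}}\big)
  && \text{(base level: learn task } \mathcal{T} \text{ from its training data)} \\
\theta^{*} &= \arg\min_{\theta}\;
  \mathbb{E}_{\mathcal{T} \sim p(\mathcal{T})}
  \Big[\mathcal{L}\big(\phi_{\mathcal{T}}, \mathcal{D}^{\text{test}}_{\mathcal{T}}\big)\Big]
  && \text{(meta level: improve the learner across tasks)}
\end{aligned}
```

The meta level is scored on held-out data after adaptation, so θ is rewarded for making the base learner generalize from a task's training examples, not for fitting any single task.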

Why Meta-Learning Is Significant

Meta-learning’s ability to leverage previous knowledge makes it particularly useful in several scenarios:

- Few-Shot Learning: Meta-learning can excel in few-shot learning, where the goal is to train models that can learn new concepts from a very small number of examples. This is crucial in domains like medical diagnostics, where labeled data can be scarce.

- Task Adaptability: Meta-learning models can adapt quickly to new tasks, reducing the need for extensive retraining. This adaptability is valuable in dynamic environments where the underlying data distribution may change frequently.

- Efficient Transfer Learning: Meta-learning provides a framework for efficient transfer learning, allowing models to transfer knowledge from one task to another, provided the tasks share some underlying structure (in practice, they are usually assumed to come from a common task distribution).
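Few-shot learning is commonly organized into "N-way K-shot" episodes: each episode picks N classes, gives the learner K labeled examples per class (the support set), and scores it on further examples of those classes (the query set). The sketch below builds one such episode from a plain list of (example, label) pairs. The function name `sample_episode`, its parameters, and the toy dataset are hypothetical illustrations, not part of any library.

```python
import random
from collections import defaultdict

def sample_episode(dataset, n_way, k_shot, n_query, rng=random):
    """Build one N-way K-shot episode from a list of (example, label) pairs.

    Returns (support, query) as lists of (example, episode_label) pairs.
    Labels are re-indexed to 0..n_way-1, so each episode acts as a new task.
    """
    by_label = defaultdict(list)
    for example, label in dataset:
        by_label[label].append(example)
    # A class is usable only if it has enough examples for support and query.
    eligible = [lbl for lbl, xs in by_label.items() if len(xs) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query examples")
    support, query = [], []
    for episode_label, cls in enumerate(rng.sample(eligible, n_way)):
        # Draw without replacement so support and query never share an example.
        picks = rng.sample(by_label[cls], k_shot + n_query)
        support += [(x, episode_label) for x in picks[:k_shot]]
        query += [(x, episode_label) for x in picks[k_shot:]]
    return support, query

# Toy dataset: 10 classes with 20 placeholder "images" each.
toy = [(f"img_{c}_{i}", f"class_{c}") for c in range(10) for i in range(20)]
support, query = sample_episode(toy, n_way=5, k_shot=1, n_query=3, rng=random.Random(0))
print(len(support), len(query))  # 5 15
```

Re-indexing labels per episode is a deliberate choice: it stops the learner from memorizing fixed class identities and forces it to use the support set, which is the skill meta-learning is meant to build.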

Key Algorithms in Meta-Learning

Two prominent algorithms in meta-learning are Model-Agnostic Meta-Learning (MAML) and Reptile. Let’s explore these in more detail:

1. Model-Agnostic Meta-Learning (MAML)

MAML, proposed by Chelsea Finn et al., is a meta-learning algorithm that focuses on optimizing the model’s parameters such that it can adapt quickly to new tasks. MAML is model-agnostic, meaning it can be applied to any model trained with gradient descent, whether for classification, regression, or reinforcement learning, making it a versatile tool.

The key idea behind MAML is to train the model’s initial parameters so that only a few gradient steps are needed to fine-tune the model for a new task. Here’s a simplified version of how MAML works:

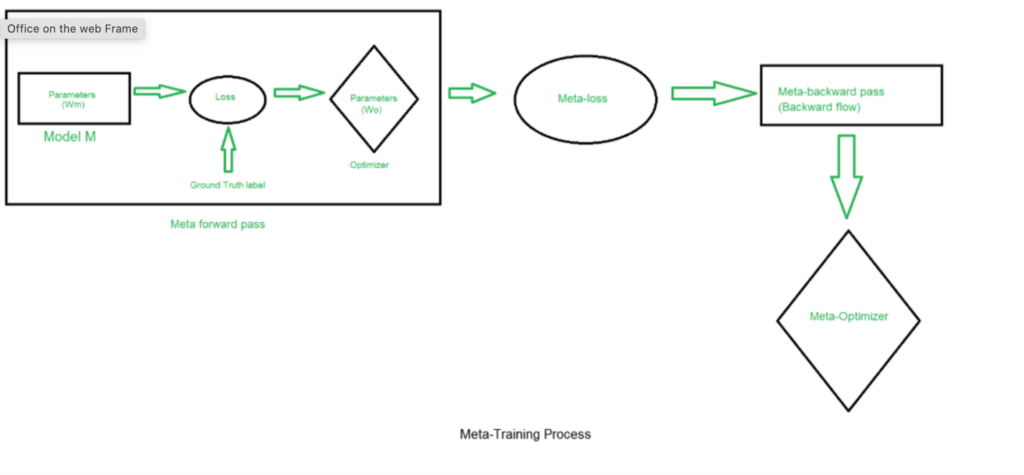

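- Meta-Training: During meta-training, the model is trained on multiple tasks. For each task, the model’s parameters are adapted with one or a few gradient steps on that task’s training examples. The adapted parameters are then evaluated on held-out examples from the same task, and the meta-learner updates the initial parameters to reduce this post-adaptation loss, differentiating through the task-specific updates.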

- Meta-Testing: In meta-testing, the model is evaluated on new tasks that were not seen during meta-training. Starting from the meta-learned initial parameters, it adapts using a few gradient steps on a handful of labeled examples.
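Written out for a single inner gradient step, the MAML updates from Finn et al. take the form below. The superscripts tr and val are added here to separate a task’s training (support) examples, used for adaptation, from its held-out (query) examples, used to score the adapted parameters; α and β are the inner and outer step sizes.

```latex
\begin{aligned}
\theta'_i &= \theta - \alpha \nabla_\theta \mathcal{L}^{\text{tr}}_{\mathcal{T}_i}(f_\theta)
  && \text{(inner step, per task)} \\
\theta &\leftarrow \theta - \beta \nabla_\theta \sum_{\mathcal{T}_i \sim p(\mathcal{T})}
  \mathcal{L}^{\text{val}}_{\mathcal{T}_i}\big(f_{\theta'_i}\big)
  && \text{(meta-update)} \\
\nabla_\theta \mathcal{L}^{\text{val}}_{\mathcal{T}_i}\big(f_{\theta'_i}\big)
  &= \big(I - \alpha \nabla^2_\theta \mathcal{L}^{\text{tr}}_{\mathcal{T}_i}(f_\theta)\big)\,
     \nabla_{\theta'} \mathcal{L}^{\text{val}}_{\mathcal{T}_i}\big(f_{\theta'_i}\big)
  && \text{(chain rule through the inner step)}
\end{aligned}
```

The last line shows where the cost comes from: because θ'_i depends on θ through a gradient step, the meta-gradient contains the Hessian of the inner loss. The first-order approximation discussed by Finn et al. drops that Hessian term.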

MAML has been shown to perform well in few-shot learning scenarios and has been widely used in various applications, including reinforcement learning and robotics.
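To make the meta-training and meta-testing steps concrete, here is a minimal, dependency-free sketch of second-order MAML on an assumed toy problem: each task is noise-free 1-D regression y = a·x with its own slope a, and the model f(x) = w·x has a single parameter, so the Hessian term can be written by hand. The task family, step sizes, and function names are illustrative assumptions, not taken from the MAML paper. Practical implementations obtain the same second-order term with automatic differentiation (for example, `torch.autograd.grad` with `create_graph=True`) rather than hand-derived formulas.

```python
import random

# Hypothetical toy task family (an assumption for illustration): each task is
# noise-free 1-D regression y = a * x with its own slope a ~ U(1, 3).
def sample_slope(rng):
    return rng.uniform(1.0, 3.0)

def sample_points(a, n, rng):
    return [(x, a * x) for x in (rng.uniform(-1.0, 1.0) for _ in range(n))]

def loss(w, data):
    # Mean squared error of the one-parameter model f(x) = w * x.
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def grad(w, data):
    # dL/dw = mean(2 * x * (w * x - y))
    return sum(2 * x * (w * x - y) for x, y in data) / len(data)

def hess(data):
    # d2L/dw2 = mean(2 * x**2); it does not depend on w because f is linear in w.
    return sum(2 * x * x for x, _ in data) / len(data)

def adapt(w, support, alpha):
    # Inner loop: one gradient step on the task's support (training) examples.
    return w - alpha * grad(w, support)

def maml_train(iterations=1000, meta_batch=10, alpha=0.5, beta=0.2,
               k_support=5, k_query=10, seed=0):
    rng = random.Random(seed)
    w = 0.0  # the initialization that MAML meta-learns
    for _ in range(iterations):
        meta_grad = 0.0
        for _ in range(meta_batch):
            a = sample_slope(rng)
            support = sample_points(a, k_support, rng)
            query = sample_points(a, k_query, rng)
            w_adapted = adapt(w, support, alpha)
            # Chain rule through the inner step: d(w_adapted)/dw = 1 - alpha * H.
            # This Hessian factor is the second-order term MAML keeps.
            meta_grad += grad(w_adapted, query) * (1 - alpha * hess(support))
        w -= beta * meta_grad / meta_batch  # outer (meta) update
    return w

# Meta-training, then meta-testing on a new task with slope 2.7.
w0 = maml_train()
rng = random.Random(1)
support, query = sample_points(2.7, 5, rng), sample_points(2.7, 10, rng)
pre = loss(w0, query)                        # query loss before adaptation
post = loss(adapt(w0, support, 0.5), query)  # query loss after one step
```

In this toy setting the meta-learned w0 settles near the middle of the slope range (around 2), and a single inner step on five support points lowers the query loss on the new task. Here the Hessian is a cheap scalar; in a deep network it is a large matrix, which is why full MAML is expensive.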

2. Reptile

Reptile, introduced by Alex Nichol et al., is another meta-learning algorithm that shares similarities with MAML but has some key differences. Reptile is simpler to implement and computationally more efficient, because it needs only first-order gradients.

The Reptile algorithm operates as follows:

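- Task Sampling: The algorithm samples a task and, starting from the current initial parameters, trains the model on this task for several steps of stochastic gradient descent.

- Meta-Update: Instead of differentiating through the inner-loop updates as MAML does (which involves second-order derivatives), Reptile moves the initial parameters a small step towards the task-specific parameters obtained after training: θ ← θ + ε(φ − θ), where φ denotes the adapted parameters and ε is the outer step size.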

Reptile’s simplicity and efficiency make it an attractive choice for many meta-learning applications. Like MAML, it is model-agnostic and applies to any model trained with gradient descent.
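The two Reptile steps can be sketched as follows, using the batched form of the update, θ ← θ + ε·(1/n)·Σ(φᵢ − θ), which averages the adapted parameters over n tasks. The toy task family (noise-free 1-D regression y = a·x), full-batch gradient descent in the inner loop, all step sizes, and all function names are illustrative assumptions rather than settings from the Reptile paper.

```python
import random

# Hypothetical toy task family (an assumption for illustration): noise-free
# 1-D regression y = a * x, slope a ~ U(1, 3), ten sampled points per task.
def sample_task(rng):
    a = rng.uniform(1.0, 3.0)
    return [(x, a * x) for x in (rng.uniform(-1.0, 1.0) for _ in range(10))]

def grad(w, data):
    # Gradient of the mean squared error of the model f(x) = w * x.
    return sum(2 * x * (w * x - y) for x, y in data) / len(data)

def inner_train(w, data, steps, lr):
    # Task-training step: plain gradient descent on one task,
    # starting from the current initialization.
    phi = w
    for _ in range(steps):
        phi -= lr * grad(phi, data)
    return phi

def reptile(iterations=500, meta_batch=10, inner_steps=5, inner_lr=0.5,
            epsilon=0.1, seed=0):
    rng = random.Random(seed)
    theta = 0.0  # the initialization that Reptile meta-learns
    for _ in range(iterations):
        phis = [inner_train(theta, sample_task(rng), inner_steps, inner_lr)
                for _ in range(meta_batch)]
        # Meta-update: move theta a fraction epsilon toward the average adapted
        # parameters. No gradient flows through inner_train, so no
        # second-order derivatives are computed.
        theta += epsilon * (sum(phis) / meta_batch - theta)
    return theta

theta0 = reptile()
```

A design note: with `inner_steps=1`, φ − θ is just `-inner_lr` times the task gradient, so the update collapses to ordinary gradient descent on the average task loss. Taking several inner steps is what makes Reptile behave differently from simply training one model on all tasks jointly.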

Future Directions in Meta-Learning

Meta-Learning for Continual Learning

Continual learning, where a model learns from a continuous stream of tasks without forgetting previous ones, is a promising area for applying meta-learning. Meta-learning algorithms could help in quickly adapting to new tasks while retaining knowledge from past tasks, thus addressing the challenge of catastrophic forgetting.

Meta-Reinforcement Learning

Meta-reinforcement learning combines meta-learning with reinforcement learning (RL) to enable agents to adapt to new environments with minimal additional training. This is particularly useful in scenarios where the environment may change over time, requiring the agent to adapt its behavior accordingly.

Neural Architecture Search (NAS) with Meta-Learning

Meta-learning can be leveraged for neural architecture search, where the goal is to automatically discover well-performing neural network architectures for a given task. By learning to optimize architectures across different tasks, meta-learning can reduce the computational cost and time required for NAS.

Interpretable Meta-Learning

As with other areas of ML, interpretability is becoming increasingly important in meta-learning. Developing methods that allow for understanding and explaining the decisions made by meta-learners can enhance trust and transparency, particularly in critical applications like healthcare and finance.

Conclusion

Meta-learning represents a significant advancement in the field of machine learning, enabling models to learn more efficiently and adapt to new tasks with minimal data. Algorithms like MAML and Reptile exemplify the power of meta-learning in building adaptable ML systems.

As research in this area continues to grow, we can expect even more innovative approaches and applications. Whether it’s improving few-shot learning, enhancing task adaptability, or developing more efficient transfer learning techniques, meta-learning is poised to play a crucial role in the future of AI and machine learning.