What is an LLM?

A large language model (LLM) is a type of artificial intelligence model: a neural network with many parameters, trained with self-supervised learning to process and generate human language. Applications of LLMs include text generation, machine translation, chatbots, code generation, summarization, image generation from text prompts, and conversational AI. OpenAI's ChatGPT and Google's BERT (Bidirectional Encoder Representations from Transformers) are two well-known examples.

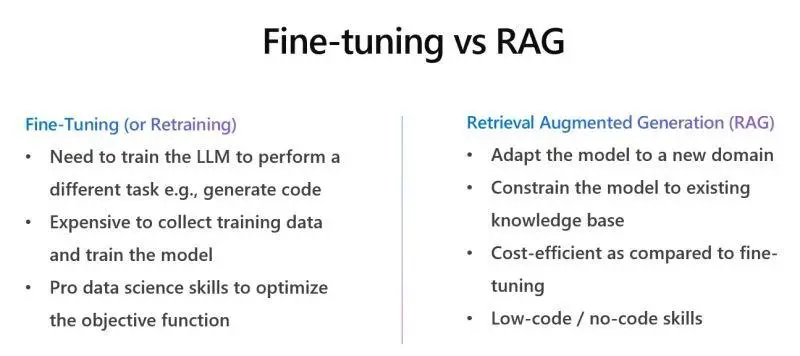

With the growing interest in Large Language Models (LLMs), numerous developers and organizations are working hard to build applications that fully utilize their potential. When a pre-trained LLM does not perform as expected out of the box, however, the question becomes how to improve the application's performance. Eventually, we reach a point where we ask ourselves, "Is there any way we can improve the results? Should we use RAG, or should we fine-tune the model?"

What is Retrieval-Augmented Generation?

Retrieval-Augmented Generation (RAG) is a technique for optimizing the output of a large language model: before producing a response, the model consults an authoritative knowledge base outside its training data. LLMs are trained on enormous amounts of data and use billions of parameters to generate original output for tasks like question answering, language translation, and sentence completion. RAG extends these already powerful capabilities to specific domains or to an organization's internal knowledge base, without requiring the model to be retrained. It is a cost-effective way to keep LLM output accurate, relevant, and useful in a variety of settings.

How does Retrieval-Augmented Generation work?

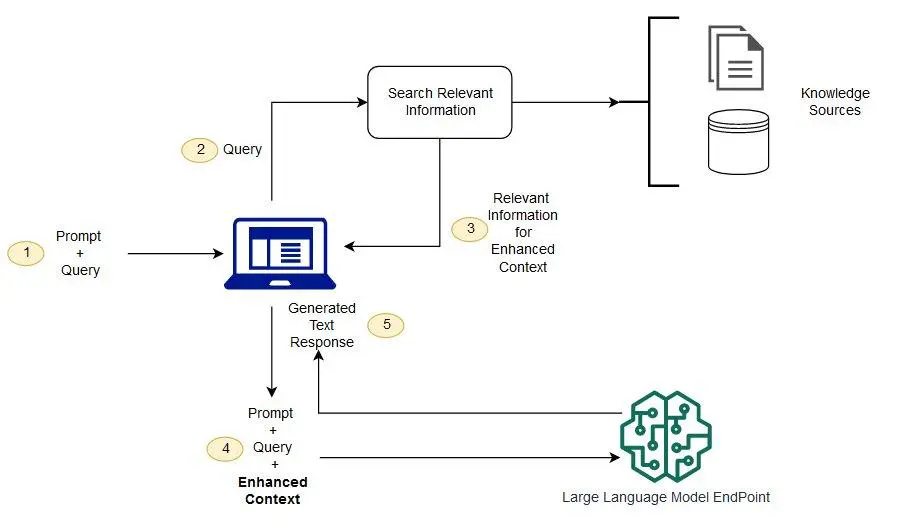

Without RAG, the LLM takes the user input and generates a response based only on what it learned from its training data. RAG introduces an information retrieval component that first uses the user input to pull information from a new data source. Both the user query and the retrieved information are then passed to the LLM, which draws on the new knowledge as well as its training data to produce a better response. The steps below outline the process.

- Create external data

External data is new information that was not part of the LLM's original training set. It may come from a variety of sources, including databases, document repositories, and APIs, and it may take the form of files, database records, or long-form text, among other formats. Another AI technique, the embedding language model, converts this data into numerical representations (vectors) and stores them in a vector database. The result is a knowledge library that generative AI models can work with.
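As a rough illustration of this step, the sketch below turns a couple of documents into vectors and keeps them in an in-memory dictionary. Everything in it is an assumption made for illustration: `embed` is a toy hashed bag-of-words function standing in for a real embedding model, `DIM` is an arbitrary size, the documents are invented, and a plain dictionary plays the role of the vector database.

```python
import hashlib
import math
import re

DIM = 64  # assumed toy dimensionality; real embedding models use far more


def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # A stable hash so the same token always lands in the same slot.
        slot = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % DIM
        vec[slot] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


# Hypothetical external documents (files, database rows, API results, ...).
documents = {
    "leave-policy": "Employees receive 25 days of annual leave per year.",
    "expense-policy": "Submit travel expenses within 30 days of the trip.",
}

# The stand-in "vector database": document id -> (embedding, original text).
vector_store = {doc_id: (embed(text), text) for doc_id, text in documents.items()}
```

In a real system the toy `embed` would be replaced by calls to an embedding model, and the dictionary by a proper vector database; the shape of the step (text in, vector stored alongside the source text) stays the same.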

- Retrieve relevant information

The next step is a relevance search. The user query is converted into a vector representation and matched against the vectors in the vector database. Think, for instance, of a smart chatbot that answers human resources questions for a company. If an employee asks, "How much annual leave do I have?", the system will retrieve the annual leave policy documents along with that employee's past leave record. These particular documents are returned because they are highly relevant to what the employee asked. Relevance is calculated using mathematical operations on the vector representations.
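One common way to compute this relevance is cosine similarity between the query vector and each stored vector. The sketch below assumes tiny hand-made three-dimensional vectors (the axis meanings, document ids, and the `retrieve` helper are all hypothetical); a real system would obtain the vectors from an embedding model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec: list[float], store: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), doc_id) for doc_id, vec in store.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Hand-made vectors; the assumed axes are [leave, payroll, travel].
store = {
    "annual-leave-policy": [0.9, 0.1, 0.0],
    "employee-leave-history": [0.8, 0.2, 0.1],
    "travel-expenses": [0.0, 0.2, 0.9],
}

query_vec = [1.0, 0.0, 0.0]  # stands in for "How much annual leave do I have?"
top_documents = retrieve(query_vec, store, k=2)
```

With these vectors, the two leave-related documents rank above the travel document, which mirrors the HR chatbot example. Vector databases typically replace the exhaustive loop with an approximate nearest-neighbor index so that the search scales to many documents.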

- Augment the LLM prompt

Next, the RAG system augments the user input (the prompt) by adding the relevant retrieved data as context. This step uses prompt engineering techniques to communicate effectively with the LLM. The augmented prompt allows the large language model to generate accurate answers to user queries.
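A minimal sketch of prompt augmentation might look like the following. The template wording, the `build_augmented_prompt` name, and the sample passages are assumptions chosen for illustration, not a prescribed format; the resulting string would be sent to whichever LLM the application uses.

```python
def build_augmented_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user question into one prompt."""
    # Number the passages so the model (and the reader) can refer to them.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical passages returned by the retrieval step.
prompt = build_augmented_prompt(
    "How much annual leave do I have?",
    [
        "Employees receive 25 days of annual leave per year.",
        "This employee has taken 10 days of leave this year.",
    ],
)
print(prompt)
```

Instructing the model to rely on the supplied context, and to say so when the context falls short, is one simple prompt engineering choice for keeping answers tied to the retrieved data.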

- Update external data

The next question might be: what if the external data goes out of date? To keep the information available for retrieval current, update the documents and their embedding representations asynchronously. This can be done through automated real-time processes or periodic batch processing. It is a common challenge in data analytics, and there are various data-science approaches to change management.
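One simple batch-style approach, sketched below under stated assumptions, is to store a content hash next to each embedding and re-embed only the documents whose hash has changed. The index layout, the `refresh_index` helper, and `toy_embed` are all hypothetical; `toy_embed` merely stands in for a real embedding call.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Content hash used to detect whether a document has changed."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def refresh_index(index: dict, documents: dict[str, str], embed) -> list[str]:
    """Re-embed new or changed documents, drop deleted ones, return updated ids."""
    updated = []
    for doc_id, text in documents.items():
        digest = fingerprint(text)
        entry = index.get(doc_id)
        if entry is None or entry["hash"] != digest:
            index[doc_id] = {"hash": digest, "vector": embed(text)}
            updated.append(doc_id)
    # Remove index entries whose source document no longer exists.
    for doc_id in list(index):
        if doc_id not in documents:
            del index[doc_id]
    return updated


def toy_embed(text: str) -> list[float]:
    """Placeholder embedding (assumption): a real system calls an embedding model."""
    return [float(len(text))]


index: dict = {}
docs = {"policy": "25 days of leave", "faq": "Contact HR"}
first_run = refresh_index(index, docs, toy_embed)   # both documents are new

docs["policy"] = "30 days of leave"                 # the policy changes
second_run = refresh_index(index, docs, toy_embed)  # only "policy" is re-embedded
```

Running a function like this on a schedule gives periodic batch updates; triggering it from a document-change event gives something closer to real-time updates.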

The conceptual flow of using RAG with LLMs is depicted in the diagram at the start of this section.

What is fine-tuning?

During fine-tuning, the weights of a pre-trained language model are updated on a new task and dataset. The main difference between training and fine-tuning is the starting point: training from scratch begins with a randomly initialized model and fits it to a given task and dataset, whereas fine-tuning starts from a pre-trained model and adjusts its weights to achieve better performance on the new task.

As an example, consider the task of performing sentiment analysis on movie reviews. Rather than building a new model from scratch, you could start with a pre-trained language model such as GPT-3, which has already been trained on a large corpus of text. You would then fine-tune that model on a smaller dataset of movie reviews for the specific task of sentiment analysis. In this way, the model learns to perform sentiment analysis.
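The idea can be shown in miniature with a toy classifier. The sketch below is not how a model like GPT-3 is fine-tuned in practice; it assumes a tiny bag-of-words logistic regression whose hand-written "pre-trained" weights stand in for a pre-trained model, and it continues gradient descent from those weights on a few invented movie reviews. All names and data here are hypothetical.

```python
import math


def features(text: str) -> list[str]:
    return text.lower().split()


def predict(weights: dict, bias: float, text: str) -> float:
    """Probability that the review is positive."""
    z = bias + sum(weights.get(tok, 0.0) for tok in features(text))
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(weights: dict, bias: float, data: list) -> float:
    """Average log loss over (text, label) pairs."""
    eps = 1e-12
    total = 0.0
    for text, label in data:
        p = predict(weights, bias, text)
        total -= label * math.log(p + eps) + (1 - label) * math.log(1 - p + eps)
    return total / len(data)


def fine_tune(weights: dict, bias: float, data: list, lr: float = 0.5, epochs: int = 20):
    """Continue training from the given weights instead of starting at random."""
    weights = dict(weights)  # copy, so the pre-trained weights stay untouched
    for _ in range(epochs):
        for text, label in data:
            error = predict(weights, bias, text) - label  # log-loss gradient w.r.t. z
            for tok in features(text):
                weights[tok] = weights.get(tok, 0.0) - lr * error
            bias -= lr * error
    return weights, bias


# Hypothetical "pre-trained" weights: generic sentiment words only.
pretrained_weights = {"good": 1.0, "bad": -1.0, "great": 1.5, "terrible": -1.5}
pretrained_bias = 0.0

# Small, invented task-specific dataset (1 = positive, 0 = negative).
movie_reviews = [
    ("a gripping plot and great acting", 1),
    ("the pacing was slow and the ending predictable", 0),
    ("gripping from start to finish", 1),
    ("slow predictable and bad", 0),
]

loss_before = mean_loss(pretrained_weights, pretrained_bias, movie_reviews)
tuned_weights, tuned_bias = fine_tune(pretrained_weights, pretrained_bias, movie_reviews)
loss_after = mean_loss(tuned_weights, tuned_bias, movie_reviews)
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
```

The fine-tuned model keeps what the starting weights already encoded ("great", "bad") and adds movie-specific vocabulary such as "gripping" and "predictable", which is the essence of adjusting pre-trained weights rather than learning everything anew.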

Fine-tuning has several advantages over training from scratch, such as shorter training time and the ability to reach state-of-the-art results with less data. The sections that follow look at fine-tuning in more detail, starting with the situations that call for it.

When Do You Need to Fine-Tune?

The following are some situations where fine-tuning may be necessary:

Transfer learning

When you want to transfer the knowledge of a pre-trained language model to a different task or domain. For example, you may fine-tune a model that was pre-trained on a large corpus of news articles to classify a smaller dataset of scientific papers by topic.

Data security and compliance

Fine-tuning language models can support data security and compliance, since it allows them to adapt quickly to changing rules, strengthen defenses against new threats, and streamline compliance auditing with specialized analytic tools. For example, a model can be modified in response to new data-breach methods in order to strengthen an organization's defenses and ensure compliance with new data protection regulations.

Customization

When you need to tailor a model to your organization's own requirements, including changing regulations and evolving threats. For example, when a new data-breach technique emerges, you may adjust a model to strengthen the organization's defenses and ensure compliance with updated data protection standards.

Domain-specific task

When you have a specific task that calls for industry or subject expertise. For example, if you are working on a task that requires analyzing legal documents, you can fine-tune a pre-trained model on a dataset of legal documents to improve its accuracy.

Limited labeled data

If you have limited labeled data, fine-tuning a pre-trained language model can improve its performance for your specific purpose. Let's say you are building a chatbot that needs to understand customer inquiries. You can improve the capabilities of a pre-trained language model such as GPT-3 by fine-tuning it on a small dataset of labeled customer inquiries.

On the other hand, if you have a large dataset and are working on a novel task or domain, fine-tuning a pre-trained model might be less efficient than training a language model from scratch.

Whether to train a language model from scratch or fine-tune an existing one depends on the particular use case and dataset. Before deciding, it is important to weigh the advantages and disadvantages of both approaches carefully.

Benefits of fine-tuning LLM applications:

Enhanced performance: By customizing pre-trained models to certain tasks or domains, fine-tuning LLMs can enhance performance.

Effective use of resources: Fine-tuning an LLM uses fewer resources than building a language model from the ground up. It starts with a pre-trained model and reuses the knowledge that model has already acquired, saving time and computing resources and reducing the amount of training data needed.

New data adaptation: Fine-tuning lets the model adapt to new data or to shifts in the underlying data distribution. During the fine-tuning process, the model is exposed to task-specific examples, which help it learn to handle the variations, trends, or biases in that particular dataset.

Task customization: When fine-tuning LLMs, one can adapt the model's architecture, loss functions, and training objective to suit specific tasks or goals. This flexibility makes task-specific optimization possible, which improves results in the intended application.

Use cases where fine-tuning an LLM works best:

- Content creation tasks: Fine-tuning an LLM can improve its ability to produce content tailored to a certain domain or use case.

- Customer support chatbots: By tailoring the model to certain nuances, tones, or terminologies, fine-tuning LLMs can improve the accuracy of LLM-generated responses in customer care chatbots.

- Domain-specific databases: By integrating crucial information from domain-specific databases, fine-tuned LLMs can produce more accurate replies.

- Medical applications: Domain-specific fine-tuning can improve LLM performance in medical applications.

- Instruction tuning: Fine-tuning an LLM on instruction-response examples can improve how well it follows instructions.

We cannot simply vote for RAG or for fine-tuning, because selecting one means ignoring the other. Both are effective methods of improving LLM performance, and the right choice depends on your business needs.

In my opinion, RAG is better suited to tasks where the application needs access to current data to provide accurate answers. It is also a suitable option when labeled data is scarce or costly to obtain. Because RAG grounds its answers in retrieved documents, it is the better choice for tasks where precision cannot be compromised.

Fine-tuning, however, will benefit applications whose tasks involve intricate patterns and relationships.