Large Language Models (LLMs) have transformed Natural Language Processing (NLP), but their size and computational demands make them expensive to store and run. Model compression addresses this by shrinking models and reducing the compute they need, making them more accessible and less energy-intensive.

Unveiling Model Compression Techniques

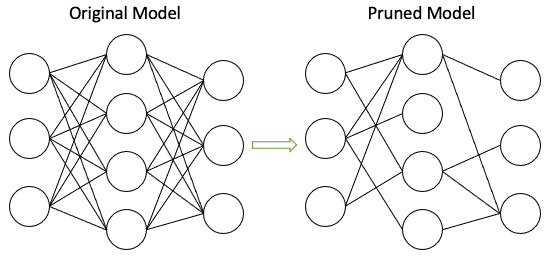

Pruning

Pruning, akin to a gardener trimming dead branches, removes weights or connections between neurons that contribute little to the model's output, improving efficiency with little or no loss in performance.

- Unstructured Pruning: Removes individual weights anywhere in the model, producing sparse weight matrices with an irregular pattern of zeros. Methods such as SparseGPT prune large models in one shot, avoiding costly retraining while still reaching high sparsity levels.

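- Structured Pruning: Removes entire structural components, such as neurons, attention heads, or layers, so the remaining weight matrices stay dense and run efficiently on standard hardware. Recent work refines how the importance of each component is estimated, so that the most distinctive ones are kept.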
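
To make the two pruning styles concrete, here is a minimal Python sketch on a small hypothetical weight matrix `W`. It uses plain magnitude pruning (drop the weights with the smallest absolute values), which is a simple baseline and not SparseGPT's method, and it scores rows by their L2 norm for structured pruning, which is only one possible importance criterion. The function names are illustrative.

```python
import math

def unstructured_prune(weights, sparsity):
    """Zero the smallest-magnitude individual weights (magnitude pruning)."""
    rows, cols = len(weights), len(weights[0])
    k = int(rows * cols * sparsity)               # how many weights to remove
    ranked = sorted((abs(weights[i][j]), i, j) for i in range(rows) for j in range(cols))
    pruned = [row[:] for row in weights]          # copy; the matrix keeps its shape
    for _, i, j in ranked[:k]:
        pruned[i][j] = 0.0
    return pruned

def structured_prune_rows(weights, keep):
    """Drop whole rows (e.g. output neurons) with the smallest L2 norm."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in weights]
    strongest = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)[:keep]
    kept = sorted(strongest)                      # preserve the original row order
    return [weights[i][:] for i in kept], kept

# Hypothetical 4 x 3 weight matrix
W = [[0.9, -0.1, 0.4],
     [0.05, 0.02, -0.03],
     [-0.7, 0.6, 0.01],
     [0.2, -0.8, 0.3]]

print(unstructured_prune(W, 0.5))    # same 4 x 3 shape, half the entries zeroed
print(structured_prune_rows(W, 2))   # a smaller, dense 2 x 3 matrix plus kept row indices
```

Note the difference in output: unstructured pruning keeps the 4 × 3 shape and only adds zeros, so real speedups need sparse-aware kernels or hardware, whereas structured pruning returns a smaller dense matrix that any standard matrix routine can use directly.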

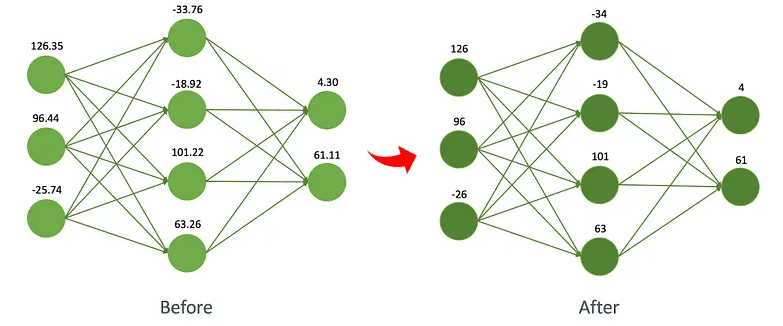

Quantization

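Quantization stores a model's weights (and sometimes its activations) with fewer bits, for example as 8-bit integers instead of 16- or 32-bit floating-point numbers. This reduces memory use and computational cost, usually with only a small loss in accuracy.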

- Quantization-Aware Training (QAT): Simulates quantization during training or fine-tuning, so the model learns to compensate for the reduced precision. Techniques like LLM-QAT and PEQA apply this idea to LLMs to compress them and accelerate inference.

- Post-Training Quantization (PTQ): Quantizes the parameters of an already trained model without further training, improving computational efficiency without altering the model architecture. Methods like LUT-GEMM speed up the matrix multiplications that involve quantized weights.
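
The core PTQ step can be sketched as symmetric "absmax" quantization: scale a tensor so its largest magnitude maps to the largest signed integer, round, and store the integers plus one floating-point scale. This is a minimal sketch assuming per-tensor scaling and hypothetical weight values; it is not LUT-GEMM or any specific library's implementation. QAT typically applies the same quantize-then-dequantize step ("fake quantization") inside the forward pass during training, which this sketch does not show.

```python
def quantize_symmetric(values, bits=8):
    """Symmetric per-tensor quantization: floats -> signed integers in [-qmax, qmax]."""
    qmax = 2 ** (bits - 1) - 1                    # 127 when bits == 8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the representable range
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Recover approximate floats; the error per value is at most scale / 2."""
    return [x * scale for x in q]

# Hypothetical weights
weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_symmetric(weights)
restored = dequantize(q, scale)
print(q)          # [50, -127, 0, 100]
print(restored)   # close to the originals; 0.003 is lost to rounding
```

Only the integers and a single scale need to be stored, which is where the memory saving comes from; the rounding error is bounded by half a quantization step.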

Precision Methods

Quantization approaches vary in precision:

- 8-bit quantization: Stores weights (and often activations) in 8 bits, roughly halving memory use compared with 16-bit floating point while keeping accuracy close to that of the original model.

- Lower-bit quantization: Techniques target ultra-low precisions of 3 to 4 bits and rely on strategies such as outlier suppression and activation-aware quantization to preserve accuracy.
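
Low-bit quantization is sensitive to outliers because a single large value stretches the shared scale. The sketch below, assuming hypothetical weights and a group size of 4, compares 4-bit quantization with one scale for the whole tensor against per-group scales. Group-wise scaling is just one simple way to limit outlier damage; it is not the outlier-suppression or activation-aware methods mentioned above. The helper `weight_memory_gb` adds back-of-the-envelope memory arithmetic for a hypothetical 7-billion-parameter model, counting weights only.

```python
def quantize_dequantize(values, bits):
    """Symmetric absmax quantization followed by dequantization (one shared scale)."""
    qmax = 2 ** (bits - 1) - 1                    # 7 when bits == 4
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in values]

def groupwise(values, bits, group_size):
    """Quantize consecutive groups separately so each group gets its own scale."""
    out = []
    for start in range(0, len(values), group_size):
        out.extend(quantize_dequantize(values[start:start + group_size], bits))
    return out

def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def weight_memory_gb(n_params, bits):
    """Weight storage only; ignores scales, activations, and the KV cache."""
    return n_params * bits / 8 / 1e9

# Hypothetical weight row: small values plus one large outlier
weights = [0.02, -0.03, 0.01, 0.04, -0.02, 0.03, -0.01, 8.0]
per_tensor = quantize_dequantize(weights, bits=4)
per_group = groupwise(weights, bits=4, group_size=4)
print(mean_abs_error(weights, per_tensor))   # every small weight collapses to 0
print(mean_abs_error(weights, per_group))    # smaller: the first group keeps its detail

# Memory for a hypothetical 7-billion-parameter model at 16, 8, and 4 bits
for bits in (16, 8, 4):
    print(bits, weight_memory_gb(7e9, bits))   # 14.0, 7.0, and 3.5 GB
```

The second group still loses its small values because it shares a scale with the outlier; smaller groups reduce that effect at the cost of storing more scales.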

Knowledge Distillation

Knowledge Distillation (KD) compresses LLMs by training a smaller student model to reproduce the behavior of a larger teacher model. KD methods for LLM compression fall into white-box and black-box approaches, which differ in how much of the teacher the student can access.

- White-box Knowledge Distillation: The student has access to the teacher's parameters and output probability distributions, not just its generated text, so it can imitate the teacher's knowledge more closely. Techniques like MINILLM and GKD exemplify this: they refine the quality of the samples the student learns from and handle the mismatch between training and generation distributions to improve student performance.

- Black-box Knowledge Distillation: The student sees only the teacher's outputs, as with a model available solely through an API. These methods draw on the teacher's emergent abilities, such as In-Context Learning (ICL), Chain-of-Thought (CoT) reasoning, and Instruction Following (IF), to produce training data for smaller models. Techniques such as TF-LLMD and CoT-based strategies use these abilities to make resource-constrained models more adaptable and efficient in real-world applications.
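
In white-box distillation, a common objective is the KL divergence between the teacher's and the student's output distributions, softened with a temperature. The minimal sketch below computes it for a single token position over a hypothetical 3-token vocabulary; in practice it is averaged over positions with vocabulary-sized logits. The `reverse` flag switches to KL(student || teacher), the direction MINILLM is described as optimizing, but the sketch does not reproduce MINILLM's or GKD's training procedures. Black-box distillation cannot use this loss, since the teacher's probabilities are unavailable; there the student is typically fine-tuned with ordinary cross-entropy on teacher-generated text.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                               # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; assumes q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(student_logits, teacher_logits, temperature=2.0, reverse=False):
    """Temperature-softened KD loss for one token position."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    if reverse:
        kl = kl_divergence(p_student, p_teacher)  # KL(student || teacher)
    else:
        kl = kl_divergence(p_teacher, p_student)  # KL(teacher || student), the classic choice
    # Multiplying by T^2 is a common convention that keeps gradient magnitudes
    # comparable when the temperature changes.
    return kl * temperature ** 2

# Hypothetical logits over a 3-token vocabulary
teacher = [2.0, 1.0, 0.1]
student = [1.5, 0.5, 1.0]
print(distillation_loss(teacher, teacher))              # 0.0: identical distributions
print(distillation_loss(student, teacher))              # positive: the student disagrees
print(distillation_loss(student, teacher, reverse=True))
```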

Low-Rank Factorization

Low-Rank Factorization approximates a large weight matrix by the product of two smaller matrices. An m × n matrix approximated at rank r needs only r(m + n) parameters instead of mn, which reduces both storage and computation when r is much smaller than m and n. For LLMs, methods like TensorGPT use Tensor-Train Decomposition (TTD) to compress the embedding layer, and are reported to achieve substantial compression there while maintaining, and sometimes improving, the performance of the original model.
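
The parameter saving is easiest to see with a plain matrix factorization. The sketch below, in dependency-free Python, finds a rank-r approximation W ≈ UV by truncated SVD, estimating one singular vector at a time with power iteration and subtracting each component from the residual. This is ordinary low-rank matrix factorization, not the tensor-train decomposition TensorGPT uses, and the test matrix is a hypothetical, exactly rank-2 example; a real implementation would call an optimized SVD routine instead.

```python
import math
import random

def transpose(A):
    return [list(col) for col in zip(*A)]

def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def matmul(A, B):
    Bt = transpose(B)
    return [[sum(a * b for a, b in zip(row, col)) for col in Bt] for row in A]

def top_singular_triplet(A, iters=200, seed=0):
    """Estimate the largest singular value and vectors of A by power iteration on A^T A."""
    rng = random.Random(seed)
    At = transpose(A)
    v = [rng.uniform(-1.0, 1.0) for _ in range(len(A[0]))]
    for _ in range(iters):
        w = matvec(At, matvec(A, v))              # w = A^T A v
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:                           # A is (numerically) zero
            break
        v = [x / norm for x in w]
    Av = matvec(A, v)
    sigma = math.sqrt(sum(x * x for x in Av))
    u = [x / sigma for x in Av] if sigma > 0 else Av
    return sigma, u, v

def low_rank_factors(W, rank):
    """Return U (m x rank) and V (rank x n) so that W is approximately U @ V."""
    residual = [row[:] for row in W]
    u_cols, v_rows = [], []
    for _ in range(rank):
        sigma, u, v = top_singular_triplet(residual)
        u_cols.append([sigma * x for x in u])     # fold sigma into the left factor
        v_rows.append(v)
        # Deflate: remove the component just found before looking for the next one
        residual = [[residual[i][j] - sigma * u[i] * v[j] for j in range(len(v))]
                    for i in range(len(u))]
    U = [[col[i] for col in u_cols] for i in range(len(W))]
    return U, v_rows

def max_abs_diff(A, B):
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))

# Hypothetical 6 x 5 matrix built to have rank exactly 2
a1, b1 = [1, 2, 0, 1, 3, 1], [2, 0, 1, 1, 0]
a2, b2 = [0, 1, 1, -1, 0, 2], [0, 1, 0, -1, 1]
W = [[a1[i] * b1[j] + a2[i] * b2[j] for j in range(5)] for i in range(6)]

U, V = low_rank_factors(W, rank=2)
print(max_abs_diff(W, matmul(U, V)))   # close to 0: rank 2 captures W
m, n, r = 6, 5, 2
print(m * n, r * (m + n))              # 30 22
```

For this 6 × 5 example the saving is modest (22 parameters instead of 30), but for a hypothetical 4096 × 4096 matrix at rank 64 the factors hold 64 × (4096 + 4096) = 524,288 parameters instead of 16,777,216, a 32× reduction, provided that rank keeps the approximation error acceptable.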

Low-rank techniques such as TensorGPT's TTD-based approach help adapt LLMs to resource-constrained environments with limited loss in performance. This makes it more practical to deploy advanced language models on edge devices and in other settings with limited computational resources.

Together, these techniques offer a range of strategies for dealing with the size and resource demands of Large Language Models (LLMs). From pruning and quantization to knowledge distillation and low-rank factorization, they make LLMs more accessible and reduce their environmental footprint.

Each approach reduces model size in its own way while aiming to preserve performance. Pruning removes redundant connections, quantization lowers numerical precision, knowledge distillation transfers a teacher's behavior to a smaller student, and low-rank factorization, as in TensorGPT's TTD-based approach, replaces large matrices with compact factors.

The key point is that model compression is not merely about reducing size but about building LLMs that are both resource-efficient and high-performing. These techniques make it practical to deploy advanced language models in settings with limited computational resources, and as they mature, they should make state-of-the-art language processing easier to integrate across a wide range of applications.