Introduction

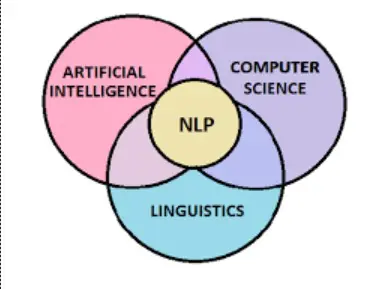

Natural Language Processing (NLP) is a fascinating field that bridges the gap between human language and computers. A crucial step in NLP is text preprocessing, which prepares raw text for analysis by cleaning and structuring it. This post covers three fundamental preprocessing techniques: tokenization, lemmatization, and stemming. Understanding these techniques is essential for anyone looking to work with textual data effectively.

Why Text Preprocessing is Important

Before diving into the techniques, it’s essential to understand why preprocessing is necessary:

- Noise Reduction: Raw text data often contains noise such as punctuation, special characters, numbers, and irrelevant words that can hinder analysis. Preprocessing helps remove this noise.

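- Standardization: Preprocessing maps different variants of the same content to one consistent form (for example, lowercasing so that “Text” and “text” are treated as the same word), which makes the data easier to work with.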

- Feature Extraction: Properly preprocessed text allows for more effective feature extraction, which is critical for machine learning and NLP tasks.
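To make noise reduction and standardization concrete, here is a minimal sketch using only Python’s standard library. The choice of what counts as noise (letter case, digits, ASCII punctuation) and the helper name `normalize` are assumptions for illustration; real pipelines pick these per task.

```python
import re
import string


def normalize(text: str) -> str:
    """Lowercase, drop digits and ASCII punctuation, and collapse whitespace."""
    text = text.lower()                      # standardization: a single letter case
    text = re.sub(r"\d+", " ", text)         # noise reduction: remove numbers
    # noise reduction: delete every character in string.punctuation
    text = text.translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())            # collapse runs of whitespace


sample = "NLP in 2024: It's GREAT!!!  (Really.)"
print(normalize(sample))  # nlp in its great really
```

Note the side effect on “It's”, which becomes “its”: stripping every apostrophe changes meaning, so which characters to treat as noise is a design decision rather than a fixed rule.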

Tokenization

What is Tokenization?

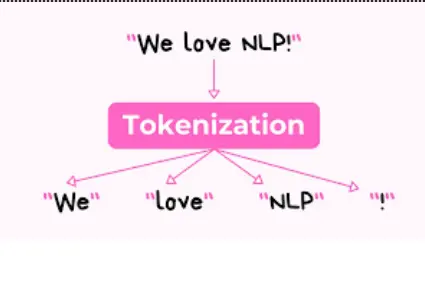

Tokenization is the process of splitting text into smaller units called tokens. Tokens can be words, phrases, or even individual characters. Tokenization is usually one of the first steps in text preprocessing and lays the foundation for further analysis.

Types of Tokenization

- Word Tokenization: Splitting text into individual words.

- Sentence Tokenization: Splitting text into individual sentences.

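- Subword Tokenization: Splitting words into smaller units (for example, “tokenization” into “token” and “ization”). This helps with rare or unseen words, compound words, and words with prefixes and suffixes, and it is the approach taken by algorithms such as Byte-Pair Encoding (BPE) and WordPiece.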
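A minimal sketch of how a subword tokenizer splits a single word, assuming a vocabulary already exists. It uses greedy longest-match-first segmentation, the strategy WordPiece (as used by BERT) applies when tokenizing, with continuation pieces marked by `##`. The vocabulary below is made up for this example, and the function name `subword_tokenize` is hypothetical; real subword vocabularies are learned from a corpus, which this sketch does not cover.

```python
def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match-first segmentation in the style of WordPiece.

    Pieces that continue a word carry a "##" prefix. If some part of the
    word cannot be matched, the whole word becomes ["[UNK]"].
    """
    pieces: list[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it
        while end > start:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return ["[UNK]"]
        pieces.append(match)
        start = end
    return pieces


# Toy vocabulary, invented for illustration
vocab = {"un", "happy", "happi", "##happi", "##ness", "##ly", "##s", "play", "##ing"}

print(subword_tokenize("unhappiness", vocab))  # ['un', '##happi', '##ness']
print(subword_tokenize("playing", vocab))      # ['play', '##ing']
```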

How Tokenization Works

Tokenizers typically use delimiters like spaces and punctuation marks to identify boundaries between tokens. More advanced tokenizers can handle complex cases like contractions and hyphenated words.
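The difference between splitting on spaces alone and treating punctuation as a delimiter can be sketched with a regular expression. The pattern below is an assumption for illustration, not a standard: it keeps inner hyphens and apostrophes inside a word (“state-of-the-art”, “Don't”) and makes every other non-space symbol its own token.

```python
import re

# Hypothetical pattern: a word may contain inner hyphens or apostrophes;
# any other non-space, non-word character is a separate token.
TOKEN_RE = re.compile(r"\w+(?:[-']\w+)*|[^\w\s]")


def regex_tokenize(text: str) -> list[str]:
    return TOKEN_RE.findall(text)


text = "Don't split state-of-the-art models, please."

print(text.split())         # punctuation stays glued to words: 'models,' and 'please.'
print(regex_tokenize(text))  # ["Don't", 'split', 'state-of-the-art', 'models', ',', 'please', '.']
```

Keeping “Don't” whole is a choice; NLTK’s Treebank-style `word_tokenize` instead splits it into “Do” and “n't”. Which convention is better depends on what the downstream steps expect.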

Examples and Code Snippets

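Here’s how you can perform tokenization using the NLTK library in Python. Both tokenizers rely on NLTK’s pretrained Punkt sentence models, which have to be downloaded once:

```python
import nltk
from nltk.tokenize import sent_tokenize, word_tokenize

# One-time download of the Punkt models used by both tokenizers.
# Newer NLTK releases look for "punkt_tab"; older ones use "punkt".
nltk.download("punkt", quiet=True)
nltk.download("punkt_tab", quiet=True)

text = "Natural Language Processing (NLP) is a fascinating field. It bridges human language and computers."

word_tokens = word_tokenize(text)
sentence_tokens = sent_tokenize(text)

print("Word Tokens:", word_tokens)
print("Sentence Tokens:", sentence_tokens)
```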

Lemmatization

What is Lemmatization?

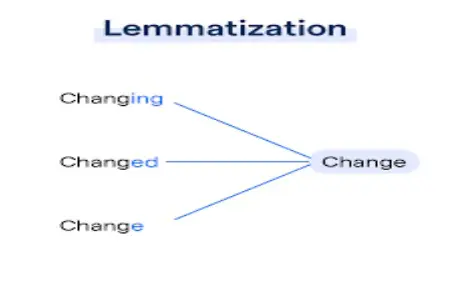

Lemmatization is the process of reducing words to their base or dictionary form, known as a lemma. Unlike stemming, which chops off word endings by rule, lemmatization looks words up in a vocabulary and takes their part of speech into account, so it returns meaningful base forms.

How Lemmatization Works

Lemmatizers use a vocabulary and morphological analysis of words to remove inflections and return the base or dictionary form of a word. For example, when treated as verbs, “running” and “ran” are both reduced to the lemma “run”. The part of speech matters: read as a noun, “running” is already its own lemma.
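The lookup-plus-rules idea can be sketched with a toy lexicon. This is loosely modeled on the approach of WordNet’s `morphy` (check an exception list for irregular forms, strip inflectional suffixes by rule, keep only candidates the dictionary knows), but the tiny lexicon, rule lists, and the `lemmatize` function here are all hypothetical. Part-of-speech letters follow WordNet’s convention (`n`, `v`, `a`).

```python
# Toy data, invented for illustration; a real lemmatizer uses a full dictionary.
EXCEPTIONS = {("ran", "v"): "run", ("mice", "n"): "mouse", ("better", "a"): "good"}
LEXICON = {("run", "v"), ("walk", "v"), ("running", "n"), ("cat", "n"), ("mouse", "n"), ("good", "a")}
SUFFIXES = {"n": ["s"], "v": ["ing", "ed", "s"]}


def lemmatize(word: str, pos: str = "n") -> str:
    """Return the lemma of `word` for part of speech `pos`, or `word` if unknown."""
    word = word.lower()
    # 1. Irregular forms are looked up directly
    if (word, pos) in EXCEPTIONS:
        return EXCEPTIONS[(word, pos)]
    # 2. The word may already be a dictionary form for this part of speech
    if (word, pos) in LEXICON:
        return word
    # 3. Strip inflectional suffixes, accepting only candidates in the lexicon
    for suffix in SUFFIXES.get(pos, []):
        if word.endswith(suffix):
            stem = word[: -len(suffix)]
            candidates = [stem]
            if len(stem) >= 2 and stem[-1] == stem[-2]:
                candidates.append(stem[:-1])  # undo doubling: "runn" -> "run"
            for candidate in candidates:
                if (candidate, pos) in LEXICON:
                    return candidate
    return word


print(lemmatize("running", "v"), lemmatize("ran", "v"), lemmatize("runs", "v"))  # run run run
print(lemmatize("running", "n"))  # running
```

Because every candidate must pass the dictionary check, the output is always either a known lemma or the input unchanged, which is why lemmatizers avoid the non-words that stemmers can produce.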

Lemmatization vs. Stemming

Lemmatization is usually more accurate than stemming because its output is a real word: a lemmatizer returns a dictionary form (or the word unchanged if it is not in its dictionary), whereas a stemmer applies its rules blindly and can produce strings that are not words at all.

Examples and Code Snippets

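Here’s how you can perform lemmatization using the NLTK library in Python. `WordNetLemmatizer` treats every word as a noun unless you pass a part of speech, so this example passes `pos="v"` to lemmatize the words as verbs:

```python
import nltk
from nltk.stem import WordNetLemmatizer

# One-time download of the WordNet data (some NLTK versions also need omw-1.4)
nltk.download("wordnet", quiet=True)
nltk.download("omw-1.4", quiet=True)

lemmatizer = WordNetLemmatizer()
words = ["running", "ran", "runs", "easily", "fairly"]

# With the default pos="n", "running" and "ran" would come back unchanged.
# "easily" and "fairly" are adverbs, not verbs, so they are returned as-is.
lemmatized_words = [lemmatizer.lemmatize(word, pos="v") for word in words]

print("Lemmatized Words:", lemmatized_words)
```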
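Since `WordNetLemmatizer.lemmatize` assumes a noun unless its `pos` argument says otherwise, the part of speech usually comes from a tagger in practice. NLTK’s `pos_tag` emits Penn Treebank tags such as `VBG` or `JJ`, while WordNet expects single letters (`n`, `v`, `a`, `r`). A small mapping helper bridges the two; the name `penn_to_wordnet` is hypothetical and this is a sketch, not part of NLTK.

```python
def penn_to_wordnet(tag: str) -> str:
    """Map a Penn Treebank tag (e.g. 'VBG') to a WordNet POS letter.

    Anything that is not an adjective, verb, or adverb falls back to 'n',
    the same default WordNetLemmatizer uses.
    """
    if tag.startswith("J"):
        return "a"  # adjectives: JJ, JJR, JJS
    if tag.startswith("V"):
        return "v"  # verbs: VB, VBD, VBG, VBN, VBP, VBZ
    if tag.startswith("R"):
        return "r"  # adverbs: RB, RBR, RBS
    return "n"      # nouns and everything else


print(penn_to_wordnet("VBG"), penn_to_wordnet("JJ"), penn_to_wordnet("NNS"))  # v a n
```

With NLTK installed and its tagger resource downloaded (the resource name varies between NLTK versions), the helper slots in as `[lemmatizer.lemmatize(w, penn_to_wordnet(t)) for w, t in nltk.pos_tag(tokens)]`.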

Stemming

What is Stemming?

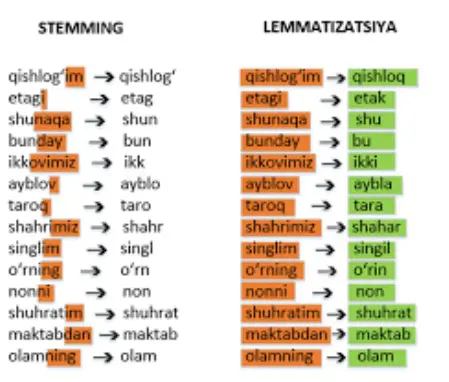

Stemming is the process of reducing words to a stem by stripping affixes; most algorithms remove only suffixes. It is a heuristic process, and the resulting stem is often not a valid English word: the Porter stemmer, for instance, reduces “ponies” to “poni”.

How Stemming Works

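Stemmers apply a fixed set of rules that rewrite or remove word endings, without consulting a dictionary. This makes stemming simpler and faster than lemmatization but less accurate: it misses irregular forms such as “ran” and can conflate unrelated words that happen to share a stem.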
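To make “a set of rules” concrete, here is Step 1a of Martin Porter’s original 1980 algorithm, which handles plural endings. It is only the first of several steps (later steps also check the shape of the remaining stem before firing), so treat this as an illustration of the rule style rather than a working stemmer. The function name is hypothetical.

```python
def porter_step_1a(word: str) -> str:
    """Step 1a of the Porter stemmer: rewrite plural suffixes.

    Rules are tried in order, and only the first matching rule fires.
    """
    rules = [("sses", "ss"), ("ies", "i"), ("ss", "ss"), ("s", "")]
    for suffix, replacement in rules:
        if word.endswith(suffix):
            return word[: -len(suffix)] + replacement
    return word


for w in ["caresses", "ponies", "caress", "cats"]:
    print(w, "->", porter_step_1a(w))
# caresses -> caress
# ponies -> poni
# caress -> caress
# cats -> cat
```

The `ss -> ss` rule looks pointless, but its position in the list stops “caress” from falling through to the final `s` rule and losing a letter. The “ponies” case shows how purely rule-based output ends up as a non-word.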

Common Stemming Algorithms

- Porter Stemmer: One of the oldest and most widely used stemming algorithms.

- Lancaster Stemmer: A more aggressive stemming algorithm compared to the Porter Stemmer.

- Snowball Stemmer: A revised version of the Porter Stemmer (its English variant is often called “Porter2”), written in Martin Porter’s Snowball language and available for many languages besides English.

Examples and Code Snippets

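Here’s how you can perform stemming using the NLTK library in Python:

```python
from nltk.stem import LancasterStemmer, PorterStemmer, SnowballStemmer

# Stemmers are purely rule-based, so no data download is needed
porter = PorterStemmer()
lancaster = LancasterStemmer()
snowball = SnowballStemmer("english")

words = ["running", "ran", "runs", "easily", "fairly"]

porter_stemmed = [porter.stem(word) for word in words]
lancaster_stemmed = [lancaster.stem(word) for word in words]
snowball_stemmed = [snowball.stem(word) for word in words]

print("Porter Stemmed Words:", porter_stemmed)
print("Lancaster Stemmed Words:", lancaster_stemmed)
print("Snowball Stemmed Words:", snowball_stemmed)
```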

Comparison of Lemmatization and Stemming

- Accuracy: Lemmatization is more accurate because it uses a dictionary and the word’s part of speech, and it returns valid words. Stemming can produce non-existent words and can conflate unrelated ones.

- Speed: Stemming is faster because it only applies string-rewriting rules. Lemmatization is slower because it requires a dictionary lookup and, for best results, a part-of-speech tag for each word.

- Use Cases: Lemmatization is preferred for tasks requiring precise language understanding, such as chatbots and machine translation. Stemming suits search engines, where speed is crucial and matching different forms of a query term (for example, finding “runs” for the query “running”) matters more than producing exact word forms.
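To illustrate why stemming helps search, here is a toy inverted index built twice: once on exact words and once on stems. `crude_stem` is a deliberately simple, hypothetical suffix stripper standing in for a real stemmer such as Porter’s, and the three documents are made up.

```python
from collections import defaultdict
from typing import Callable


def crude_stem(word: str) -> str:
    """Hypothetical suffix stripper for this demo; not the Porter algorithm."""
    for suffix in ("ing", "ed", "s"):
        # Only strip if at least three letters remain
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            stem = word[: -len(suffix)]
            # Undo a doubled final consonant ("runn" -> "run"), except l, s, z
            if len(stem) >= 2 and stem[-1] == stem[-2] and stem[-1] not in "aeioulsz":
                stem = stem[:-1]
            return stem
    return word


def identity(word: str) -> str:
    return word


def build_index(docs: dict[int, str], normalize: Callable[[str], str]) -> dict[str, set[int]]:
    """Map each normalized term to the set of document ids that contain it."""
    index: dict[str, set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            index[normalize(token)].add(doc_id)
    return index


def search(index: dict[str, set[int]], query: str, normalize: Callable[[str], str]) -> list[int]:
    """Return the sorted ids of documents containing the normalized query term."""
    return sorted(index.get(normalize(query.lower()), set()))


# Made-up example documents
docs = {1: "She runs every morning", 2: "Running shoes on sale", 3: "The river ran dry"}

exact_index = build_index(docs, identity)
stemmed_index = build_index(docs, crude_stem)

print(search(exact_index, "running", identity))      # [2]
print(search(stemmed_index, "running", crude_stem))  # [1, 2]
```

Stemming lets the query “running” also find document 1 (“runs”), which is the recall gain search engines are after. Document 3 (“ran”) is still missed, because no suffix rule maps an irregular form to its base; that is exactly the kind of case a lemmatizer handles.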

Conclusion

Text preprocessing is a vital step in NLP, setting the stage for more advanced analysis. Tokenization breaks text into manageable pieces, lemmatization maps words to their dictionary forms, and stemming trims words to rule-based stems. Each technique has its strengths and applications, and understanding them is crucial for any NLP practitioner. By mastering these preprocessing techniques, you can ensure that your textual data is clean, consistent, and ready for analysis.