Pretrained LLMs are general-purpose language models trained on a broad spectrum of text with self-supervised objectives, such as predicting the next token (GPT-style models) or recovering masked tokens (BERT-style models). While they possess a general understanding of language, they usually need fine-tuning to perform specific NLP tasks effectively. The reasons for fine-tuning include:

- Task-Specific Performance

- Domain Adaptation

- Data Efficiency

Strategies for Fine-Tuning LLMs: A Deep Dive

Selecting the Right Pretrained Model

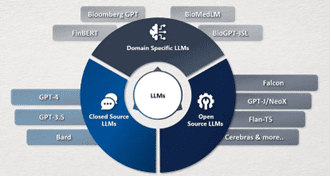

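The first step is choosing a pretrained LLM whose size, architecture, and pretraining data suit your task. Models like BERT, GPT-3, RoBERTa, and T5 come in various sizes and are pretrained on different datasets. Encoder-only models such as BERT and RoBERTa are a common choice for classification and extraction, while decoder-only models such as GPT-3 and encoder-decoder models such as T5 are better suited to text generation. Larger models tend to perform better but cost more to fine-tune and serve.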

Data Preparation

You need to preprocess your task-specific data to match the format that the pretrained model expects. This may include tokenization, padding, and data augmentation if your dataset is small.
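As a rough illustration of tokenization and padding, here is a minimal sketch. It uses a toy whitespace tokenizer as a stand-in; in practice you would use the tokenizer that ships with your pretrained model, since the model expects its own vocabulary. The names `build_vocab` and `encode_batch` are hypothetical helpers introduced for this example.

```python
from typing import Dict, List, Tuple

PAD, UNK = "[PAD]", "[UNK]"

def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Map each lowercase whitespace token to an integer id (toy vocabulary)."""
    vocab = {PAD: 0, UNK: 1}
    for text in texts:
        for token in text.lower().split():
            vocab.setdefault(token, len(vocab))
    return vocab

def encode_batch(
    texts: List[str], vocab: Dict[str, int], max_len: int
) -> Tuple[List[List[int]], List[List[int]]]:
    """Tokenize, truncate to max_len, and right-pad every example."""
    input_ids, attention_mask = [], []
    for text in texts:
        ids = [vocab.get(tok, vocab[UNK]) for tok in text.lower().split()][:max_len]
        n_pad = max_len - len(ids)
        input_ids.append(ids + [vocab[PAD]] * n_pad)
        # 1 marks a real token, 0 marks padding the model should ignore.
        attention_mask.append([1] * len(ids) + [0] * n_pad)
    return input_ids, attention_mask

texts = ["the contract is void", "payment due"]
vocab = build_vocab(texts)
input_ids, attention_mask = encode_batch(texts, vocab, max_len=5)
print(input_ids)       # [[2, 3, 4, 5, 0], [6, 7, 0, 0, 0]]
print(attention_mask)  # [[1, 1, 1, 1, 0], [1, 1, 0, 0, 0]]
```

The attention mask matters as much as the padding itself: without it, the model would treat padding ids as real input.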

Hyperparameter Tuning

Fine-tuning often requires adjusting hyperparameters such as learning rate, batch size, and the number of training epochs. Experimentation is crucial to find the right combination for your task.
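One simple way to organize that experimentation is a grid search. The sketch below assumes you have some function that fine-tunes a model with a given configuration and returns its validation loss; `fake_train_and_evaluate` is a made-up stand-in for that function so the example runs on its own, and the grid values are illustrative rather than recommendations.

```python
import itertools
from typing import Callable, Dict, Tuple

def grid_search(
    train_and_evaluate: Callable[..., float], grid: Dict[str, list]
) -> Tuple[dict, float]:
    """Try every combination in the grid and keep the lowest validation loss."""
    best_config, best_loss = None, float("inf")
    names = list(grid)
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        loss = train_and_evaluate(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss

def fake_train_and_evaluate(learning_rate: float, batch_size: int, epochs: int) -> float:
    """Hypothetical stand-in: a real version would fine-tune and return validation loss."""
    return abs(learning_rate - 3e-5) * 1e4 + abs(batch_size - 16) / 16 + abs(epochs - 3)

grid = {
    "learning_rate": [1e-5, 3e-5, 5e-5],
    "batch_size": [16, 32],
    "epochs": [2, 3, 4],
}
best_config, best_loss = grid_search(fake_train_and_evaluate, grid)
print(best_config)  # {'learning_rate': 3e-05, 'batch_size': 16, 'epochs': 3}
```

A full grid grows multiplicatively (18 runs here), so with expensive models a random or staged search over a smaller set of values is often more practical.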

Loss Function Selection

Depending on the task, you may need to choose an appropriate loss function. For instance, cross-entropy loss is commonly used for classification tasks, while mean squared error may be used for regression tasks.
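To make the two losses concrete, here is a small dependency-free sketch of both. Deep learning frameworks provide optimized versions; these functions only illustrate what is being computed for a single example (cross-entropy) and a small batch (mean squared error).

```python
import math
from typing import List, Sequence

def cross_entropy(logits: List[float], target: int) -> float:
    """Negative log-probability of the target class under softmax(logits)."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]

def mean_squared_error(predictions: Sequence[float], targets: Sequence[float]) -> float:
    """Average squared difference between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

# Classification: three classes, the correct class is index 0.
ce = cross_entropy([2.0, 0.5, -1.0], target=0)

# Regression: two predictions against two targets.
mse = mean_squared_error([2.5, 0.0], [3.0, 1.0])
print(mse)  # 0.625
```

Cross-entropy shrinks toward zero as the model puts more probability on the correct class, while mean squared error penalizes large numeric misses more heavily than small ones.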

Layer Freezing

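Depending on your task, you can choose to freeze some layers of the LLM during fine-tuning, so that their weights are not updated. A common pattern is to freeze the embeddings and lower layers, which capture general language features, and train only the upper layers and the task-specific head. This can be especially useful when you have a limited amount of task-specific data, because fewer trainable parameters means less risk of overfitting and less compute.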
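The sketch below shows one way to decide which parameters to freeze based on their names. The parameter names are illustrative, loosely patterned after common encoder checkpoints, and `plan_freezing` is a hypothetical helper, not part of any library.

```python
import re
from typing import Dict, List

def plan_freezing(param_names: List[str], freeze_up_to_layer: int) -> Dict[str, bool]:
    """Return {name: trainable}.

    Embeddings and encoder layers with index < freeze_up_to_layer are frozen;
    everything else (upper layers, task head) stays trainable.
    """
    plan = {}
    for name in param_names:
        match = re.search(r"layer\.(\d+)\.", name)
        if "embeddings" in name:
            plan[name] = False
        elif match and int(match.group(1)) < freeze_up_to_layer:
            plan[name] = False
        else:
            plan[name] = True
    return plan

# Illustrative parameter names (assumed, not taken from a specific model).
names = [
    "embeddings.word_embeddings.weight",
    "encoder.layer.0.attention.query.weight",
    "encoder.layer.1.attention.query.weight",
    "encoder.layer.2.attention.query.weight",
    "classifier.weight",
]
plan = plan_freezing(names, freeze_up_to_layer=2)
trainable = [name for name, keep in plan.items() if keep]
print(trainable)
# ['encoder.layer.2.attention.query.weight', 'classifier.weight']
```

In PyTorch, for example, a plan like this could be applied by iterating over `model.named_parameters()` and setting `requires_grad = False` on each parameter marked as frozen.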

Early Stopping

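Implement early stopping to prevent overfitting. Monitor the validation loss during training and stop when it has not improved for a set number of evaluations (the "patience"), then keep the checkpoint with the best validation loss. Stopping at the very first increase is usually too aggressive, because validation loss tends to fluctuate from one evaluation to the next.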
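A minimal sketch of early stopping with a patience counter might look like this. The `EarlyStopping` class and the validation losses are made up for illustration; many training libraries ship their own equivalent.

```python
class EarlyStopping:
    """Signal a stop after `patience` evaluations without improvement."""

    def __init__(self, patience: int = 2, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta  # smallest decrease that counts as improvement
        self.best_loss = float("inf")
        self.bad_evals = 0

    def should_stop(self, val_loss: float) -> bool:
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.bad_evals = 0
        else:
            self.bad_evals += 1
        return self.bad_evals >= self.patience

# Made-up validation losses, one per epoch.
val_losses = [0.90, 0.70, 0.65, 0.66, 0.68, 0.64]
stopper = EarlyStopping(patience=2)
stopped_at = None
for epoch, loss in enumerate(val_losses):
    if stopper.should_stop(loss):
        stopped_at = epoch
        break

print(stopped_at, stopper.best_loss)  # 4 0.65
```

Note that in this run the loss would have improved again at epoch 5. A larger patience trades extra training time for a lower chance of stopping just before such a recovery.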

Transfer Learning

Leverage transfer learning to fine-tune models across multiple related tasks. This can save time and resources by reusing knowledge from previous tasks.

Ensemble Learning

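You can combine multiple fine-tuned LLMs to create an ensemble model, for example by averaging their predicted probabilities or taking a majority vote. This often leads to better performance, at the cost of running every member model at inference time. Each model in the ensemble may be fine-tuned for a different aspect of the task.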
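As one simple way to combine models, the sketch below averages class probabilities across ensemble members (sometimes called soft voting). The probabilities are invented for the example; in practice each inner list would come from a different fine-tuned model scoring the same input.

```python
from typing import List

def average_probabilities(per_model_probs: List[List[float]]) -> List[float]:
    """Average the class probabilities predicted by each model."""
    n_models = len(per_model_probs)
    n_classes = len(per_model_probs[0])
    return [
        sum(probs[c] for probs in per_model_probs) / n_models
        for c in range(n_classes)
    ]

def ensemble_predict(per_model_probs: List[List[float]]) -> int:
    """Return the class index with the highest averaged probability."""
    avg = average_probabilities(per_model_probs)
    return max(range(len(avg)), key=avg.__getitem__)

# Three hypothetical models scoring one example over two classes.
probs = [[0.6, 0.4], [0.3, 0.7], [0.2, 0.8]]
avg = average_probabilities(probs)
prediction = ensemble_predict(probs)
print(prediction)  # 1
```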

Data Augmentation Techniques for Effective Fine-Tuning

Back-Translation

Translating your text data into another language and then back to the original language can introduce textual variations and generate additional training examples.

Text Generation

Using your pretrained LLM to generate new text based on your existing data can expand your dataset with semantically similar content.

Synonym Replacement

Replacing words or phrases with synonyms can create variations in the training data.
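Here is a minimal sketch of synonym replacement. The `SYNONYMS` table is a tiny hand-written example; a real pipeline would draw synonyms from a thesaurus or embedding neighbors and would need to check that replacements preserve the label.

```python
import random
from typing import Dict, List

def synonym_replace(
    text: str, synonyms: Dict[str, List[str]], rate: float, rng: random.Random
) -> str:
    """Replace each word that has known synonyms with probability `rate`."""
    out = []
    for word in text.split():
        options = synonyms.get(word.lower())
        if options and rng.random() < rate:
            out.append(rng.choice(options))
        else:
            out.append(word)
    return " ".join(out)

# Hand-written synonym table, for illustration only.
SYNONYMS = {"quick": ["fast", "rapid"], "help": ["assist", "aid"]}

rng = random.Random(0)  # seeded so augmentation is reproducible
original = "a quick reply can help customers"
augmented = synonym_replace(original, SYNONYMS, rate=1.0, rng=rng)
print(augmented)
```

Lower values of `rate` keep the augmented text closer to the original, which reduces the chance of changing its meaning.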

Sentence Shuffling

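Shuffling the order of words or sentences in a document can help the model learn to generalize better. Sentence-level shuffling is the safer of the two for tasks like topic classification, where sentence order carries little signal. Word-level shuffling breaks grammar, so use it sparingly and avoid it for tasks that depend on word order.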
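A sentence-level shuffle can be sketched in a few lines. The sentence splitter below is a naive regular expression that assumes sentences end in `.`, `!`, or `?` followed by whitespace; it will mis-split abbreviations, so treat it as a placeholder for a proper sentence segmenter.

```python
import random
import re

def shuffle_sentences(document: str, rng: random.Random) -> str:
    """Split a document into sentences and return them in random order."""
    # Naive split: break after ., !, or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", document.strip()) if s]
    rng.shuffle(sentences)
    return " ".join(sentences)

doc = "The model loads. It trains for three epochs. Then it is evaluated."
shuffled = shuffle_sentences(doc, random.Random(42))
print(shuffled)
```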

Domain Adaptation: Tailoring LLMs for Specialized Tasks

Specialized Vocabulary

Introduce domain-specific vocabulary and terminology into the training data to help the model understand and generate domain-specific content.

Preprocessing Rules

Develop custom preprocessing rules to handle domain-specific text, such as legal documents, medical reports, or financial statements.

Transfer Learning from Related Domains

If you have limited domain-specific data, you can transfer knowledge from a related domain and then fine-tune for your target domain.

Multimodal Fine-Tuning: Beyond Text

Data Fusion

Combine textual and non-textual data, such as images or audio, in a way that allows the model to learn from and generate content across modalities.

Preprocessing for Non-Text Data

For images or audio, preprocessing steps like feature extraction and alignment with text may be necessary.

Model Architectures

Different LLM architectures are emerging to handle multimodal data, and selecting the right architecture is crucial.

Fine-Tuning for Low-Resource Languages: Overcoming Challenges

Cross-Lingual Transfer Learning

Leverage models pretrained on high-resource languages, or on many languages at once, to improve performance on low-resource languages.

Data Generation

Employ techniques like parallel text collection and machine translation to generate training data for low-resource languages.

Transfer of Linguistic Features

Using linguistic features shared across languages can aid fine-tuning on languages with limited data.

Addressing Bias and Fairness in Fine-Tuned Models

Bias Evaluation

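Conduct bias assessments to identify biases in the fine-tuned model, for example by comparing its outputs on inputs that differ only in a demographic attribute. The findings then guide mitigation.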

Fairness Metrics

Define and measure fairness metrics, such as the gap in positive-prediction rates or error rates between groups, to ensure that the model’s outputs are not unfairly biased against certain groups or demographics.
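As one example of a fairness metric, the sketch below computes the demographic parity difference: the gap between the highest and lowest positive-prediction rate across groups. The predictions and group labels are invented, and this is only one of several fairness definitions, which can conflict with each other.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence

def positive_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of examples predicted positive (label 1) within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(predictions: Sequence[int], groups: Sequence[Hashable]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Made-up model predictions for two groups, "a" and "b".
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, groups)
print(positive_rates(preds, groups))  # {'a': 0.75, 'b': 0.25}
print(gap)                            # 0.5
```

A gap of 0 means every group receives positive predictions at the same rate. What counts as an acceptable gap depends on the application.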

Data Cleaning and Augmentation

Careful data curation, cleaning, and augmentation can help mitigate bias in training data.

Interpretability and Explainability: Unraveling LLM Decisions

Attention Maps

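Visualize attention maps in LLMs to see which parts of the input the model attends to when making a decision. Treat them as a diagnostic aid rather than a complete explanation, since high attention weight does not always mean a token drove the prediction.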

Explanation Methods

Use model-agnostic explanation methods like LIME or SHAP to generate interpretable explanations for LLM predictions. Both attribute a prediction to individual input features, such as tokens.

Domain-Specific Metrics

Define domain-specific interpretability metrics that align with the needs of the application.

Ongoing Model Maintenance: Ensuring Long-Term Effectiveness

Continual Data Collection

Keep collecting and annotating new data so that the model stays relevant and effective.

Regular Re-Fine-Tuning

Re-fine-tune the model periodically so that it adapts to evolving data distributions and task requirements.

Versioning and Deployment

Follow proper versioning and deployment procedures so that models can be updated, and rolled back if needed, without disrupting production systems.

Challenges and Considerations in Fine-Tuning LLMs

Data Annotation

Collecting and annotating task-specific data can be time-consuming and costly. This is a critical step in the fine-tuning process.

Data Imbalance

Imbalanced datasets can lead to biased models. Careful sampling and weighting of the training data are necessary to address this issue.
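One common weighting heuristic is to weight each class inversely to its frequency, so that rare classes contribute as much to the loss as frequent ones. The sketch below is a minimal version with made-up labels; the resulting weights would typically be passed to a weighted loss function or used as sampling probabilities.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence

def inverse_frequency_weights(labels: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {label: n_samples / (n_classes * count) for label, count in counts.items()}

# Made-up dataset: 8 negative examples, 2 positive examples.
labels = ["neg"] * 8 + ["pos"] * 2
weights = inverse_frequency_weights(labels)
print(weights)  # {'neg': 0.625, 'pos': 2.5}
```

With these weights each class carries the same total weight (8 × 0.625 = 2 × 2.5 = 5), so the minority class is no longer drowned out during training.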

Ethical Concerns

Fine-tuning on biased or inappropriate data can lead to models that generate biased or harmful outputs. Ethical considerations should guide the selection and usage of data.

Resource Requirements

Fine-tuning large LLMs can be computationally expensive and may require access to powerful hardware and infrastructure.

Fine-Tuning as a Dynamic Process in NLP Advancements

Fine-tuning LLMs is a powerful technique for adapting these pretrained models to specific NLP tasks. By following the right strategies and taking into account the challenges, you can harness the full potential of LLMs to achieve state-of-the-art results in various NLP applications. It’s important to remember that fine-tuning is not a one-size-fits-all process, and experimentation is key to finding the best approach for your specific task. As the field of NLP continues to advance, fine-tuning will remain a vital tool for customizing LLMs to meet the ever-evolving needs of language-related tasks.