AI breakthroughs and shifting customer behavior are rewriting the boundaries of what’s possible and what’s expected in financial services. With these forces at play, success depends on a firm’s ability to adapt. Generative AI for financial services now plays a critical, practical role in the core operations of banks, asset managers and insurance providers. And with compelling stories of results-driven agentic AI for financial services coming out of AWS re:Invent, it's fair to say that we're going to see more AI in financial services, not less.

Executives and technology leaders now see AI in financial services as essential for rethinking outdated processes, improving decision-making, managing risk, and meeting rising client expectations. As the industry embraces this shift, the organizations that leverage AI effectively will set the pace for innovation and operational excellence in the years ahead.

What is generative AI and why does finance need it?

Generative AI describes AI models that create new content, whether that’s text, numbers, images or ideas. In finance, these systems are trained on large, complex financial data sets and can detect hidden patterns and relationships. This makes them especially useful in an industry as highly regulated and data-reliant as finance.

For instance, here are some critical tasks that AI can take on in finance:

- Automation of complex tasks: Functions like loan review, fraud detection, or customer onboarding that used to take days now run in minutes.

- Sharper decision-making: AI models analyze trends and risks at scale, augmenting analysts, portfolio managers, and underwriters.

- New product development: AI can design customized investment plans or tailor insurance offerings at the level of the individual client.

How is generative AI impacting financial services?

Several operations in finance that were once manual and time-consuming are now streamlined, scalable and insight-driven.

Fraud detection and risk management

Banks and payment firms face constant threats from fraud and operational risk. AI models can spot abnormal activity across millions of transactions, flagging fraud in real time and learning from new patterns as they emerge. Over time, this leads to fewer false alarms and a stronger risk posture.
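To make the idea of spotting abnormal activity concrete, here is a minimal sketch in Python. It is not how a production fraud model works: it swaps in a simple robust statistic (the modified z-score, based on the median and median absolute deviation) for a learned model, and the function name, threshold and sample amounts are all assumptions made for illustration.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds the threshold.

    Illustrative only: real fraud systems use many features and learned
    models, not a single statistic on the transaction amount.
    """
    med = median(amounts)
    # Median absolute deviation: a spread measure that outliers barely move.
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # No spread to measure against, so nothing is flagged.
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical card transactions for one account; the last one stands out.
amounts = [20, 25, 22, 19, 24, 21, 5000]
flagged = flag_anomalies(amounts)  # [6]
```

A median-based score is used here because a single very large transaction would distort a mean and standard deviation enough to hide itself.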

Personalized financial advice

Financial advisors and robo-advisors are now using AI to craft highly personalized portfolios and give tailored recommendations. This helps more clients receive high-level advisory services, even if they aren’t ultra-high-net-worth.

Streamlined compliance and reporting

Compliance and regulatory reporting have always been resource-intensive. Generative AI can automate report generation, monitor transactions for anomalies, and help institutions keep pace with complex, shifting regulations, all while reducing errors and manual workloads.

Better customer service

AI chatbots that run 24/7 can now answer routine client questions instantly and escalate high-value or complex requests to human agents. Over time, every interaction helps the system learn and respond better, lifting overall customer satisfaction.

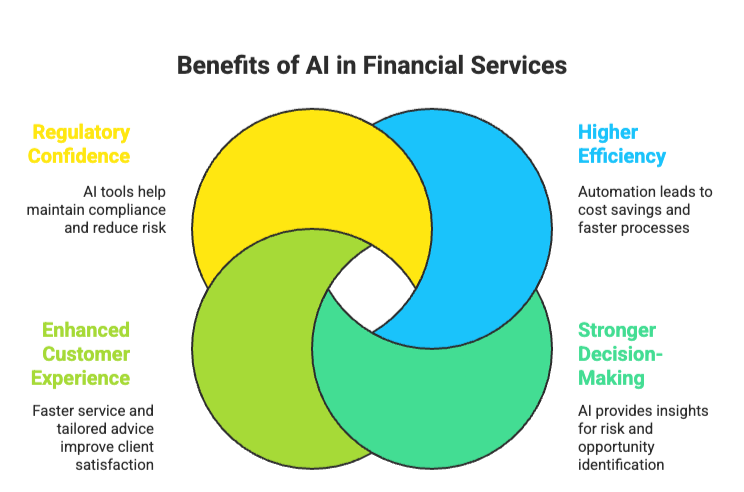

What are the key benefits of AI for financial services?

- Higher efficiency: More automation means lower costs, faster turnaround, and freed-up human talent for higher-value work.

- Stronger decision-making: AI surfaces insights you might have missed, helping you spot risks and opportunities early.

- Enhanced customer experience: Clients enjoy faster service, tailored advice, and consistent support.

- Regulatory confidence: Modern AI tools reduce risk and help institutions stay compliant.

What are some real-world applications of AI for financial services?

Many financial organizations work with leading Gen AI development companies like GoML to turn AI into tangible business value. Here are a few examples:

1. AI-powered document querying chatbot

Corbin Capital needed a smarter, faster way to analyze large volumes of financial documents. GoML built a generative AI-powered chatbot that now helps staff find answers in seconds, making research, audit and compliance processes much more efficient. This solution not only speeds up day-to-day operations but also reduces the margin for error when handling sensitive information.

2. AI-powered insurance claims automation at a major IT services provider

For one of the world’s largest IT service companies, GoML created a claims settlement solution powered by advanced language models. Built with tools like Claude-v2 and Amazon Bedrock, the system automates the extraction and processing of insurance documents. The results include a jump in straight-through claim processing and a clear reduction in manual support costs. It shows AI for financial services in action, making insurance operations quicker and more accurate.

3. Automated investment intelligence

Venture capital and private equity funds often need fast, reliable insight into potential investments. GoML partnered with VantagePoint Fund to develop Addy, an AI solution powered by GPT-4. Addy pulls insights from databases, podcasts, and expert interviews to answer detailed queries about scaling, risk, and opportunity in AI startups. The result is quicker access to relevant information and improved decision-making for fund managers and analysts.

2026: The year of AI agents in financial services

Banks and insurers on AWS are laying the groundwork for agents that complete tasks end-to-end. Clean data. Strong access controls. Clear governance. Fast ML pipelines. These foundations let teams run agents with confidence inside regulated ecosystems.

Many GoML clients are already moving in this direction. A simple document-processing flow today can evolve into an autonomous claims or onboarding pipeline. The architecture is already flexible enough to support memory, multi-step logic and controlled tool calls. The transition is no longer theoretical.

Leveraging a mesh of reusable agents

In one prominent innovation talk session, Allianz Technology explained how they built a model-agnostic, multi-layer framework that avoids the classic trap: one bot per use case. That pattern works at first, then collapses under its own complexity.

Allianz moved to a Gen AI mesh, using:

- Reusable agents

- A marketplace-like registry

- Clear orchestration layers

- Plug-and-play components

- Full observability

- Audit trails that regulators can trust

This stops agent proliferation and keeps the environment scalable.
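A rough sketch of the registry idea, in Python, may help. It is not Allianz's framework, whose internals are not described here. Every name in it (`AgentRegistry`, `triage`, `fraud_check`, the claim fields and the thresholds) is hypothetical. The point is only that agents are registered once, looked up by name, and composed into pipelines that record which agent ran.

```python
from typing import Callable, Dict, List

# Hypothetical agent contract: take a case dict, return an updated case dict.
Agent = Callable[[dict], dict]


class AgentRegistry:
    """Toy registry: agents are registered once and reused across pipelines."""

    def __init__(self) -> None:
        self._agents: Dict[str, Agent] = {}

    def register(self, name: str, agent: Agent) -> None:
        if name in self._agents:
            raise ValueError(f"agent already registered: {name}")
        self._agents[name] = agent

    def run_pipeline(self, names: List[str], case: dict) -> dict:
        trail = []
        for name in names:
            # Each agent gets a copy, so the caller's input is never mutated.
            case = self._agents[name](dict(case))
            trail.append(name)
        # The trail is a minimal stand-in for real observability.
        return {**case, "trail": trail}


def triage(case: dict) -> dict:
    case["priority"] = "high" if case["amount"] > 10_000 else "normal"
    return case


def fraud_check(case: dict) -> dict:
    case["fraud_flag"] = case["amount"] > 50_000
    return case


registry = AgentRegistry()
registry.register("triage", triage)
registry.register("fraud_check", fraud_check)

claim = {"claim_id": "C-1", "amount": 12_000}
result = registry.run_pipeline(["triage", "fraud_check"], claim)
```

The same two agents could be reused in an onboarding or payout pipeline by passing a different list of names, which is the opposite of building one bot per use case.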

Their use cases were practical: automated claims, risk scoring, fraud detection, payout decisions and internal workflow routing, all powered by a library of agents that can be mixed and matched.

GoML has similarly built claim-triage models, fraud-flagging pipelines and underwriter-support tools that already operate as independent components. With a mesh setup, they can become orchestrated agents with shared memory, consistent rules and reliable handoffs.

That shifts us from “a set of tools” to “a coordinated system that can run end-to-end financial processes”.

Trust and standards will decide who scales

Both AWS and Allianz repeated one theme: trust. Without trust, there can be no meaningful agents.

That means:

- Reproducible behavior

- Secure deployment

- Strong identity and access

- Consistent policies

- Audit trails regulators can review

- Model independence

- Clear separation of business logic and decision logic

Amazon Bedrock, with its broad set of capabilities, is positioned to serve as the foundation for secure, policy-driven agent deployment. The message was simple: if you cannot prove how an agent behaved at 2:13 PM on a random Tuesday, you will not be allowed to scale it.
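One way to picture that kind of proof is a tamper-evident decision log. The Python sketch below is an assumption-heavy illustration, not a Bedrock feature or API: the `AuditLog` class and its fields are hypothetical. Each entry stores the agent's inputs and decision along with a SHA-256 hash that also covers the previous entry, so any later edit to the history breaks the chain.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes the same.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Hypothetical append-only log where each entry hashes the one before it."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def record(self, agent, inputs, decision, timestamp=None):
        body = {
            "ts": timestamp or datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "inputs": inputs,
            "decision": decision,
            "prev": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed or reordered."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.record("underwriting-agent", {"applicant_id": "A-42", "score": 710}, "approve")
log.record("payout-agent", {"claim_id": "C-1", "amount": 1200}, "hold")
intact = log.verify()
```

In practice the log would live in write-once storage rather than in memory, but the chaining idea is what lets a reviewer trust that the record of a given decision has not been rewritten after the fact.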

For our clients, this is the make-or-break requirement, especially in lending, risk, and insurance operations. Many current deployments already track decision data, model inputs, and execution logs. A Bedrock-style agent layer simply strengthens what is already there: more transparency, more traceability and a stronger compliance posture.

Payments will change: Agents need a native way to transact

Coinbase presented x402, an open, internet-native payment standard designed for machine-to-machine commerce. Today’s payment systems assume a human somewhere in the loop. Agents break that model.

x402 supports:

- micro and macro payments

- stablecoin-based settlement

- autonomous spending with guardrails

- instant settlement

- low fees

- programmable controls

This matters for any agent system that needs to buy data, access APIs, or trigger a transaction without waiting for a human.
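The "autonomous spending with guardrails" idea can be sketched without touching any payment rail. The Python below does not use or represent the actual payment standard's API. `SpendGuard`, its limits and the amounts are hypothetical, and a real deployment would enforce such limits outside the agent's own process.

```python
from decimal import Decimal


class SpendGuard:
    """Hypothetical guardrail: a per-payment cap plus a total budget."""

    def __init__(self, per_payment_cap: str, total_budget: str):
        # Decimal avoids the rounding surprises of binary floats for money.
        self.per_payment_cap = Decimal(per_payment_cap)
        self.remaining = Decimal(total_budget)

    def authorize(self, amount: str) -> bool:
        """Approve and deduct the payment only if it fits both limits."""
        value = Decimal(amount)
        if value <= 0 or value > self.per_payment_cap or value > self.remaining:
            return False
        self.remaining -= value
        return True


# An underwriting agent buying bureau data, with assumed limits.
guard = SpendGuard(per_payment_cap="0.50", total_budget="1.00")
decisions = [
    guard.authorize("0.40"),  # within both limits
    guard.authorize("0.75"),  # over the per-payment cap
    guard.authorize("0.50"),  # exactly at the cap, budget still allows it
    guard.authorize("0.20"),  # only 0.10 of budget remains
]
```

The agent only ever calls `authorize`; the payment itself would be sent by whatever rail is in use, and only when the guard returns `True`.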

We build agentic frameworks for financial services, which makes this kind of standard extremely relevant. Think of an underwriting agent that pays for external bureau data in real time. Or a trading-support agent that buys premium signals. Or a claims-assessment agent that triggers a controlled micro-payout.

These are realistic paths once payment rails evolve.

From isolated bots to governed, orchestrated systems

If you want AI to run critical financial processes, you need three things:

- A mesh architecture that prevents chaos as agents multiply.

- Governance and observability so every decision is traceable.

- Open standards for both communication and payments.

This matches what we already see with GoML clients. Everyone starts with one or two use cases. Then they want agents to talk to each other. Then they want shared context. Then they want to trigger actions. The only sustainable path is a governed, orchestrated approach.

Agentic AI is more of an architecture layer than a feature. The companies that build this foundation early will run circles around those still stitching together pilots.

What are the main challenges in implementing AI agents for financial services?

Getting implementation right is crucial to realizing AI's potential in a vertical like finance. Here are some of the challenges involved:

Data security and privacy

Protecting sensitive customer and financial data against breaches is critical. Institutions must enforce strong encryption, access controls, and compliance with regulations to maintain client trust and meet legal requirements.

AI bias and fairness

AI models can inherit and amplify biases found in historical financial data, leading to unfair outcomes. Continuous review and monitoring are needed to ensure decisions are equitable and compliant.
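Continuous monitoring usually starts with a simple metric. As one example, the Python sketch below computes approval rates per group and the ratio between the lowest and highest rate, often called the disparate impact ratio. The group labels and decisions are made-up sample data, and the 0.8 cutoff mentioned in the comment is only a widely used rule of thumb (the "four-fifths rule"), not a legal test for any specific jurisdiction or product.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its share of approved decisions.

    `decisions` is an iterable of (group, approved) pairs.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: a / t for group, (a, t) in counts.items()}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # Nobody was approved, so there is no gap between groups.
    return min(rates.values()) / highest


# Made-up loan decisions: group A approved 8 of 10, group B approved 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
ratio = disparate_impact_ratio(sample)
# A ratio below 0.8 is a common signal that the model deserves a closer look.
needs_review = ratio < 0.8
```

A single ratio like this does not prove or rule out unfairness. It is a cheap tripwire that tells a review team where to look first.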

Explainability and transparency

Many AI models act as black boxes, making decisions hard to interpret. Financial institutions must use models and tools that offer clear, auditable explanations to satisfy regulatory and internal demands.

Regulatory clarity and compliance

Navigating evolving and sometimes ambiguous regulations on AI use poses a challenge. Institutions need proactive legal and compliance oversight through every phase of AI adoption.

Human oversight and accountability

AI can support, but not replace, judgment in high-stakes settings. Defining clear responsibility for AI-driven outcomes, along with robust human review, is essential for trust and risk management.

These challenges must be addressed to ensure that AI delivers secure, fair, and effective outcomes in financial services.

As models mature, the financial services industry will see new business models, deeper client relationships and more agile operations.

Want to see the impact for your financial organization? GoML is a leading Gen AI development company that helps you implement cutting-edge solutions faster, safely and at scale. Reach out to us today.