Embarking on a journey into time series analysis reveals how ubiquitous time series are across diverse realms. A time series is an ordered sequence of values, usually equally spaced over time. Picture a car’s trajectory unfolding over time, each moment a step forward in latitude and longitude.

Moore’s law is an iconic example, and similar patterns appear in stock prices, weather predictions, and historical trends. Time series, a dynamic tapestry of data, let us decipher both the car’s path and the rhythms underlying countless phenomena, unlocking insights that shape our understanding of the world.

Analysing Time Series

Univariate Time Series Analysis:

Univariate time series analysis involves studying the changes in a single variable over time. In the context of car paths, this could mean examining the evolution of either latitude or longitude at each time step. By focusing on a single dimension, we can gain insights into the overall patterns and trends of the car’s movement.

Consider a scenario where time step zero represents a particular latitude and longitude. As subsequent time steps unfold, the values of either latitude or longitude change, forming a univariate time series. Analyzing this data allows us to identify key patterns, such as acceleration, deceleration, stops, and turns.

Multivariate Time Series Analysis:

Multivariate time series analysis involves studying the changes in multiple variables over time. In the context of car paths, this could mean simultaneously examining latitude and longitude at each time step. By considering multiple dimensions, we can uncover more nuanced insights into the dynamics of the car’s journey.

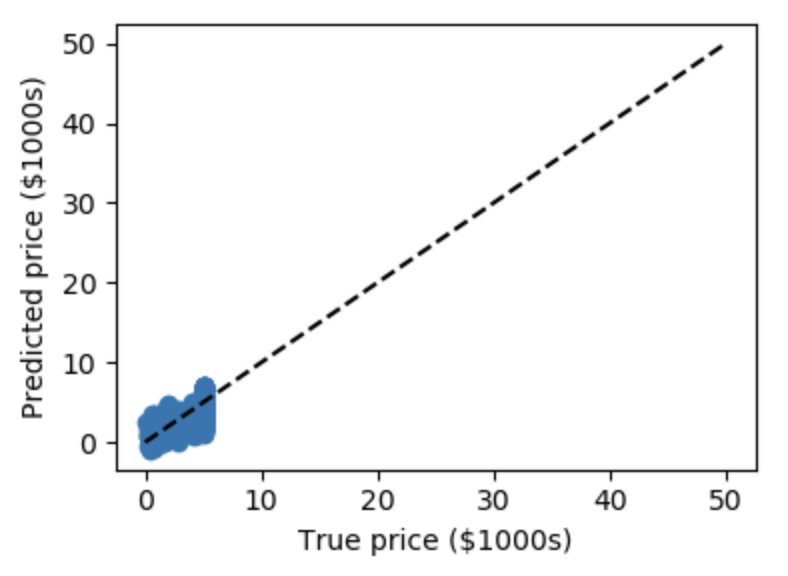

In the figure, for instance, the predictions follow the patterns in the available data, while the actual values may also vary because of additional factors.

Analyzing these variables together allows us to identify specific routes and understand how changes in one dimension may influence the other.

Univariate and Multivariate Time Series Analysis:

The true power of analyzing car paths lies in combining both univariate and multivariate time series analysis. This hybrid approach provides a holistic understanding of the journey, considering both the overall patterns and the intricate relationships between different variables.

Consider the path of a car as it travels. At time step zero, the car is at a particular latitude and longitude. At each subsequent time step, these values change according to the car’s path.

Machine Learning applied to Time Series

Prediction or Forecasting:

Time series prediction involves foreseeing future values based on past data patterns. It’s akin to anticipating the next note in a melody by understanding the previous ones. Through mathematical models and statistical analyses, we project upcoming points in the time series, aiding in informed decision-making and proactive planning.

Imputation:

Time series imputation is like filling in the gaps. When data points are missing from a timeline, imputation methods estimate those values. It’s like completing a puzzle, using the existing pieces to make intelligent guesses about the missing ones, which gives a more complete and coherent picture of the data.
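Linear interpolation is one of the simplest imputation methods: each missing value is estimated from the straight line between its nearest known neighbours. The sketch below assumes missing points are marked as `NaN` in a NumPy array; the helper name `impute_linear` is hypothetical, and more elaborate methods (including learned models) follow the same idea of estimating gaps from the surrounding data.

```python
import numpy as np

def impute_linear(series):
    """Fill NaN gaps in a 1-D series by linear interpolation between known neighbours.

    Hypothetical helper for illustration. Leading/trailing NaNs are filled with the
    nearest known value, because np.interp holds the end values constant.
    """
    series = np.asarray(series, dtype=float)
    filled = series.copy()
    missing = np.isnan(series)
    idx = np.arange(len(series))
    # Evaluate the piecewise-linear curve through the known points at the missing indices
    filled[missing] = np.interp(idx[missing], idx[~missing], series[~missing])
    return filled

readings = np.array([1.0, 2.0, np.nan, 4.0, np.nan, np.nan, 7.0])
print(impute_linear(readings))  # [1. 2. 3. 4. 5. 6. 7.]
```

Linear interpolation works well for short gaps in smooth series; across long gaps it cannot reproduce seasonality or other structure that the missing stretch may have contained.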

Patterns in Time Series

1. Trend

A trend in time series indicates a clear overall direction of movement. It’s like identifying whether things are generally going up, down, or staying constant over time. In the context of Moore’s Law, the upward trend signifies a consistent and predictable increase, forming a distinctive trajectory in the data.
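A linear trend can be sketched as a line with a given slope, and its direction recovered with a least-squares fit. The `trend` helper, the slope of 0.05 per step, and the four-year daily time axis are illustrative assumptions, not values from the source.

```python
import numpy as np

def trend(time, slope=0.0):
    """A straight-line trend: the series rises (or falls) by `slope` per time step."""
    return slope * time

time = np.arange(4 * 365)               # assumption: four "years" of daily steps
series = 10 + trend(time, slope=0.05)   # assumption: baseline of 10, slope of 0.05

# Recover the overall direction with a degree-1 least-squares fit (slope first, then intercept)
fitted_slope, fitted_intercept = np.polyfit(time, series, deg=1)
print(round(fitted_slope, 3), round(fitted_intercept, 3))  # 0.05 10.0
```

Moore’s law is an exponential trend rather than a linear one; it becomes a straight line of this kind after taking the logarithm of the values.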

2. Seasonality

Seasonality in time series refers to recurring patterns that unfold at predictable intervals. Imagine a rhythm, like the ebb and flow of waves, but in data. In a chart of active users on a website for software developers, the regular dips represent a consistent cycle, possibly influenced by specific times of the day, week, or month. It’s the heartbeat of a recurring pattern within the data.
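A seasonal component can be modelled as a fixed pattern that repeats every `period` steps. The sketch below assumes a made-up weekly profile for the developer-site example (busy on weekdays, dips at the weekend); the numbers and the `seasonality` helper are illustrative, not real data.

```python
import numpy as np

def seasonality(time, pattern):
    """Repeat one season's `pattern` (one value per time step) indefinitely over `time`."""
    pattern = np.asarray(pattern, dtype=float)
    period = len(pattern)            # the season length equals the pattern length
    return pattern[time % period]    # the position within the current season picks the value

# Hypothetical weekly profile of active users (Mon..Sun): busy weekdays, weekend dips
weekly = [100, 105, 110, 108, 102, 40, 35]
time = np.arange(28)                 # four weeks of daily steps
users = seasonality(time, weekly)

print(users[:7])    # first week
print(users[7:14])  # second week: identical, because the pattern repeats
```

Adding a trend and random noise to such a pattern gives a more realistic synthetic series to experiment with.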

3. Auto-correlation and Noise

Auto-correlation:

- Auto-correlation involves examining the relationship between a time series and its lagged values. In simple terms, it’s like checking if the current value is somehow dependent on its past values.

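- Positive auto-correlation means that values tend to be followed by similar values (highs follow highs, lows follow lows), while negative auto-correlation means that values tend to be followed by values on the opposite side of the mean. This analysis helps uncover temporal dependencies that can be used to forecast future values.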
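The sample auto-correlation at lag k compares the mean-centred series with a copy of itself shifted by k steps. In this sketch, the `autocorrelation` helper and the two synthetic series are assumptions made for illustration: one series carries over 80% of its previous value (positive auto-correlation), and the other flips sign at every step (negative auto-correlation).

```python
import numpy as np

def autocorrelation(series, lag):
    """Sample auto-correlation of a 1-D series with itself shifted by `lag` (>= 1) steps."""
    if lag < 1:
        raise ValueError("lag must be at least 1")
    x = np.asarray(series, dtype=float)
    x = x - x.mean()                                   # centre the series
    return np.dot(x[:-lag], x[lag:]) / np.dot(x, x)    # normalised by total variation

rng = np.random.default_rng(0)
noise = rng.normal(size=1000)

# Synthetic series: each value keeps 80% of the previous one plus fresh noise
carry_over = np.zeros(1000)
for t in range(1, 1000):
    carry_over[t] = 0.8 * carry_over[t - 1] + noise[t]

alternating = np.tile([1.0, -1.0], 500)   # flips sign at every step

print(round(autocorrelation(carry_over, 1), 2))   # close to 0.8: positive
print(round(autocorrelation(alternating, 1), 2))  # -1.0: negative
```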

Noise:

- Noise in time series refers to random fluctuations or irregularities that obscure meaningful patterns. It’s like the static on a radio channel that distorts the signal. Identifying and mitigating noise is crucial for accurate analysis: reducing it reveals the underlying structure of the data, letting us focus on genuine trends and patterns and making predictions more reliable.
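A moving average is one simple way to mitigate noise: each point is replaced by the mean of its neighbours, so independent random fluctuations partly cancel out. The sketch assumes a synthetic sine wave plus Gaussian noise; the `moving_average` helper and the window of 11 steps are arbitrary illustrative choices.

```python
import numpy as np

def moving_average(series, window):
    """Mean of each run of `window` consecutive values ('valid' mode: no padding at the ends)."""
    kernel = np.ones(window) / window
    return np.convolve(series, kernel, mode="valid")

rng = np.random.default_rng(42)
time = np.arange(730)
signal = 10 * np.sin(2 * np.pi * time / 365)              # assumption: slow, clean pattern
noisy = signal + rng.normal(scale=2.0, size=time.size)    # assumption: Gaussian noise

window = 11                 # odd window, so each average has a well-defined centre
half = window // 2
smoothed = moving_average(noisy, window)
reference = signal[half:-half]   # the clean signal at each window's centre

rmse_noisy = np.sqrt(np.mean((noisy[half:-half] - reference) ** 2))
rmse_smoothed = np.sqrt(np.mean((smoothed - reference) ** 2))
print(rmse_noisy, rmse_smoothed)  # the smoothed series sits much closer to the signal
```

A wider window averages away more noise but also blurs genuine short-term changes, so the window size is a trade-off.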

Unveiling Time Series Forecasting: Training Insights

Naive Forecasting:

In the world of time series forecasting, a simple start is to assume the next value will be the same as the last one. This method, called naive forecasting, sets a baseline for our predictions. Though simple, it is often surprisingly hard to beat, and it gives a reference point for judging how well a more sophisticated model performs.
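In code, the naive forecast for the validation period is just the series shifted by one step. The sketch assumes a NumPy array `series` and a hypothetical index `split_time` where the validation period begins; the toy values are made up.

```python
import numpy as np

def naive_forecast(series, split_time):
    """Forecast each value from `split_time` onward as the value one step earlier."""
    series = np.asarray(series, dtype=float)
    return series[split_time - 1:-1]

series = np.array([3.0, 5.0, 4.0, 6.0, 8.0, 7.0])   # toy data
split_time = 3
x_valid = series[split_time:]                    # actual values: [6. 8. 7.]
forecast = naive_forecast(series, split_time)    # forecasts:     [4. 6. 8.]
print(forecast)
print(np.abs(forecast - x_valid).mean())         # baseline mean absolute error, about 1.67
```

Any candidate model should beat this baseline on the same validation period; if it does not, the extra complexity is not paying off.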

Measuring Performance:

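To gauge how effective a forecasting model is, we divide the time series into training, validation, and test periods. This fixed partitioning keeps the evaluation fair, because the model is always evaluated on periods it was not trained on. If the series has seasonality, each period should span a whole number of seasons (for example, a full year for yearly seasonality); otherwise one period could over-represent part of the season and bias the results.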
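A minimal sketch of fixed partitioning, assuming daily data with yearly seasonality, so each period is cut at a whole number of years. The helper name `fixed_partition` and the 3/1/1-year split are illustrative assumptions; the key point is that the periods are contiguous and kept in time order rather than shuffled.

```python
import numpy as np

def fixed_partition(series, train_end, valid_end):
    """Split a series into contiguous training, validation, and test periods (no shuffling)."""
    return series[:train_end], series[train_end:valid_end], series[valid_end:]

days = np.arange(5 * 365)   # assumption: five years of daily observations (values = day index)
train, valid, test = fixed_partition(days, train_end=3 * 365, valid_end=4 * 365)
print(len(train), len(valid), len(test))  # 1095 365 365
```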

Training and Evaluation:

During training, the model is fitted on the training period, and its architecture and hyperparameters are tuned by evaluating it on the validation period. Once tuning is done, retraining on the training and validation data combined often improves the model’s predictions, since it then learns from more, and more recent, data.

Test Set Importance:

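Because the test period is the closest to the present, it often carries the strongest signal for predicting future values, so after testing, the model is often retrained with the test data included as well. Some practitioners skip a separate test set altogether: they train and validate on past data, and the future itself serves as the test set. This keeps the most recent data available for training, which matters when the model will be used on live data.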

Roll-Forward Partitioning:

Alternatively, roll-forward partitioning starts with a short training period and gradually increases it, for example by one day or one week at a time. At each iteration, the model is trained on the current training period and used to forecast the following day or week in the validation period. While our focus in this course is on fixed partitioning, this dynamic approach is worth understanding.
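Roll-forward partitioning can be expressed as a generator of index ranges. This is a sketch under assumptions: the helper name `roll_forward_splits` is hypothetical, the training window only grows (old data is never dropped), and fitting a model on each window is left out. scikit-learn’s `TimeSeriesSplit` implements a similar expanding-window scheme.

```python
import numpy as np

def roll_forward_splits(n_steps, initial_train, horizon):
    """Yield (train_indices, forecast_indices); the training window grows by `horizon` each round."""
    train_end = initial_train
    while train_end + horizon <= n_steps:
        yield np.arange(train_end), np.arange(train_end, train_end + horizon)
        train_end += horizon

# Hypothetical: 28 days of data, start with 14 days of training, forecast one week ahead each round
for train_idx, forecast_idx in roll_forward_splits(28, initial_train=14, horizon=7):
    print(len(train_idx), forecast_idx[0], forecast_idx[-1])
# 14 14 20
# 21 21 27
```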

By unraveling these concepts, we gain practical insights into training time series forecasting models, empowering us to navigate the intricate dynamics of past data to predict the future with confidence.

Metrics Unveiled: Evaluating Time Series Performance

1. Errors:

`errors = forecasts - actual`

The errors are the differences between the values forecast by our model and the actual values over the evaluation period.

2. Mean Squared Error (MSE):

`mse = np.square(errors).mean()`

Evaluating the performance of a time series forecasting model starts from the difference between forecasted and actual values. The Mean Squared Error (MSE) is a common metric, calculated by squaring the errors and taking their mean. Why square? Squaring makes every error positive, so positive and negative errors don’t cancel each other out, and it gives large errors more weight than small ones. The result summarizes overall model accuracy in a single number.

3. Root Mean Squared Error (RMSE):

`rmse = np.sqrt(mse)`

Because MSE is expressed in squared units, taking its square root gives the Root Mean Squared Error (RMSE), which is in the same units as the original data. This makes RMSE easier to interpret, and it is often preferred for that reason: it represents a typical magnitude of the forecasting errors. A smaller RMSE indicates a more accurate model.

4. Mean Absolute Error (MAE):

`mae = np.abs(errors).mean()`

An alternative to MSE, the Mean Absolute Error (MAE), also called the Mean Absolute Deviation (MAD), takes the absolute values of the errors instead of squaring them. It measures the average size of the forecasting errors without penalizing large errors as heavily as MSE does. The choice between the two depends on the cost of errors in your context: if large errors are disproportionately costly, MSE may be the better choice; if the cost grows in proportion to the size of the error, MAE may be a better fit.

5. Mean Absolute Percentage Error (MAPE):

`mape = np.abs(errors / x_valid).mean()`

For a percentage-based perspective, the Mean Absolute Percentage Error (MAPE) is the mean ratio between the absolute error and the absolute actual value (here `x_valid`, the actual values of the validation period). It expresses error magnitude relative to the scale of the data, which is useful when comparing errors across series of different sizes. Note that this expression yields a fraction (multiply by 100 for a percentage) and is undefined when any actual value is zero.
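The four metrics can be computed together for one evaluation period. This sketch wraps the formulas above in a hypothetical `forecast_metrics` helper and uses made-up values, where `x_valid` stands for the actual values and `forecasts` for a model’s predictions.

```python
import numpy as np

def forecast_metrics(actual, forecasts):
    """MSE, RMSE, MAE and MAPE for one evaluation period (hypothetical helper)."""
    actual = np.asarray(actual, dtype=float)
    forecasts = np.asarray(forecasts, dtype=float)
    errors = forecasts - actual
    mse = np.square(errors).mean()
    return {
        "mse": mse,
        "rmse": np.sqrt(mse),
        "mae": np.abs(errors).mean(),
        # MAPE as a fraction; multiply by 100 for a percentage. Undefined if any actual value is 0.
        "mape": np.abs(errors / actual).mean(),
    }

x_valid = np.array([10.0, 20.0, 40.0])     # made-up actual values
forecasts = np.array([12.0, 18.0, 44.0])   # made-up forecasts: errors are [2, -2, 4]
for name, value in forecast_metrics(x_valid, forecasts).items():
    print(f"{name}: {value:.3f}")
# mse: 8.000
# rmse: 2.828
# mae: 2.667
# mape: 0.133
```

Here the single error of 4 dominates MSE and RMSE (16 of the 24 total squared error), while MAE weighs it only in proportion to its size, which illustrates the trade-off between the two.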

In the intricate landscape of time series forecasting, we’ve traversed fundamental concepts shaping our grasp of data trends, patterns, and predictive modeling. From the structured sequences defining time series to dissecting car path analyses and integrating machine learning, we’ve delved into diverse applications and methodologies that elevate forecasting to a potent tool.

Our exploration extended to evaluating forecasting model performance through metrics like Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and Mean Absolute Percentage Error (MAPE). Each metric offers a distinct lens on the precision and reliability of our predictions, allowing us to refine models according to specific data nuances.

As we conclude, synthesizing these concepts lays a sturdy foundation for those venturing into time series forecasting. Whether unraveling the rhythmic patterns in a car’s journey, predicting stock prices, or refining weather forecasts, the insights acquired help us make sense of past data and project into the future with confidence. Time series forecasting, blending mathematics, statistics, and machine learning, beckons us to unveil the concealed wisdom in the chronological progression of time, facilitating informed decisions and strategic planning.