Xception is a convolutional neural network (CNN) architecture that has gained popularity in computer vision for its innovative design and strong performance. Understanding how it is built is a good way into the broader world of convolutional neural networks. In this blog post, we will explore the evolution of Xception, the core concept behind its design, the structure of its architecture, a comparative analysis with other models, its applications, and future directions for improvements.

A Brief Overview of Xception’s Evolution

Xception was proposed by François Chollet at Google. The paper first appeared on arXiv in 2016 and was published at CVPR 2017. Its name stands for “Extreme Inception”: the paper treats Inception modules as a middle ground between regular convolutions and depthwise separable convolutions, then takes that idea to its extreme by building the network almost entirely from depthwise separable convolutions. The goal was to use model capacity more efficiently on complex computer vision tasks.

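Depthwise separable convolutions were not new, but Xception showed how far they could be pushed. Each one splits a standard convolution into a per-channel spatial convolution followed by a 1×1 pointwise convolution, which needs far fewer weights and operations. With roughly the same number of parameters as Inception V3, Xception slightly outperformed it on ImageNet and did noticeably better on Google’s much larger JFT dataset, which the paper attributes to a more efficient use of parameters rather than added capacity. Xception is still studied and widely used today (for example, it ships as a pretrained model in Keras), showing that a change to a network’s basic building block can lead to real improvements.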
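To make the efficiency argument concrete, the short Python sketch below counts the weights in one standard 3×3 convolution and in its depthwise separable counterpart. The function names and the 128-to-256-channel example are illustrative choices, not taken from the Xception paper, and biases are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a regular k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise separable convolution (biases ignored):
    one k x k spatial filter per input channel, then a 1x1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


# Illustrative layer: 3x3 kernels, 128 input channels, 256 output channels.
std = standard_conv_params(3, 128, 256)
sep = separable_conv_params(3, 128, 256)
print(std, sep, round(std / sep, 2))  # 294912 33920 8.69
```

In general the separable-to-standard ratio is 1/c_out + 1/k², so for 3×3 kernels the saving approaches 9× as the number of output channels grows.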

The Structure of the Xception Architecture

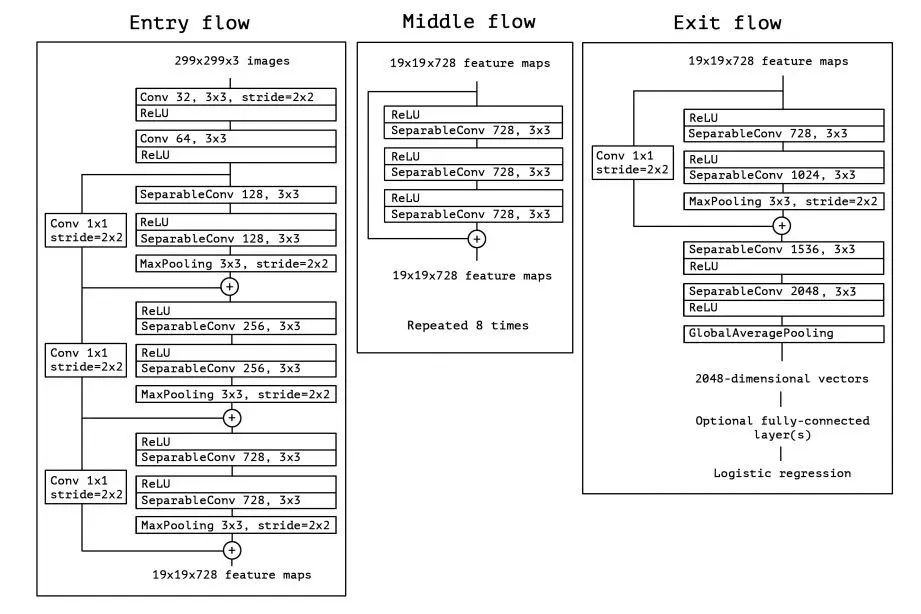

Delving into Xception’s anatomy reveals a carefully designed framework that relies on depthwise separable convolutions for efficiency and accuracy. Its 36 convolutional layers are grouped into 14 modules, which are in turn organized into an entry flow, a middle flow, and an exit flow, each serving a specific role in feature extraction.

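- The entry flow downsamples the input and increases the number of channels (up to 728), preparing richer features for the rest of the network.

- The middle flow repeats one module eight times at a fixed resolution and width, refining features while the cost grows only linearly with the number of repeats.

- The exit flow widens the features to 2048 channels and aggregates them for classification.

Throughout the network, each convolution is followed by batch normalization, ReLU is the activation function, and all modules except the first and last have residual (skip) connections, which make the deep stack easier to train.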

After the exit flow, global average pooling reduces each feature map to a single value, and a softmax classifier (optionally preceded by fully connected layers) turns the resulting vector into class probabilities. Pooling instead of flattening keeps the classifier small, which helps Xception stay efficient without giving up accuracy.
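As a rough sanity check on the figure of 36 convolutional layers, the sketch below tallies the layers flow by flow using the layout described in the original paper. The list-based structure is purely illustrative, and the total only comes out to 36 if the 1×1 convolutions on the residual shortcuts are left out of the count, which is our assumption about how the paper counts.

```python
# Convolutional layers per flow, following the Xception paper's layout.
# Assumption: the 1x1 shortcut convolutions are NOT counted.
ENTRY_FLOW = ["conv32", "conv64"] + [f"sepconv{c}" for c in (128, 256, 728) for _ in range(2)]
MIDDLE_FLOW = ["sepconv728"] * 3 * 8  # 8 repeated modules, 3 separable convs each
EXIT_FLOW = ["sepconv728", "sepconv1024", "sepconv1536", "sepconv2048"]


def count_layers() -> dict[str, int]:
    """Count convolutional layers in each flow and in total."""
    counts = {
        "entry": len(ENTRY_FLOW),
        "middle": len(MIDDLE_FLOW),
        "exit": len(EXIT_FLOW),
    }
    counts["total"] = sum(counts.values())
    return counts


print(count_layers())  # {'entry': 8, 'middle': 24, 'exit': 4, 'total': 36}
```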


Architecture of Xception

The Xception architecture consists of three main parts: the entry flow, the middle flow, and the exit flow.

1. Entry Flow

The entry flow is responsible for downsampling the input image while extracting essential features. It consists of the following steps:

- Initial Convolutions: The input image (299×299×3 in the original paper) is passed through a convolutional layer with 32 filters of size 3×3 and stride 2, followed by another convolutional layer with 64 filters of size 3×3. Each is followed by batch normalization and ReLU.

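- Residual Blocks: Three residual blocks follow. Each contains two depthwise separable convolutions and a 3×3 max-pooling layer with stride 2, while the shortcut path uses a 1×1 convolution with stride 2 so that the shapes match when the two paths are added. The number of filters increases from block to block (128, 256, 728) to capture more complex features, and the entry flow ends with a 19×19×728 feature map.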
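The spatial sizes through the entry flow can be traced with simple arithmetic. The sketch below assumes a 299×299 input and the padding choices of the Keras reference implementation as we understand them (`valid` for the first two convolutions, `same` for the separable convolutions and max-pooling layers); the helper names are hypothetical.

```python
import math


def out_size(size: int, k: int, stride: int, padding: str) -> int:
    """Output side length of a square convolution or pooling layer."""
    if padding == "valid":
        return (size - k) // stride + 1
    if padding == "same":
        return math.ceil(size / stride)
    raise ValueError(f"unknown padding: {padding}")


def trace_entry_flow(size: int = 299) -> list[tuple[str, int, int]]:
    """Return (stage, side length, channels) after each entry-flow stage."""
    trace = []
    size = out_size(size, k=3, stride=2, padding="valid")
    trace.append(("conv 3x3, stride 2, 32 filters", size, 32))
    size = out_size(size, k=3, stride=1, padding="valid")
    trace.append(("conv 3x3, 64 filters", size, 64))
    for channels in (128, 256, 728):
        # The separable convs ("same" padding, stride 1) keep the size;
        # the 3x3 stride-2 max pool (and the 1x1 stride-2 shortcut) halve it.
        size = out_size(size, k=3, stride=2, padding="same")
        trace.append((f"residual block, {channels} filters", size, channels))
    return trace


for stage, side, channels in trace_entry_flow():
    print(f"{stage:<32} -> {side}x{side}x{channels}")
```

Under these assumptions the trace ends at 19×19×728, the shape the paper gives for the input to the middle flow.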

2. Middle Flow

The middle flow is the core of the Xception architecture and refines the features produced by the entry flow. It consists of 8 identical residual blocks, each containing three ReLU + depthwise separable convolution layers with 728 filters and an identity shortcut. Because every block keeps the 19×19×728 shape, the blocks can be stacked to capture complex patterns and relationships within the data without changing the tensor size.
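Because every middle-flow block keeps the same shape, its cost is easy to tally. The sketch below counts the convolution weights in the middle flow (biases and batch-normalization parameters ignored) and compares them with a hypothetical variant that uses standard 3×3 convolutions instead; the function names and the comparison variant are our own illustration.

```python
def separable_weights(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k filters (one per input channel) plus a 1x1 pointwise conv."""
    return k * k * c_in + c_in * c_out


def standard_weights(k: int, c_in: int, c_out: int) -> int:
    """A regular k x k convolution mapping c_in channels to c_out channels."""
    return k * k * c_in * c_out


def middle_flow_weights(per_layer, repeats: int = 8, convs_per_module: int = 3,
                        channels: int = 728, k: int = 3) -> int:
    """Total conv weights in the middle flow for a given per-layer counting rule."""
    return repeats * convs_per_module * per_layer(k, channels, channels)


sep_total = middle_flow_weights(separable_weights)
std_total = middle_flow_weights(standard_weights)
print(f"separable: {sep_total:,}  standard: {std_total:,}")
# separable: 12,876,864  standard: 114,476,544
```

By this count the middle flow holds roughly 12.9 million of Xception’s approximately 23 million parameters; with standard convolutions the same blocks alone would need close to 9× as many weights.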

3. Exit Flow

The exit flow is responsible for final feature extraction and classification. It consists of:

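- Residual Block and Final Convolutions: One residual block with two depthwise separable convolutions (728 and 1024 filters) and a 3×3 max-pooling layer with stride 2, whose shortcut uses a 1×1 convolution with stride 2. It is followed by two more depthwise separable convolutions with 1536 and 2048 filters, which have no shortcut. The number of filters increases progressively (728, 1024, 1536, 2048) to refine the extracted features.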

- Global Average Pooling: A global average pooling layer averages each feature map over its spatial dimensions, reducing the 2048 feature maps to a single 2048-dimensional vector.

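- Fully Connected Layer: Finally, a fully connected (dense) layer with softmax activation performs classification, with the number of units equal to the number of classes in the dataset. The original paper also allows optional fully connected layers before this final layer.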
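The classification head is simple enough to write out by hand. The pure-Python sketch below applies global average pooling, a dense layer, and a softmax to a toy input with two feature maps and three classes; in Xception the head would instead receive 2048 feature maps of size 10×10 (for a 299×299 input). All weights and inputs are made-up values for illustration.

```python
import math


def global_average_pool(feature_maps):
    """feature_maps: list of C channels, each an H x W list of lists.
    Returns a length-C vector holding the mean of each channel."""
    return [sum(sum(row) for row in fm) / (len(fm) * len(fm[0])) for fm in feature_maps]


def dense(vector, weights, biases):
    """weights[j][i] connects input i to output unit j (one row per class)."""
    return [sum(w * x for w, x in zip(row, vector)) + b for row, b in zip(weights, biases)]


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy head: 2 feature maps of size 2x2, 3 classes (all values made up).
features = [[[1.0, 2.0], [3.0, 4.0]],
            [[0.0, 0.0], [0.0, 4.0]]]
pooled = global_average_pool(features)  # [2.5, 1.0]
logits = dense(pooled, weights=[[1, 0], [0, 1], [1, 1]], biases=[0, 0, -3.5])
probs = softmax(logits)
print(pooled, logits, [round(q, 3) for q in probs])
```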

Xception vs. Other Models

In the landscape of deep learning models, Xception differentiates itself through a balance of efficiency and accuracy, particularly when placed alongside contemporaries like VGG and ResNet. Because it is built on depthwise separable convolutions, Xception uses far fewer parameters (about 23 million, versus roughly 138 million for VGG-16) without sacrificing the quality of feature extraction. This is a stark contrast to VGG, which, despite its simplicity and effectiveness in many computer vision tasks, relies on stacks of standard convolutions and large fully connected layers whose many parameters lead to significant computational demands.

On the other hand, ResNet introduced skip connections to alleviate the vanishing gradient problem in deep networks, enabling the training of much deeper models than before. Xception adopts residual connections as well, but it also changes the convolutional operations themselves, replacing standard convolutions with depthwise separable ones for greater parameter efficiency.

The key idea behind Xception’s approach is to separate two kinds of learning that a standard convolution performs at once.

By decoupling the mapping of cross-channel correlations (handled by the 1×1 pointwise convolutions) from the mapping of spatial correlations (handled by the per-channel depthwise convolutions), each Xception layer needs far fewer weights and multiply-adds than a standard convolution of the same shape, while the network as a whole reaches comparable or better accuracy. This gives Xception an edge in scenarios where computational resources are limited or where speed is a critical factor. Consequently, when evaluating models for their balance of computational efficiency and accuracy, Xception is a compelling choice and a significant step in the evolution of convolutional neural networks.
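The decoupling can be checked numerically. The pure-Python sketch below implements a depthwise convolution (spatial filtering, one kernel per channel) followed by a pointwise 1×1 convolution (channel mixing only), with stride 1, no padding, and no activation in between. It then confirms that the result equals a regular convolution whose kernel is the product of the two factors, which is exactly the restricted family of kernels a depthwise separable convolution can represent. The depthwise-then-pointwise order follows common implementations such as Keras `SeparableConv2D`; the function names and toy data are our own.

```python
def depthwise_conv(x, d):
    """x: C x H x W input, d: C x k x k kernels (one per channel).
    Filters each channel spatially with its own kernel; channels never mix."""
    k = len(d[0])
    h_out, w_out = len(x[0]) - k + 1, len(x[0][0]) - k + 1
    return [
        [[sum(x[c][r + i][s + j] * d[c][i][j] for i in range(k) for j in range(k))
          for s in range(w_out)]
         for r in range(h_out)]
        for c in range(len(x))
    ]


def pointwise_conv(y, p):
    """y: C x H x W, p: C x O weights. A 1x1 convolution: mixes channels
    at each pixel independently, with no spatial extent."""
    c_in, h, w = len(y), len(y[0]), len(y[0][0])
    return [
        [[sum(y[c][r][s] * p[c][o] for c in range(c_in)) for s in range(w)] for r in range(h)]
        for o in range(len(p[0]))
    ]


def standard_conv(x, kernel):
    """Regular convolution: kernel is O x C x k x k and mixes space and
    channels in a single step."""
    k = len(kernel[0][0])
    h_out, w_out = len(x[0]) - k + 1, len(x[0][0]) - k + 1
    return [
        [[sum(x[c][r + i][s + j] * kernel[o][c][i][j]
              for c in range(len(x)) for i in range(k) for j in range(k))
          for s in range(w_out)]
         for r in range(h_out)]
        for o in range(len(kernel))
    ]


# Toy example: 2 input channels of size 4x4, 3x3 kernels, 3 output channels.
x = [[[(3 * r + 5 * s + 7 * c) % 11 for s in range(4)] for r in range(4)] for c in range(2)]
d = [[[1, 0, -1], [2, 0, -2], [1, 0, -1]],  # channel 0: Sobel-style filter
     [[0, 1, 0], [1, -4, 1], [0, 1, 0]]]    # channel 1: Laplacian filter
p = [[1, 2, 0], [-1, 0, 3]]                 # p[c][o]: how channel c feeds output o

separable = pointwise_conv(depthwise_conv(x, d), p)

# The equivalent full kernel factorizes as K[o][c][i][j] = p[c][o] * d[c][i][j].
K = [[[[p[c][o] * d[c][i][j] for j in range(3)] for i in range(3)]
      for c in range(2)] for o in range(3)]
assert standard_conv(x, K) == separable
```

A standard convolution with 3 outputs, 2 inputs, and 3×3 kernels has 54 free weights here, while the separable version has only 18 + 6 = 24; the equality holds only because K was built from the factors.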

Xception’s innovative use of depthwise separable convolutions and strategic architectural design sets it apart in the realm of deep learning models. Its balance of efficiency and accuracy, especially when compared to models like VGG and ResNet, makes it a compelling choice for various computer vision tasks. As we delve deeper into improving convolutional neural networks, Xception stands as a milestone in advancing both performance and computational efficiency, shaping the future of machine learning architectures.