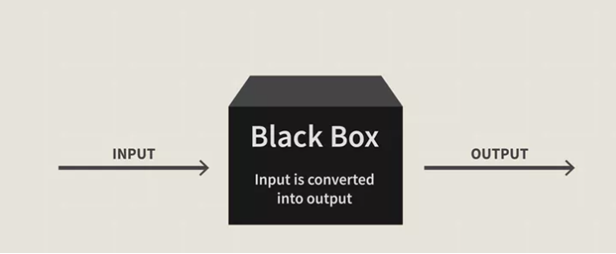

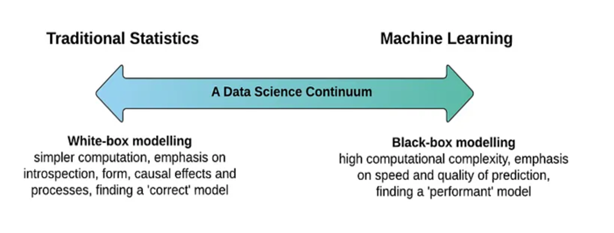

In the fast-moving field of artificial intelligence (AI), the divide between black box and white box models strongly shapes both the direction of innovation and how systems are deployed. This article examines black box models in depth, with a focus on their use in AI chatbots, prompt engineering, and fine-tuning.

Black box models bring both promise and challenges to AI because they do not reveal their internal workings. In AI chatbots they power conversational abilities, allowing systems to interpret queries and produce human-like responses. Prompt engineering adds another dimension: the way a query is phrased plays a critical role in obtaining meaningful outputs from a black box model. The interplay between input phrasing and model response is therefore central to getting good results across a wide range of applications.

Fine-tuning is a critical step in adapting AI models to specific tasks, and it deserves particular scrutiny in the context of black box architectures. Examining how pre-trained models are adjusted to domain-specific requirements sheds light on the adaptability of black box models. It also raises concerns about potential biases and other ethical considerations.
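Fine-tuning usually means continuing training from pre-trained parameters on a smaller, domain-specific dataset. The sketch below illustrates that idea on a deliberately tiny logistic-regression model in plain Python. The datasets, learning rates, and epoch counts are hypothetical. Real fine-tuning of large language models relies on deep-learning frameworks and vastly larger networks.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], int]

def predict_proba(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """Logistic-regression probability that x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(weights, bias, data: List[Example]) -> float:
    """Average cross-entropy of the model on labelled data."""
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = predict_proba(weights, bias, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def sgd_train(weights, bias, data: List[Example], lr: float, epochs: int):
    """Stochastic gradient descent on log-loss, starting from the given parameters."""
    weights = list(weights)
    for _ in range(epochs):
        for x, y in data:
            error = predict_proba(weights, bias, x) - y  # d(log-loss)/d(logit)
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# "Pre-training" on broad, general data (hypothetical toy examples).
general = [([1.0, 0.0], 1), ([0.0, 1.0], 0), ([0.9, 0.2], 1), ([0.1, 0.8], 0)]
w_pre, b_pre = sgd_train([0.0, 0.0], 0.0, general, lr=0.5, epochs=200)

# Fine-tuning: reuse the pre-trained parameters and take small steps on hypothetical domain data.
domain = [([0.6, 0.5], 1), ([0.4, 0.6], 0)]
w_ft, b_ft = sgd_train(w_pre, b_pre, domain, lr=0.1, epochs=50)

print("domain loss before:", round(log_loss(w_pre, b_pre, domain), 3))
print("domain loss after: ", round(log_loss(w_ft, b_ft, domain), 3))
```

The smaller learning rate during fine-tuning is a common design choice. It nudges the model toward the new domain without discarding what it learned during pre-training.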

Black Box Models: The Enigma of Decision-Making

Black box models, characterized by their internal opacity, underpin the decision-making of many AI systems. Language models such as ChatGPT and BERT are prominent examples. For conversational systems like ChatGPT, the practice of prompt engineering adds to the inherent unpredictability of their responses.

Prompt engineering involves crafting specific queries or instructions to extract particular information from these models. This adds another layer of complexity, because subtle variations in input phrasing can yield markedly different outputs. For chatbots built on large language models such as ChatGPT, this makes responses hard to predict. The challenge lies in working out how these models interpret and process information when their decision-making mechanisms are largely hidden.
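To make the effect of phrasing concrete, the sketch below builds prompt variants from a simple template and sends each one to the same black-box callable. `toy_model` is a hypothetical stand-in for a real chatbot, which would normally be reached through a vendor API that is not shown here. The template fields (`Context`, `Task`, `Answer format`) are likewise illustrative assumptions.

```python
from typing import Callable, Dict, List

def build_prompt(task: str, context: str = "", output_format: str = "") -> str:
    """Assemble a prompt from optional context, the task itself, and a requested answer format."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Task: {task}")
    if output_format:
        parts.append(f"Answer format: {output_format}")
    return "\n".join(parts)

def compare_phrasings(model: Callable[[str], str], prompts: List[str]) -> Dict[str, str]:
    """Send each prompt variant to the same black-box model and collect its responses."""
    return {prompt: model(prompt) for prompt in prompts}

def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for a chatbot: callers see only its inputs and outputs."""
    if "Answer format: one word" in prompt:
        return "Paris"
    return "The capital of France is Paris, which is also its largest city."

variants = [
    build_prompt("Name the capital of France."),
    build_prompt("Name the capital of France.", output_format="one word"),
]
for prompt, answer in compare_phrasings(toy_model, variants).items():
    print(repr(prompt), "->", answer)
```

The only difference between the two variants is one added line, yet the responses differ. With a real model, the same side-by-side comparison is a practical way to study how sensitive it is to wording.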

Fine-tuning adds to the mystery surrounding black box models. It is a crucial step in adapting models such as ChatGPT and BERT to specific tasks or domains. While fine-tuning improves the adaptability of these models, it also raises ethical concerns about bias and the possible reinforcement of existing societal disparities, because a model fine-tuned on skewed data can learn and amplify that skew. Balancing performance gains against the risk of amplifying biases in the training data is therefore essential when building systems meant to handle complex scenarios.
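One simple way to check whether fine-tuning has shifted a model's behaviour across groups is to compare positive-prediction rates per group, known as demographic parity. This is only one of several fairness metrics. The sketch assumes binary predictions and a group label for each case. The predictions shown are hypothetical, not outputs of any real model.

```python
from collections import defaultdict
from typing import Dict, Sequence

def positive_rates(predictions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Share of positive (1) predictions within each group."""
    counts: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        counts[group] += 1
        positives[group] += pred
    return {g: positives[g] / counts[g] for g in counts}

def parity_gap(predictions: Sequence[int], groups: Sequence[str]) -> float:
    """Demographic parity difference: highest minus lowest group positive rate."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
before = [1, 0, 1, 0, 1, 0, 1, 0]  # hypothetical predictions of the base model
after = [1, 1, 1, 0, 1, 0, 0, 0]   # hypothetical predictions after fine-tuning

print(parity_gap(before, groups), parity_gap(after, groups))  # → 0.0 0.5
```

A gap that widens after fine-tuning does not prove unfairness on its own. It does flag the model for closer review before deployment.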

In short, prompt engineering and fine-tuning highlight how difficult it is to understand and use black box models, including prominent ones like ChatGPT and BERT. As the pursuit of responsible and ethical AI continues, understanding these models better becomes pivotal to ensuring that their applications align with societal values and norms.

Cracking the Black Box Code: Navigating Ethical Challenges and Complexities

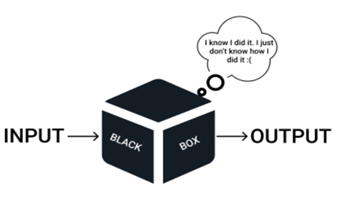

The opacity of black box models raises major ethical considerations and challenges in the dynamic field of artificial intelligence. As decision-making procedures grow more complex, guaranteeing accountability and openness becomes critical. Retrieval-augmented generation (RAG) adds another layer of complexity. In RAG, a model's input is supplemented with documents retrieved from an external source. This can improve the model's answers, but it also makes the path from input to decision harder to trace.
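The retrieval step of RAG can be sketched in a few lines. The example below ranks documents by word-count cosine similarity and prepends the best match to the prompt. This is a deliberately simple stand-in: practical RAG systems typically use learned embeddings and a vector index. The documents and prompt wording are hypothetical.

```python
import math
from collections import Counter
from typing import List

def tokenize(text: str) -> List[str]:
    """Lower-case whitespace tokens with basic punctuation stripped."""
    return [t.strip(".,?!").lower() for t in text.split() if t.strip(".,?!")]

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def augment_prompt(query: str, documents: List[str], k: int = 1) -> str:
    """Prepend the retrieved context to the user's question before it goes to the model."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {query}"

# Hypothetical knowledge base.
docs = [
    "The refund policy allows returns within 30 days.",
    "Our office is open Monday to Friday.",
    "Shipping is free for orders over 50 euros.",
]
print(augment_prompt("What is the refund policy?", docs))
```

The retrieved context changes what the model sees, so the final answer depends on both the retriever and the model. This is why RAG makes decisions harder to trace.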

Black box models therefore present a two-pronged dilemma. They can perform difficult tasks and produce human-like responses, yet the concerns they raise about accountability, transparency, and ethics are very real. As AI develops, understanding the particulars of black box models becomes essential for building trust and for guaranteeing the responsible development and application of these sophisticated technologies. Navigating the complicated world of AI decision-making will require balancing innovation against ethical concerns. Only then can we build a future in which artificial intelligence benefits society as a whole.

How can ethical rules be incorporated to address transparency and accountability challenges in black box models?

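Answer: Ethical rules can be built into the way models are developed and deployed. Examples include documenting training data and known limitations, auditing outputs for bias, using explanation tools to justify individual decisions, and assigning clear human responsibility for outcomes. These measures address the transparency and accountability challenges and help ensure that the benefits of AI are distributed equitably.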

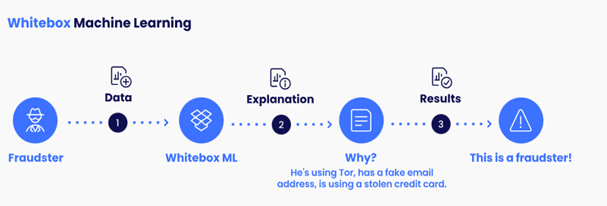

White Box Models: Transparency and Ethical Strides

In healthcare, interpretability is more than a preference; it is often required. Healthcare professionals need to understand how AI-derived diagnoses and treatment recommendations are produced. White box models build confidence by showing which variables influence each decision. This transparency matters for regulatory approval and also addresses ethical concerns. It ensures that AI acts as a supporting tool in decision-making rather than an opaque and potentially unpredictable one.
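A linear scoring model is a classic white box: its output is a sum of per-feature contributions that a clinician can read directly. The sketch below uses hand-set, hypothetical coefficients and feature names purely for illustration. It is not a clinical model.

```python
from typing import Dict, List, Tuple

# Hypothetical coefficients for illustration only; not derived from any clinical data.
WEIGHTS: Dict[str, float] = {"age_over_60": 1.2, "smoker": 0.8, "systolic_bp_over_140": 1.5}
INTERCEPT = -2.0

def explain_score(patient: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the linear risk score and each feature's additive contribution, largest first."""
    contributions = [(name, w * patient.get(name, 0.0)) for name, w in WEIGHTS.items()]
    score = INTERCEPT + sum(c for _, c in contributions)
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions

score, parts = explain_score({"age_over_60": 1, "smoker": 0, "systolic_bp_over_140": 1})
print(f"risk score: {score:.2f}")
for name, contribution in parts:
    print(f"  {name}: {contribution:+.2f}")
```

Every number in the output traces back to a visible coefficient. This is the kind of transparency that regulators and clinicians can audit.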

Reconciling the transparency of white box models with the innovation of black box models is difficult but achievable. Hybrid models, which combine white box and black box techniques, are a promising solution. They integrate interpretable components into black box systems, which is especially valuable where transparency is essential, as in healthcare. This approach keeps the performance advantages of black box models while providing the transparency that critical decisions require.
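One common way to attach an interpretable component to a black box is a global surrogate. In this approach, a simple model is trained to mimic the black box's outputs, and its "fidelity" (how often it agrees with the black box) is reported. The sketch fits a one-rule decision stump in plain Python. `black_box` is a hypothetical opaque model, and the evaluation grid is an illustrative assumption.

```python
from typing import List, Sequence, Tuple

def black_box(x: Sequence[float]) -> int:
    """Hypothetical opaque model: we can call it, but pretend we cannot inspect it."""
    return int(0.7 * x[0] + 0.3 * x[1] ** 2 > 0.5)

def fit_stump(X: List[Sequence[float]], y: List[int]) -> Tuple[int, float, float]:
    """Find the rule 'x[j] > t' that best reproduces y. Returns (j, t, fidelity)."""
    best = (0, 0.0, -1.0)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            preds = [int(row[j] > t) for row in X]
            fidelity = sum(p == label for p, label in zip(preds, y)) / len(y)
            if fidelity > best[2]:
                best = (j, t, fidelity)
    return best

# Query the black box on a grid of inputs and fit the surrogate to its answers.
grid = [(i / 10, k / 10) for i in range(11) for k in range(11)]
labels = [black_box(x) for x in grid]
feature, threshold, fidelity = fit_stump(grid, labels)
print(f"surrogate rule: x[{feature}] > {threshold} (agrees with black box on {fidelity:.0%} of points)")
```

The black box still makes the actual predictions. The stump offers a human-readable approximation, and its fidelity score shows how far that approximation can be trusted.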

This balance between transparency and innovation raises the question of which should take precedence in a given situation. In sectors where human lives are at stake, such as healthcare, transparency usually comes first. This is both for ethical reasons and because AI-driven decisions must be justifiable. In domains where interpretability matters less, the trade-off may favour the efficiency and predictive power of black box models.

Black Box vs White Box Models:

Black box models excel in settings requiring adaptability for managing complex tasks but face challenges in transparency, especially in regulated industries. White box models, offering transparent decision pathways, prove valuable in businesses where understanding and justifying decisions are paramount, even though they may have limitations in handling intricate situations. The choice between black box and white box models hinges on the specific requirements and goals of the application or industry in question.

How does the intricate nature of decision-making in complex scenarios make black box models more suitable than white box models?

Answer: Black box models such as deep neural networks can learn intricate, nonlinear patterns and relationships within vast datasets. This flexibility often lets them produce more accurate and nuanced outcomes in complex scenarios than white box models such as short rule sets, small decision trees, or linear models. The simpler structure of those models limits the relationships they can represent.

Adaptability to Dynamic Environments:

In what ways does the adaptability of black box models allow them to thrive in dynamic and rapidly changing environments?

Answer: When equipped with continuous (online) learning mechanisms, black box models can adjust to evolving environments as new data arrives. This adaptability is valuable where conditions change rapidly, allowing the model to keep making informed decisions in real time. White box models built from fixed rules, by contrast, may respond less flexibly to such changes unless their rules are revised.
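Continuous learning can be illustrated with online stochastic gradient descent, which updates a model after every new observation. The sketch uses a one-feature linear model. It simulates a hypothetical environment whose true relationship changes abruptly halfway through the data stream. The learning rate and noise level are illustrative assumptions.

```python
import random
from typing import List, Tuple

def online_sgd(stream: List[Tuple[float, float]], lr: float = 0.05) -> List[float]:
    """Update a one-feature linear model y ≈ w*x after every observation; return w over time."""
    w = 0.0
    history = []
    for x, y in stream:
        error = w * x - y
        w -= lr * error * x  # gradient step on squared error for this single sample
        history.append(w)
    return history

rng = random.Random(0)
# Hypothetical drifting environment: the true slope jumps from 2.0 to -1.0 at step 1000.
stream = []
for t in range(2000):
    x = rng.uniform(-1.0, 1.0)
    slope = 2.0 if t < 1000 else -1.0
    stream.append((x, slope * x + rng.gauss(0.0, 0.1)))

history = online_sgd(stream)
print("slope estimate before drift:", round(history[999], 2))
print("slope estimate at the end:  ", round(history[-1], 2))
```

Because the model never stops updating, it tracks the new slope after the change without being retrained from scratch. The same mechanism also lets it drift if the incoming data is noisy or biased.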

How Does a Black-Box Machine Learning Model Work?

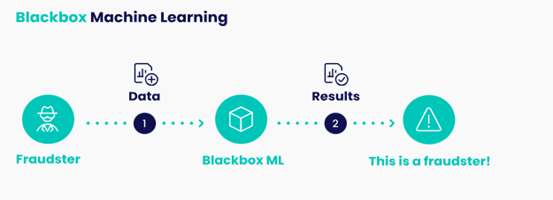

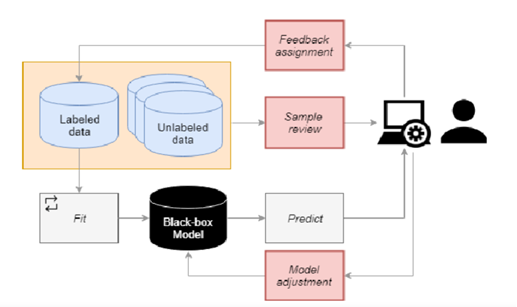

Black-box machine learning algorithms are in widespread use. They learn from sample data to identify patterns and anomalies, in effect forming internal rules for recognising those patterns and making decisions based on them. In practice, they then process data about specific scenarios and apply what they have learned to answer relevant questions.

Step 1: Training the Algorithm with Sample Data

Initially, users provide the algorithm with extensive data relevant to the types of questions they intend to ask. The algorithm explores this data, identifying patterns and anomalies. In the process it learns internal rules, in practice parameter values, that determine when to make or refrain from making specific distinctions.

Step 2: Asking a Question by Inputting Data Specific to a Real-World Scenario

In the subsequent step, users input data related to a particular real-world situation into the algorithm. The algorithm then employs its established rules to make decisions and generate a result.

Step 3: Getting a Result from the Algorithm

The black-box algorithm yields a result based on the provided data but maintains opacity regarding the decision-making process. Unlike white-box algorithms, it doesn’t disclose which decisions were made or the factors considered in making those decisions.
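Steps 1 to 3 can be sketched with a k-nearest-neighbours classifier used as a simple stand-in learner. It does not write explicit rules. It does illustrate the workflow: training data goes in first, then a new scenario is submitted and only a label comes back. The class name, data, and labels below are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

class NearestNeighbours:
    """Stand-in learner: stores labelled examples and answers by majority vote of the k closest."""

    def __init__(self, k: int = 3):
        self.k = k
        self.examples: List[Tuple[Sequence[float], str]] = []

    def fit(self, X: List[Sequence[float]], y: List[str]) -> "NearestNeighbours":
        # Step 1: the training data is supplied up front.
        self.examples = list(zip(X, y))
        return self

    def predict(self, x: Sequence[float]) -> str:
        # Steps 2-3: a new scenario goes in; only the final label comes out, with no reasons attached.
        nearest = sorted(self.examples, key=lambda ex: math.dist(ex[0], x))[: self.k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]

# Hypothetical training data: two clusters of scenarios labelled "low" and "high".
X = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
y = ["low", "low", "low", "high", "high", "high"]
model = NearestNeighbours(k=3).fit(X, y)
print(model.predict((1.5, 1.5)), model.predict((8.5, 8.5)))
```

From the caller's side, `predict` returns just an answer. That is the opacity Step 3 describes, even though this particular learner is simple enough to inspect.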

Step 4: Making Adjustments Based on Multiple Outcomes

Some black-box models continue to learn from new, context-specific data after their initial training. Users can also adjust a model manually, but its obscured inner workings make this harder than with white-box models. Manual adjustment usually involves running many tests with diverse scenarios to observe how the model responds. Users then make informed changes based on the outcomes, bearing in mind that a model which keeps learning may shift slightly with each scenario it processes.
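Running multiple scenarios to see how a model responds can be organised as a one-at-a-time sensitivity analysis. Each input is nudged in turn while the others stay fixed, and the resulting change in output is recorded. In the sketch, `opaque_model` is a hypothetical stand-in for a trained model's prediction call. The step size `delta` is an illustrative choice.

```python
from typing import Callable, Dict, Sequence

def one_at_a_time_sensitivity(model: Callable[[Sequence[float]], float],
                              baseline: Sequence[float],
                              delta: float = 0.1) -> Dict[int, float]:
    """Nudge each input by +delta (others fixed) and record how much the output moves."""
    base_out = model(baseline)
    effects = {}
    for i in range(len(baseline)):
        scenario = list(baseline)
        scenario[i] += delta
        effects[i] = model(scenario) - base_out
    return effects

def opaque_model(x: Sequence[float]) -> float:
    # Hypothetical black box; in practice this would call a trained model's predict function.
    return 3.0 * x[0] + 0.5 * x[1] ** 2 - 0.2 * x[2]

effects = one_at_a_time_sensitivity(opaque_model, [1.0, 1.0, 1.0])
print(effects)
```

The first input moves the output most, and the third pushes it down. A user can uncover this ranking without seeing the formula. The results hold only near the chosen baseline, so several baselines are usually tested.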

Applications and Use Cases

In the ever-evolving realm of artificial intelligence, black box models have found widespread and impactful applications across sectors including finance, healthcare, and engineering. Their adoption in industries with a heightened need for accountability, such as finance, brings distinctive challenges. In complex engineering scenarios, by contrast, their advantages often outweigh these challenges and are changing how problems are approached and solutions developed.

Buyer’s Decision-Making Process in Black Box Models: Unveiling the Purchasing Journey

Navigating the Complex Terrain of Consumer Decision-Making in Black Box Models

1. Recognition of Need:

The first phase, in which consumers recognise a need, is comparable to a cognitive spark. This awareness is often triggered by sources ranging from targeted advertisements driven by machine learning algorithms to personalised recommendations based on past behaviour. Understanding this stage means acknowledging the influence of predictive modelling techniques that anticipate consumer needs before they are explicitly expressed.

2. Information Exploration:

As consumers embark on an information-seeking journey, the digital landscape becomes a vast terrain of exploration. Machine learning algorithms play a pivotal role in curating personalised content, ensuring that consumers are presented with information tailored to their preferences. Natural Language Processing (NLP) algorithms contribute to enhancing the efficiency of information retrieval, making the exploration phase more intuitive and user-centric.

3. Assessment of Alternatives:

In the era of advanced algorithms, algorithmic decision-making tools support a comprehensive evaluation of alternatives. Recommendation systems built on collaborative filtering or content-based filtering help consumers compare options by presenting relevant choices based on their preferences and behaviours. Together, these algorithms make the evaluation process dynamic and personalised.

4. Decision to Purchase:

The decision to purchase is a culmination of algorithmic influence and consumer agency. Pricing optimization algorithms, often employed in e-commerce platforms, dynamically adjust prices based on demand, competitor pricing, and other variables, impacting the final decision. Additionally, sentiment analysis algorithms gauge online reviews, influencing consumer confidence and shaping their ultimate choice.

5. Post-Purchase Engagement:

In the aftermath of a purchase, post-purchase engagement is influenced by algorithms that facilitate personalised post-sale interactions. Customer Relationship Management (CRM) systems leverage machine learning to anticipate customer preferences, ensuring tailored after-sales experiences. The integration of chatbots, driven by Natural Language Understanding (NLU), enhances customer support, contributing to post-purchase satisfaction.
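The collaborative filtering mentioned under the assessment of alternatives can be sketched as item-based filtering. Each unseen item is scored by summing its cosine similarity to the items a user has already rated, weighted by those ratings. The users, items, and ratings below are hypothetical. Production recommenders add normalisation, implicit feedback, and much larger matrices.

```python
import math
from typing import Dict, List

Ratings = Dict[str, Dict[str, float]]  # user -> {item: rating}

def item_vectors(ratings: Ratings) -> Dict[str, Dict[str, float]]:
    """Pivot user->item ratings into item->user ratings."""
    items: Dict[str, Dict[str, float]] = {}
    for user, row in ratings.items():
        for item, r in row.items():
            items.setdefault(item, {})[user] = r
    return items

def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse rating vectors."""
    dot = sum(a[u] * b[u] for u in set(a) & set(b))
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def recommend(ratings: Ratings, user: str, top_n: int = 2) -> List[str]:
    """Rank items the user has not rated by similarity-weighted ratings of items they have."""
    items = item_vectors(ratings)
    seen = ratings[user]
    scores = {
        candidate: sum(cosine(items[candidate], items[s]) * r for s, r in seen.items())
        for candidate in items
        if candidate not in seen
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

# Hypothetical purchase ratings on a 1-5 scale.
ratings = {
    "ana": {"laptop": 5, "mouse": 4, "desk": 1},
    "ben": {"laptop": 4, "mouse": 5, "keyboard": 5},
    "cara": {"desk": 5, "chair": 4, "laptop": 1},
    "dev": {"laptop": 5, "mouse": 4},
}
print(recommend(ratings, "dev", top_n=3))  # → ['keyboard', 'desk', 'chair']
```

"dev" is recommended the keyboard first because it was rated highly by a shopper whose laptop and mouse ratings resemble dev's. This is the core intuition behind collaborative filtering.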

Technology is redefining how consumers make decisions in a world shaped by black box models. The combination of consumer demand and algorithmic expertise is creating a landscape in which efficiency, user satisfaction, and personalisation come together to shape the future of retail and consumer experiences.

Black Box Models in Finance:

Challenges:

1. Interpretable Decision-Making

Finance demands transparent decision-making due to stringent regulatory requirements. The inherent opacity of black box models creates difficulties in providing clear explanations for decisions, raising concerns regarding regulatory compliance.

2. Ethical Considerations

Financial decisions wield substantial influence on individuals and markets. The potential for biases in black box models introduces ethical concerns, as decisions derived from these models may inadvertently impact individuals and communities.

3. Risk Assessment

The challenge lies in the inability to fully comprehend the decision-making process of black box models, hindering accurate risk assessment in financial transactions. Understanding the model’s rationale is crucial for effective risk management in the financial sector.

Benefits:

1. Pattern Recognition

Black box models excel in recognizing intricate patterns and anomalies in financial data. This capability is particularly advantageous for tasks such as detecting fraudulent activities, predicting market trends, and optimising investment strategies.

2. Adaptability

Financial markets are dynamic, and black box models that are continuously retrained or updated can navigate this ever-changing landscape more effectively. This adaptability improves their capacity to make informed decisions as market conditions evolve.

3. High-Volume Data Processing

Black box models demonstrate efficiency in processing large volumes of financial data, making them well-suited for tasks like algorithmic trading, portfolio optimization, and risk assessment. Their adeptness in handling massive datasets provides a competitive edge in the fast-paced financial industry.
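The pattern-recognition benefit is easiest to see in anomaly detection, for example flagging unusual transaction amounts. The sketch below is a simple statistical baseline rather than a black box model. It uses the median-based modified z-score recommended by Iglewicz and Hoaglin (constant 0.6745, threshold 3.5). Unlike a plain z-score, this statistic is not masked by the outlier it is trying to find. The transaction amounts are hypothetical.

```python
import statistics
from typing import List

def flag_anomalies(amounts: List[float], threshold: float = 3.5) -> List[int]:
    """Return indices whose modified z-score (median/MAD based) exceeds the threshold."""
    median = statistics.median(amounts)
    mad = statistics.median(abs(a - median) for a in amounts)  # median absolute deviation
    if mad == 0:
        return []
    return [i for i, a in enumerate(amounts) if abs(0.6745 * (a - median) / mad) > threshold]

# Hypothetical card transactions; one amount is far outside the usual range.
transactions = [42.0, 38.5, 45.0, 40.0, 39.9, 41.2, 2500.0, 43.1, 37.8, 44.0]
print(flag_anomalies(transactions))  # → [6]
```

Black box fraud models go further by learning combinations of signals such as amount, time, merchant, and location. Baselines like this one remain useful as a transparent point of comparison.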

What ethical issues arise due to potential biases in black box models employed for financial decisions?

Answer: The ethical concerns primarily revolve around the substantial repercussions that financial decisions can exert, given the potential biases inherent in black box models. The worry lies in the inadvertent impact on individuals and communities, prompting considerations of fairness and equity in financial systems.

Black Box Models in Engineering:

Why is it essential to comprehend the decision-making of black box models for ensuring safety and reliability in engineering applications?

Answer: In vital domains like aerospace or autonomous vehicles, guaranteeing safety and reliability hinges on a profound understanding of the decision-making processes inherent in black box models.

Challenges in Engineering Applications:

- Safety and Reliability:

In engineering applications, particularly those integral to critical infrastructure like aerospace or autonomous vehicles, prioritising safety and reliability is paramount. A comprehensive understanding of the decision-making processes embedded in black box models is essential to uphold the safety and reliability of these systems.

- Regulatory Compliance:

Engineering industries are subject to rigorous regulatory standards. The integration of black box models poses challenges as regulatory compliance often necessitates transparency in decision-making processes. Achieving this transparency can be intricate but is crucial for adhering to regulatory requirements.

- Human Oversight:

Black box models may operate in environments where human oversight is indispensable. Grasping how these models arrive at decisions is vital for engineers and operators. This understanding enables them to intervene or make informed decisions, especially in situations that deviate from the norm, ensuring a proactive approach to system management.
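One common pattern for keeping humans in the loop is to accept only confident predictions automatically and route the rest to an operator. The sketch assumes the model reports a confidence score with each prediction. The 0.9 threshold and the labels are hypothetical. Model confidence scores are not always well calibrated, so in practice the threshold needs validating.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Decision:
    label: str
    confidence: float
    needs_review: bool

def route(predictions: List[Tuple[str, float]], min_confidence: float = 0.9) -> List[Decision]:
    """Accept confident predictions automatically; flag the rest for a human operator."""
    return [Decision(label, conf, conf < min_confidence) for label, conf in predictions]

# Hypothetical outputs of a perception model: (label, confidence).
preds = [("obstacle", 0.97), ("clear", 0.62), ("obstacle", 0.88)]
for decision in route(preds):
    print(decision)
```

The threshold sets the trade-off directly. Raising it sends more cases to people, while lowering it gives the model more autonomy.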

In what way does the lack of comprehension of the decision-making process of black box models affect risk assessment in the financial sector?

Answer: The challenge arises in accurately assessing risks associated with financial transactions because of the limited understanding of the rationale behind decisions made by black box models.

Benefits of Black Box Models in Engineering Applications:

- Complex Problem Solving:

Black box models demonstrate proficiency in solving complex engineering problems by discerning intricate patterns and relationships within vast datasets. This capability proves invaluable for tasks such as structural analysis, system optimization, and predictive maintenance.

- Optimizing Design Processes:

During the design phase, black box models assist engineers in optimising parameters, predicting performance outcomes, and identifying design flaws. This contributes to the streamlining of the engineering process, fostering the development of more efficient and innovative solutions.

- Efficient Resource Allocation:

Black box models play a crucial role in optimising resource allocation by analysing data related to energy consumption, material usage, and operational efficiency. This analytical capacity leads to more sustainable engineering practices and the implementation of cost-effective solutions.

In both finance and engineering, the incorporation of black box models demands a delicate equilibrium. This involves harnessing the benefits of advanced machine learning techniques while navigating the challenges posed by their inherent opacity.

How do black box models contribute to optimizing resource allocation in engineering, and in what way do they foster sustainable practices?

Answer: Black box models contribute to optimizing resource allocation in engineering by analysing data pertaining to energy consumption, material usage, and operational efficiency. This analytical capability leads to more sustainable engineering practices, ensuring efficient utilisation of resources.

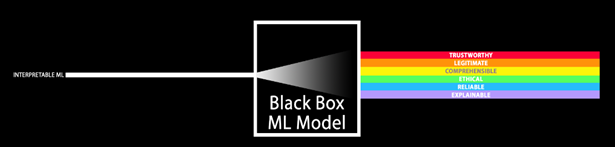

Exploring Explainable AI’s Opportunities in High-Accuracy Black-Box Models

Explainable AI (XAI) is a game-changer because it opens up the complex world of black-box models, which are known for their accuracy but frequently criticised for their lack of transparency. With tools such as LIME and SHAP, businesses can keep the accuracy of black-box models while gaining important insights into the particular variables driving each prediction.

LIME: Turning Darkness into Light

LIME, or Local Interpretable Model-agnostic Explanations, is a prominent tool for interpreting black-box models. It explains individual predictions by perturbing the input around the instance of interest and observing how the model's output changes. It then fits a simple, interpretable model that approximates the black box in that local neighbourhood. By reducing a complex decision to the contributions of a few features, LIME builds trust and gives stakeholders useful insights.
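The core LIME idea can be sketched for continuous features in plain Python. The steps are to sample points around the instance, weight them by proximity, and fit a weighted linear model whose coefficients serve as the local explanation. This is a simplified illustration, not the `lime` package's implementation. The package also handles text, images, and discretised tabular features. The sampling scale, kernel width, and `black_box` function are assumptions chosen for the example.

```python
import math
import random
from typing import Callable, List, Sequence

def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def lime_explain(model: Callable[[Sequence[float]], float], instance: Sequence[float],
                 num_samples: int = 500, scale: float = 0.5, kernel_width: float = 0.75,
                 seed: int = 0) -> List[float]:
    """Return local linear weights (one per feature) approximating `model` around `instance`."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(num_samples):
        z = [v + rng.gauss(0.0, scale) for v in instance]            # perturbed sample
        rows.append([1.0] + [zi - vi for zi, vi in zip(z, instance)])  # intercept + offsets
        targets.append(model(z))                                       # query the black box
        weights.append(math.exp(-math.dist(z, instance) ** 2 / kernel_width ** 2))  # proximity
    p = len(instance) + 1
    # Weighted least squares via the normal equations: (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)] for i in range(p)]
    xtwy = [sum(w * r[i] * t for r, w, t in zip(rows, weights, targets)) for i in range(p)]
    return solve(xtwx, xtwy)[1:]

def black_box(x: Sequence[float]) -> float:
    # Hypothetical opaque model with a nonlinear term.
    return x[0] ** 2 + 3.0 * x[1]

local_weights = lime_explain(black_box, [1.0, 2.0])
print([round(w, 2) for w in local_weights])
```

Near the point (1, 2), the explanation comes out close to the model's local slopes of roughly 2 and 3. This illustrates that LIME describes behaviour in one neighbourhood rather than the whole model.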

SHAP: The Value of Teamwork

SHAP (SHapley Additive exPlanations) treats a prediction as a cooperative game. Using Shapley values from cooperative game theory, it assigns each input feature a share of the difference between the model's prediction and a baseline prediction. Imagine the features as players in a game, each contributing to the predicted outcome. SHAP turns this complexity into an organised, additive breakdown that is easy to understand, making the abstract behaviour of black-box models understandable.
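For a handful of features, Shapley values can be computed exactly by enumerating every coalition of features. In this sketch, features outside a coalition are set to a single baseline value. The `shap` library approximates the same quantity more efficiently, for example with Kernel SHAP or Tree SHAP. It typically averages over a background dataset rather than one baseline. The model and inputs are hypothetical, and brute-force enumeration grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set

def shapley_values(model: Callable[[Sequence[float]], float],
                   instance: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(instance)

    def value(coalition: Set[int]) -> float:
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(x)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                weight = factorial(len(s)) * factorial(n - len(s) - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))  # weighted marginal contribution
    return phi

def model(x: Sequence[float]) -> float:
    # Hypothetical model: an additive term plus an interaction between features 1 and 2.
    return 2.0 * x[0] + x[1] * x[2]

phi = shapley_values(model, instance=[1.0, 2.0, 3.0], baseline=[0.0, 0.0, 0.0])
print([round(v, 6) for v in phi])  # → [2.0, 3.0, 3.0]
```

The interaction term is split equally between the two features that create it. The three values add up to the gap between the prediction and the baseline prediction (8 here), which is the additivity that gives SHAP its name.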

Through tools such as SHAP and LIME, explainable AI links organisations to the insights hidden inside black-box models. Both tools are model-agnostic, so they can be applied whatever algorithm sits underneath. This ranges from complex models such as deep neural networks to simpler ones such as decision trees, linear regression, or logistic regression. The resulting transparency and interpretability help businesses realise the full potential of their models and make well-informed decisions with confidence.

In the ever-evolving landscape of artificial intelligence, black box models such as those used in autonomous vehicles reveal a delicate balance between innovation and transparency. Autonomous driving, which relies on deep learning and sensor fusion, shows how this complexity meets real-world application across diverse industries.

The industry must navigate shifting expectations of accountability and ethical decision-making. Transparency is imperative in healthcare, where AI applications affect patient well-being. It is equally imperative in finance, where decisions carry significant consequences. In these sectors, the benefits of innovation must coexist with ethical considerations and regulatory compliance. The challenge is to reconcile the power of black box models with the need for interpretability and accountability, so that they are deployed responsibly and in line with societal values.

These evolving expectations demand a continuing commitment to refining AI models, embracing transparency, and addressing biases. Black box models sit where technology and responsibility intersect. Looking ahead, a nuanced understanding of AI’s impact on society will light the path toward a more responsible and innovative integration of artificial intelligence into our daily lives.