Introduction

Vision-Language Pretraining (VLP) trains a single model on paired image and text data so that it can work across both modalities. Because they learn from the two modalities together, VLP models can perform tasks such as image captioning, visual question answering (VQA), and cross-modal retrieval. This post covers the technical underpinnings of VLP, the architectures driving the field, and the open problems in multimodal learning.

1. What Is Vision-Language Pretraining (VLP)?

VLP involves training a model on paired image-text datasets so that it learns representations of both visual and linguistic input. Unlike traditional unimodal models, a VLP model jointly learns the semantic relations in language and the spatial features in images, along with how the two correspond.

Core Concepts

- Multimodal Input: Models are exposed to datasets containing aligned text and images, such as image descriptions or question-answer pairs.

- Cross-Modal Learning: The model learns how information from one modality (e.g., an image) correlates with another modality (e.g., a text description).

- Task-Agnostic Pretraining: Similar to LLMs’ pretraining on vast corpora of text, VLP models are pretrained on large-scale multimodal datasets and later fine-tuned on specific tasks.

2. Transformer Architectures in VLP

The underlying architecture in most VLP models is the transformer, originally designed for NLP. In the context of VLP, transformers are extended to handle both image and text modalities. Models like ViLBERT, UNITER, and CLIP have adapted transformers for joint vision-language representation learning.

Key Mechanisms

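- Vision Encoders: These models encode visual information into feature vectors using convolutional neural networks (CNNs) or vision transformers (ViTs). ViLBERT and UNITER, for example, take region features from a pretrained object detector (Faster R-CNN), while CLIP encodes the whole image with a ResNet or a ViT.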

- Text Encoders: The text is tokenized and encoded with a transformer, as in language models such as BERT or GPT.

- Cross-Modal Attention: This mechanism allows the model to learn relationships between the two modalities by aligning text tokens with visual regions, enabling a deeper understanding of the data.
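As a rough illustration of the cross-modal attention item above, the sketch below lets text tokens act as queries over image-region keys and values. It is a single-head NumPy toy with random weights; the function name `cross_attention`, the dimensions, and the projection matrices are illustrative assumptions, not taken from any of the models named here.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max along the axis for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(text_tokens, image_regions, w_q, w_k, w_v):
    """Text tokens attend over image regions (single head, no masking)."""
    q = text_tokens @ w_q                      # (n_text, d_k)
    k = image_regions @ w_k                    # (n_regions, d_k)
    v = image_regions @ w_v                    # (n_regions, d_k)
    scores = q @ k.T / np.sqrt(q.shape[-1])    # (n_text, n_regions)
    weights = softmax(scores, axis=-1)         # each row sums to 1
    return weights @ v, weights

# Toy inputs: 5 text tokens and 3 image regions with different feature sizes.
rng = np.random.default_rng(0)
d_text, d_img, d_k = 8, 6, 4
text_tokens = rng.normal(size=(5, d_text))
image_regions = rng.normal(size=(3, d_img))
w_q = rng.normal(size=(d_text, d_k))
w_k = rng.normal(size=(d_img, d_k))
w_v = rng.normal(size=(d_img, d_k))

attended, weights = cross_attention(text_tokens, image_regions, w_q, w_k, w_v)
print(attended.shape)  # (5, 4): one visually informed vector per text token
print(weights.shape)   # (5, 3): one weight per (text token, image region) pair
```

Each row of `weights` shows how strongly one text token draws on each image region. Swapping the roles of the two inputs gives the image-to-text direction.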

3. Popular VLP Models

ViLBERT (Vision-and-Language BERT)

ViLBERT extends BERT to process both visual and textual input. It consists of two parallel transformer streams: one for image regions and one for text. The streams interact through co-attentional transformer layers, in which each stream's queries attend over the other stream's keys and values, allowing the model to learn relationships between the image and language representations.

UNITER (Universal Image-Text Representation)

UNITER takes a unified, single-stream approach, feeding image regions and text tokens into one transformer so that both modalities are embedded in a single latent space. By aligning image regions with text tokens, UNITER reported state-of-the-art results at the time of its publication on tasks such as visual reasoning and image-text matching.

CLIP (Contrastive Language-Image Pretraining)

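CLIP uses a contrastive learning approach, training on a large-scale dataset of image-text pairs (about 400 million pairs collected from the web in the original work). Instead of directly modeling pixel-to-word relationships, CLIP uses separate image and text encoders to learn a shared embedding space for the two modalities. CLIP does not generate text itself. Instead, it scores how well an image and a piece of text match, which enables zero-shot classification of unseen images against candidate text labels and retrieval of images matching a given textual description.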
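Matching an image against candidate text descriptions in a shared embedding space can be sketched as follows. The embeddings are hand-written toy vectors standing in for encoder outputs, and the helper `zero_shot_classify` and the temperature value of 0.07 are assumptions made for this example, not CLIP's actual API.

```python
import numpy as np

def l2_normalize(x):
    # Scale vectors to unit length so dot products become cosine similarities.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def zero_shot_classify(image_emb, label_embs, temperature=0.07):
    """Return softmax probabilities over candidate text labels for one image."""
    image_emb = l2_normalize(image_emb)
    label_embs = l2_normalize(label_embs)
    logits = label_embs @ image_emb / temperature   # (n_labels,)
    logits = logits - logits.max()                  # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum()

# Toy stand-ins for text-encoder outputs, one per candidate prompt.
labels = ["a photo of a dog", "a photo of a cat", "a photo of a car"]
label_embs = np.array([[1.0, 0.0, 0.0],
                       [0.0, 1.0, 0.0],
                       [0.0, 0.0, 1.0]])
# Toy stand-in for the image-encoder output of one image.
image_emb = np.array([0.1, 0.9, 0.2])

probs = zero_shot_classify(image_emb, label_embs)
best = labels[int(np.argmax(probs))]
print(best)  # a photo of a cat
```

No classifier is trained for these three labels. Changing the label set only means encoding a different list of prompts.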

4. Training Techniques in VLP

Masked Language Modeling and Image Masking

Much like BERT uses masked language modeling to pretrain by predicting masked-out words, VLP models adopt similar techniques. For example, during training, certain image regions may be masked, and the model learns to predict the missing visual information based on the corresponding text and the unmasked parts of the image.
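A minimal sketch of how the masked inputs might be prepared is shown below. The 15% masking rate, the `[MASK]` placeholder, and zeroing out masked region features are assumptions chosen for illustration, and `mask_text` and `mask_regions` are hypothetical helper names. The prediction heads and losses are omitted.

```python
import numpy as np

MASK_TOKEN = "[MASK]"

def mask_text(tokens, mask_prob, rng):
    """Replace a random subset of tokens with [MASK]; return inputs and targets."""
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            targets[i] = tok          # the model must recover this token
        else:
            masked.append(tok)
    return masked, targets

def mask_regions(region_feats, mask_prob, rng):
    """Zero out the feature vectors of a random subset of image regions."""
    is_masked = rng.random(region_feats.shape[0]) < mask_prob
    masked = region_feats.copy()
    masked[is_masked] = 0.0           # the model must reconstruct these regions
    return masked, is_masked

rng = np.random.default_rng(0)
tokens = "a dog catches a frisbee in the park".split()
region_feats = rng.normal(size=(6, 4))   # 6 regions, 4-dim toy features

masked_tokens, text_targets = mask_text(tokens, 0.15, rng)
masked_feats, region_mask = mask_regions(region_feats, 0.15, rng)
print(masked_tokens, text_targets)
print(region_mask)
```

During pretraining, the model would see `masked_tokens` and `masked_feats` together, so it can use the intact modality as context for the masked one.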

Contrastive Learning

In models like CLIP, contrastive learning is used to align image and text embeddings. This involves maximizing the similarity between paired image-text data and minimizing the similarity for mismatched pairs.
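The objective described above can be written as a symmetric cross-entropy over a batch similarity matrix, where row i of the image embeddings is paired with row i of the text embeddings. The sketch below is a NumPy illustration under that assumption. The function name `contrastive_loss` and the fixed temperature of 0.07 are choices made for this example; in practice the temperature is often learned.

```python
import numpy as np

def log_softmax(x, axis):
    # Stable log-softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss; row i of each input is a matched pair."""
    image_embs = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    text_embs = text_embs / np.linalg.norm(text_embs, axis=1, keepdims=True)
    logits = image_embs @ text_embs.T / temperature     # (N, N) cosine similarities
    idx = np.arange(logits.shape[0])
    # Image-to-text: each row should put its mass on the diagonal entry.
    loss_i2t = -log_softmax(logits, axis=1)[idx, idx].mean()
    # Text-to-image: each column should put its mass on the diagonal entry.
    loss_t2i = -log_softmax(logits, axis=0)[idx, idx].mean()
    return (loss_i2t + loss_t2i) / 2

# Perfectly aligned toy batch versus the same batch with the texts shifted by one.
image_embs = np.eye(4)
aligned = contrastive_loss(image_embs, np.eye(4))
shuffled = contrastive_loss(image_embs, np.roll(np.eye(4), 1, axis=0))
print(aligned < shuffled)  # True
```

Every other item in the batch serves as a negative, which is one reason contrastive pretraining tends to benefit from large batch sizes.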

5. Applications of Vision-Language Pretraining

Visual Question Answering (VQA)

One of the most notable applications of VLP is visual question answering, where a model is given an image and a corresponding question and must generate an answer based on its understanding of both modalities.

Image Captioning

In image captioning, VLP models generate natural language descriptions of images, providing context and relevant details. This requires the model to understand both the visual content and the structure of natural language.

Cross-Modal Retrieval

VLP models are also employed in cross-modal retrieval tasks, where given a query in one modality (e.g., text), the model retrieves the most relevant counterpart in the other modality (e.g., an image).
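Assuming the model already maps queries and candidates into a shared embedding space, retrieval reduces to a nearest-neighbor search. The sketch below ranks a small gallery by cosine similarity and computes Recall@K, a common retrieval metric. The helper names and toy vectors are illustrative only.

```python
import numpy as np

def retrieve_top_k(query_emb, gallery_embs, k):
    """Return indices and cosine similarities of the k closest gallery items."""
    query_emb = query_emb / np.linalg.norm(query_emb)
    gallery_embs = gallery_embs / np.linalg.norm(gallery_embs, axis=1, keepdims=True)
    sims = gallery_embs @ query_emb
    top = np.argsort(-sims)[:k]       # highest similarity first
    return top, sims[top]

def recall_at_k(text_embs, image_embs, k):
    """Fraction of text queries whose paired image (same row index) is in the top k."""
    hits = 0
    for i, query in enumerate(text_embs):
        top, _ = retrieve_top_k(query, image_embs, k)
        hits += int(i in top)
    return hits / len(text_embs)

# Toy stand-ins for image-encoder outputs (gallery) and text-encoder outputs (queries).
image_embs = np.array([[1.0, 0.0], [0.8, 0.6], [0.0, 1.0], [-1.0, 0.0]])
text_embs = np.array([[0.9, 0.1], [0.7, 0.7], [0.1, 0.9], [-0.9, 0.2]])

top, sims = retrieve_top_k(text_embs[0], image_embs, k=2)
print(top.tolist())  # [0, 1]
print(recall_at_k(text_embs, image_embs, k=1))
```

At real gallery sizes, the exhaustive dot product is often replaced by an approximate nearest-neighbor index, but the ranking principle is the same.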

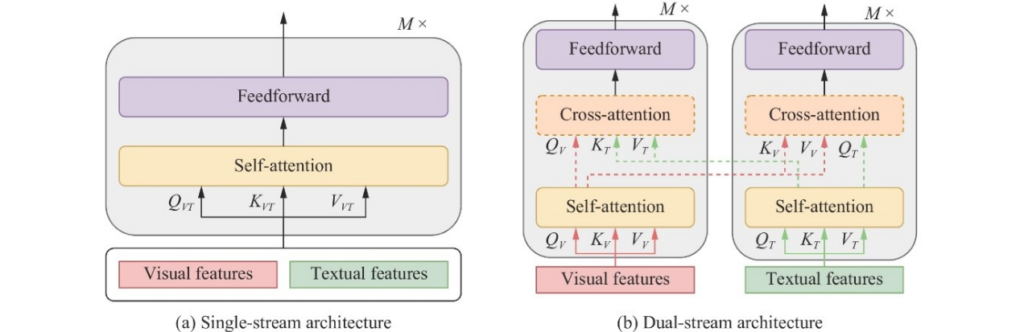

6. Single-Stream vs. Dual-Stream Architectures in VLP

Single-Stream Architecture:

- Overview: Processes both image and text inputs within a shared transformer framework.

- Advantages: Allows cross-modal interaction from the first layer and simplifies model architecture and training.

- Challenges: Can lead to modality imbalance, and self-attention cost grows quadratically with the combined length of the text and image sequences.

Dual-Stream Architecture:

- Overview: Maintains separate processing pipelines for image and text, combining them through cross-modal attention.

- Advantages: Allows for specialized feature learning in each modality and scales better, since each modality can be processed independently.

- Challenges: Adds complexity due to separate encoders and requires effective cross-modal fusion mechanisms.
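To make the contrast concrete, the sketch below builds a single-stream input by concatenating text and image embeddings with a modality-type embedding, then counts the pairwise attention scores each layout computes per layer and head. The function names, the type-embedding scheme, and the sequence lengths are assumptions for illustration, not the layout of any specific model.

```python
import numpy as np

def single_stream_input(text_emb, image_emb, type_emb):
    """Concatenate both modalities into one sequence, tagging each by modality."""
    text_part = text_emb + type_emb[0]     # type 0 marks text positions
    image_part = image_emb + type_emb[1]   # type 1 marks image positions
    return np.concatenate([text_part, image_part], axis=0)

def attention_score_counts(n_text, n_image):
    """Pairwise attention scores per layer and head under each layout."""
    return {
        "single_stream": (n_text + n_image) ** 2,
        "dual_stream_self": n_text ** 2 + n_image ** 2,
        # Text-to-image plus image-to-text cross-attention.
        "dual_stream_cross": 2 * n_text * n_image,
    }

rng = np.random.default_rng(0)
d_model, n_text, n_image = 16, 32, 36
text_emb = rng.normal(size=(n_text, d_model))
image_emb = rng.normal(size=(n_image, d_model))
type_emb = rng.normal(size=(2, d_model))

sequence = single_stream_input(text_emb, image_emb, type_emb)
counts = attention_score_counts(n_text, n_image)
print(sequence.shape)  # (68, 16)
print(counts)
```

The dual-stream self-attention and cross-attention counts add up to the single-stream count. The dual-stream saving comes from applying cross-modal attention in only some layers, or, as in a CLIP-style model, not at all.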

7. Challenges in VLP

Data Alignment

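One of the critical challenges in VLP is the availability of large, high-quality, aligned image-text datasets. Curated datasets like COCO or Flickr30k provide well-aligned, human-written captions, but they are small compared with text-only pretraining corpora. Scaling beyond them usually means collecting image-text pairs from the web, where the text is noisier and less tightly aligned with the image.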

Computational Resources

Training VLP models is computationally intensive, requiring vast amounts of data, powerful GPUs or TPUs, and careful optimization techniques to make training feasible on large datasets.

Cross-Modal Fusion

Effectively fusing the modalities (text and image) in a way that preserves their unique characteristics while learning a joint representation is a complex problem. Different architectures (e.g., dual-stream vs. single-stream models) attempt to tackle this with varying degrees of success.

8. Future Directions in VLP

Multimodal Few-Shot Learning

As VLP models grow in capability, an emerging area of research is few-shot multimodal learning, where models can be adapted to new tasks with minimal data. This is particularly promising for domains like medical imaging, where labeled data is often scarce.

Integration with Speech and Video

The VLP models discussed here focus on static images and text. A natural next step is to incorporate more dynamic modalities such as video and speech, which would open new avenues for applications like multimodal dialogue systems and video understanding.

Ethical Considerations

As with many AI systems, ethical considerations are critical in VLP. Issues like dataset bias, the generation of misleading visual-linguistic content, and privacy concerns need to be addressed as these models are deployed in real-world applications.

Conclusion

Vision-Language Pretraining (VLP) represents a significant step forward in the development of multimodal AI systems, allowing models to perform well on tasks that require an understanding of both language and vision. Models like CLIP and ViLBERT show how far joint pretraining can go across a wide range of applications. However, data alignment, computational efficiency, and ethical use remain open problems for future research.